-

Need to change my credit card as my old one is expiring, so I’m doing a stocktake of what I’ve subscribed to. Total service count is 28. Potentially more as I’m sure there are some that I don’t have a 1Password entry for. A good opportunity to trim some of them down.

-

I.S. Know: An Online Quiz About ISO and RFC Standards

An online quiz called “I.S. Know” was created for software developers to test their knowledge of ISO and RFC standards in a fun way.

-

Day 23: fracture

#mbjune

-

Brief Look At Microsoft's New CLI Text Editor

Kicking the tyres on Edit, Microsoft’s new text editor for the CLI.

-

Day 22: hometown

#mbjune

-

On macOS Permissions Again

Some dissenting thoughts about John Gruber and Ben Thompson’s discussion of Mac permissions and the iPad on the latest Dithering.

-

Returned to Tuggeranong for breakfast and a walk around the lake. The morning was quite crisp, with plenty of frost on the ground, but it was nice in the sun.

-

Day 21: silhouette

#mbjune

-

Out early in yet another bracing morning. Didn’t even know this temperature gauge showed negative numbers.

-

Being in meetings most of the day has left my voice hoarse and completely tired out. I don’t know how people who talk for a living do it (my suspicion is a decent microphone and regular vocal training).

-

Day 20: gather

#mbjune

-

Was writing “Airbus” in a message and mistakenly typed a P instead of a B. Found the results amusing, so I fired up ChatGPT’s image generator:

-

Done. Removed the Discover feed from Bluesky. I was getting a little too obsessed with it and depressed by it. It’s reverse chronological from here on out.

(Honestly, I should probably cut down on social media altogether, but one step at a time.)

-

Day 19: equal

Full disclosure: I used the Google Photos AI erasure tool to remove the front number-plates from these two silver Toyota Echoes (if you’re a regular reader, you can probably guess why). #mbjune

-

Found a particularly interesting bug in Safari when I tried adding a background transition to radio-button labels styled to look like regular buttons. Tapping the labels on the iPad causes the background colour to flicker:

Fixed it by working around the problem: I only need the transition when the user taps “Submit”, so I held off applying the transition attributes until that happens. But wow, what a weird way to fail.
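A minimal TypeScript sketch of that kind of workaround, assuming the stylesheet declares the background `transition` only under a hypothetical `.animate` class, so the labels carry no transition until the submit handler adds it. The `LabelLike` interface, the `enableTransitions` helper and the `label.choice-label` selector are illustrative names, not taken from the original form.

```typescript
// Sketch only: assumes the CSS declares `transition: background-color ...`
// solely under a hypothetical `.animate` class, so labels start without one.

// The slice of the DOM this code touches. A real HTMLLabelElement satisfies
// it structurally, and a plain object can stand in for it outside a browser.
interface ClassListLike {
  add(...tokens: string[]): void;
}

interface LabelLike {
  classList: ClassListLike;
}

// Opt the given labels into the transition. Intended to be called from the
// form's submit handler, so nothing animates while options are being tapped.
// Returns how many labels were updated.
function enableTransitions(
  labels: ArrayLike<LabelLike>,
  className: string = "animate",
): number {
  const items = Array.from(labels);
  for (const label of items) {
    label.classList.add(className);
  }
  return items.length;
}

// Browser wiring (hypothetical selector, not run here):
// document.querySelector("form")?.addEventListener("submit", () => {
//   enableTransitions(document.querySelectorAll<HTMLLabelElement>("label.choice-label"));
// });
```

Passing the result of `querySelectorAll` works because a `NodeList` is array-like; the narrow interface mainly keeps the logic testable without a DOM.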

-

Pro tip: don’t copy the `.git` directory of one repository over the `.git` directory of another. Some weird and wacky things will happen, from the active branch changing underneath you to the files you modified being in conflict with those same files being deleted.

-

Tried out the Micro.blog Raycast extension by Tynan Purdy. Works well. I am curious to know how customisable the input form is. A larger text box and a character counter would be nice.

-

Thinking of Meta putting ads in WhatsApp reminds me of when Microsoft tried ads in Skype. The pitch to users, as I understood it, was that the ads would help drive the conversation. I know my life could be spiced up with a conversation about dishwashing detergent.

-

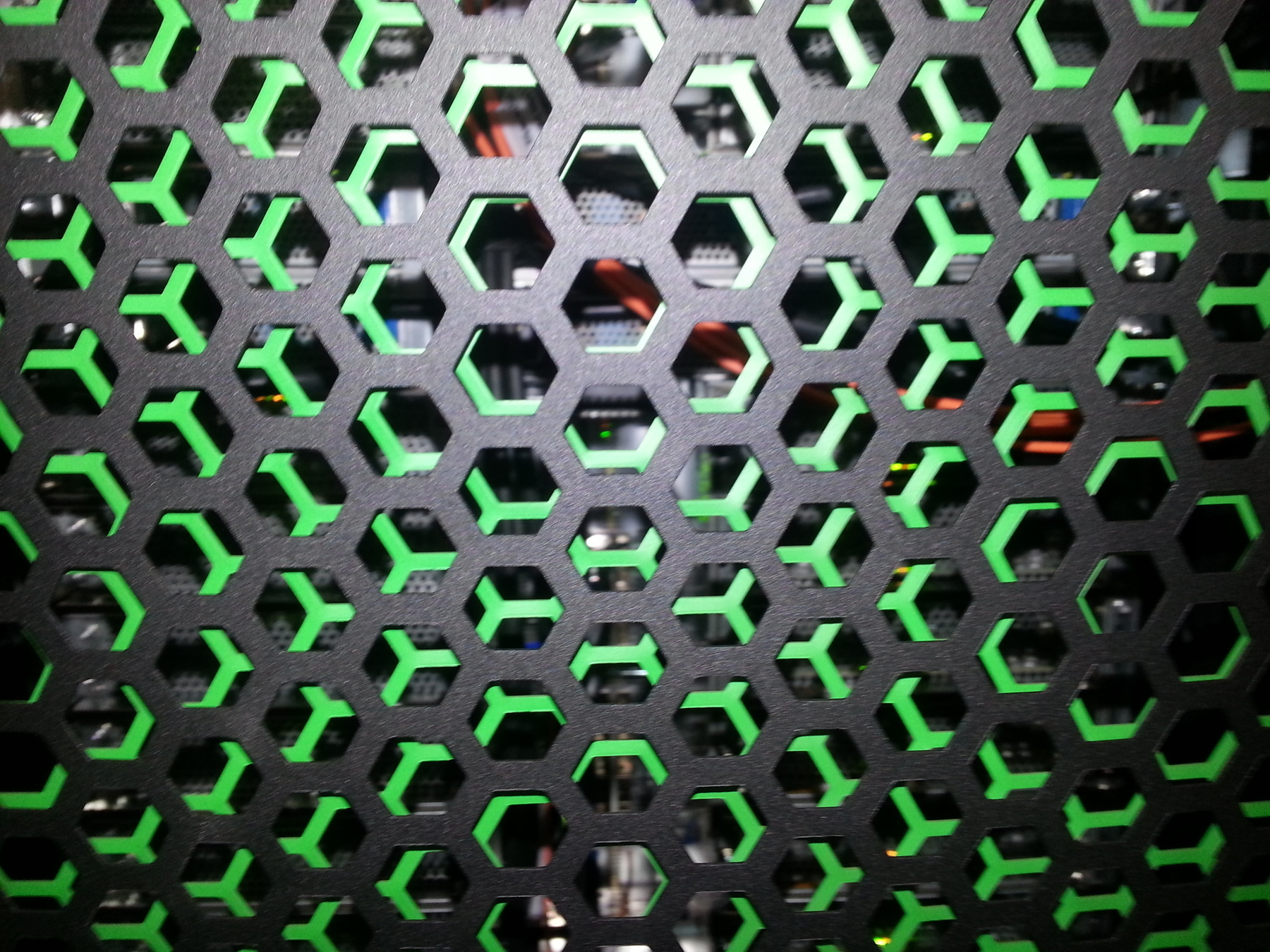

Day 18: texture

#mbjune

-

Mark this day as the one where I got swayed into building something on top of Kubernetes rather than hand-rolling it. I hope I don’t look back on this day when things are broken and delayed and I have no idea how to fix them.

-

I think I’ve decided that I prefer Canberra in summer to winter. Sure, it means you’re likely to get hot weather, but it needs to get really hot before you decide not to go outside. And getting outside when the temperature is at or below freezing is just as hard. 🥶

-

🔗 Nicholas Bate: The Greatest Productivity Tips, 159

> Simply because we do not affix a postage stamp to our e-mail does not mean it is free. That mail has a huge cost in productivity terms: for us, in did we craft it correctly first time? For others in lack of certainty perhaps of what is required of them. For others in being cc’d without need.
>
> No, mail isn’t free. It’s extraordinarily expensive.

So true. I would add that Slack is just as expensive, only you’re making micropayments over the course of the day instead of one lump sum.

-

Day 17: warmth

#mbjune

-

Just watched the WWDC video on Liquid Glass and I must say it looks pretty nice. Granted, I’ve not actually used any part of this new design yet, but if they can pull it off, it’ll be a refreshing new look.