-

Well, mark this one off your bucket list: appearing in someone’s travel vlog that the YouTube algorithm recommended to you. 😄

-

📘 Devlog

3rd March 2026

This is a crosspost from devlog.lmika.org. I’m going to try and write posts about what I’ve been working on over there, while crossposting them here. The original post was made using Weiro, another attempt at a blogging CMS I’ve been working on. Oof! Everyone’s building blogging CMSes now, apparently. Since starting work on this project, I’ve seen one other person announce their own CMS that was vibe-coded with Claude. No shame in that: making something that works for you is part of the joy of participating in the IndieWeb.

-

Ooh, the barge is back. This time it’s car-themed, probably because of the F1.

-

Oof! As a non-American, it’s sometimes a little hard to listen to Ben Thompson.

-

I do wonder if some of the patterns we’ve been using in software engineering, like multi-repo micro-service architecture, are actually a detriment to agentic coding. Those patterns were adopted to help human developers coordinate, but they subdivide the area in which agents can operate. A monolith in a single repo, remarkably, may actually be better here.

-

How is it that people making screenshot mark-up apps still don’t understand that the blend mode for highlights should be multiply, not mix with alpha? A real highlighter keeps the text black and doesn’t produce obvious overcoating where strokes overlap. This just looks like I’m smearing yellow paint everywhere.
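To see the difference, here's a minimal sketch comparing the two blend modes on single pixels. It assumes colours normalised to the range 0 to 1 and an idealised pure-yellow highlighter; the `RGB`, `Multiply` and `AlphaMix` names are made up for illustration and don't come from any particular mark-up app.

```go
package main

import "fmt"

// RGB is a colour with each channel normalised to the range [0, 1].
type RGB struct{ R, G, B float64 }

// Multiply blends a highlight over a base colour using the multiply mode:
// each channel of the base is scaled by the matching highlight channel.
func Multiply(base, hl RGB) RGB {
	return RGB{base.R * hl.R, base.G * hl.G, base.B * hl.B}
}

// AlphaMix blends using plain alpha compositing ("normal" mode at a given
// opacity): a linear interpolation from the base towards the highlight.
func AlphaMix(base, hl RGB, alpha float64) RGB {
	mix := func(b, h float64) float64 { return (1-alpha)*b + alpha*h }
	return RGB{mix(base.R, hl.R), mix(base.G, hl.G), mix(base.B, hl.B)}
}

func main() {
	black, white := RGB{0, 0, 0}, RGB{1, 1, 1}
	yellow := RGB{1, 1, 0} // idealised highlighter colour (assumption)

	// Over black text: multiply leaves it black, alpha mixing tints it.
	fmt.Println("text, multiply:", Multiply(black, yellow))        // {0 0 0}
	fmt.Println("text, alpha 0.5:", AlphaMix(black, yellow, 0.5))  // {0.5 0.5 0}

	// Two overlapping strokes on white paper: multiply gives the same
	// colour as one stroke, alpha mixing gets visibly more saturated.
	fmt.Println("overlap, multiply:", Multiply(Multiply(white, yellow), yellow))              // {1 1 0}
	fmt.Println("overlap, alpha 0.5:", AlphaMix(AlphaMix(white, yellow, 0.5), yellow, 0.5))  // {1 1 0.25}
}
```

With real ink colours that aren't pure yellow, multiply does darken overlaps a little, much like an actual highlighter, but the text underneath always stays black.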

-

Grand final bocce match at Fitzroy Gardens. Congrats to Foxy for winning the season.

-

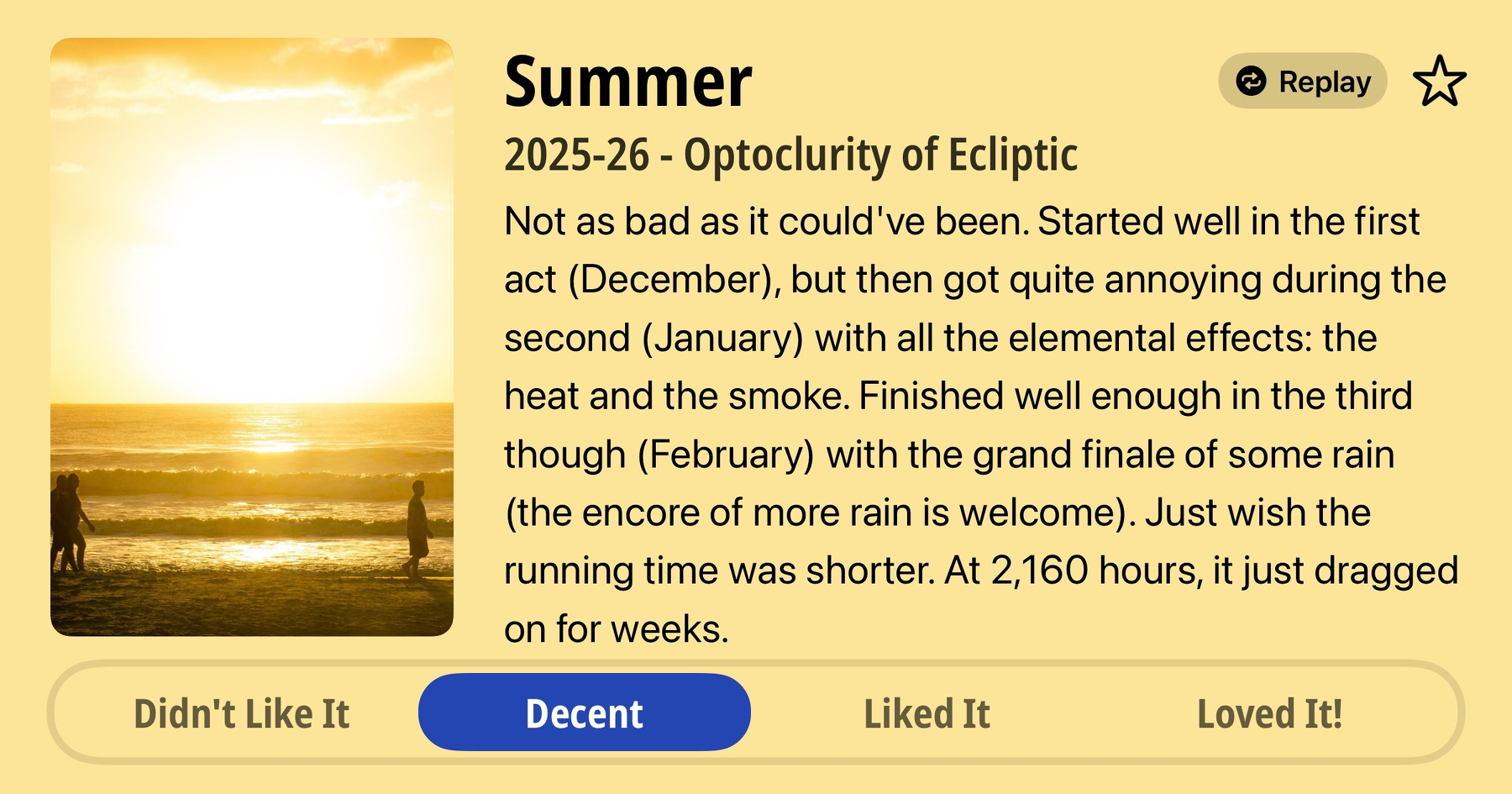

Oof! Glad that’s over.

-

Also saw a flock of pigeons enjoying the morning.

-

Saw an ibis this morning, but not a bin chicken. This is a Straw-necked Ibis.

-

You offer me Parmesan cheese for anything, you’re not getting it back.

(And lo! The floodgates, they open.)

-

It’s funny how, on some days, overthinking what to post results in nothing being written. This is me not overthinking it (with a bit of assistance from alcohol).

-

The Colour of Production

On the colour-coding system used for different development environments.

-

A bit frustrating: I asked my IDE to rename all instances of a method, and it did around half of them, ignoring the interfaces the type implements. I know Go interfaces are different to Java interfaces, but if the type checker already passes, rename things so that it still does.
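Here's a minimal sketch of why a partial rename bites in Go, using hypothetical `Greeter` and `English` types. Go types satisfy interfaces implicitly, so nothing in the source ties `English.Greet` to `Greeter.Greet`; a rename tool has to infer that link from the method sets. The `var _ Greeter = English{}` line is a common Go idiom that turns "does this type still satisfy the interface?" into a compile error.

```go
package main

import "fmt"

// Greeter is a hypothetical interface. Go types satisfy interfaces
// implicitly: nothing in English below mentions Greeter.
type Greeter interface {
	Greet() string
}

// English satisfies Greeter purely because it has a method named Greet
// with a matching signature.
type English struct{}

func (English) Greet() string { return "hello" }

// Compile-time assertion: if Greet is renamed on English but not in
// Greeter (or vice versa), this line stops compiling.
var _ Greeter = English{}

func main() {
	var g Greeter = English{}
	fmt.Println(g.Greet()) // hello
}
```

Renaming only `English.Greet` to, say, `SayHello` leaves `English` without a `Greet` method, so every place that uses an `English` as a `Greeter` fails to type-check. That's exactly the half-finished state described above.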

-

This is green grass. A week ago it was brown grass that hadn’t seen rain in about a month and a half. What a difference a week and two rainy days makes.

-

Been riding the Metro tunnel to work in the morning, but I think I’ll switch back to my old commute. I’ve been touching off after 7 AM, which means I’m losing out on the free travel coming into the city. Noticed this after seeing the top-up alerts come through more frequently.

-

Coding agents may help with coding, but it’s been my experience that the biggest time sink in software is dealing with all the rubbish associated with micro-services. Troubleshooting dozens of services, trying to see why they’re not talking to one another. Ain’t no agent that can fix that.

-

🔗 Pixel Envy: On Software Quality

> I am somewhat impressed by the breadth of Apple’s current offerings as I consider all the ways they are failing me, and I cannot help but wonder if it is that breadth that is contributing to the unreliability of this software. Or perhaps it is the company’s annual treadmill.

I’m almost certain that the devs at Apple are not happy shipping software that doesn’t meet this quality threshold. They’d fix all these issues with Tahoe and iOS 26 if they could (well, the ones that aren’t just bad designs). They just don’t have the time to do so.

Hardware production lines take months to set up, and once they’re ready, it’s expensive to change them. So there’s huge resistance to changing anything near the end of the release cycle. Software’s malleability, in this respect, is a blessing and a curse. It’s so easy to change that those making the decisions don’t see a cost in making changes at the 11th hour. After all, it’s “just” software. But established software products are also resistant to change, and adding more cruft on top just makes change harder. Rather than fix things, devs spend all their time trying to get the bodged code working in concert while meeting deadlines set by those who need features to sell. The result is lower-quality software.

There’s no escaping the “scope, quality, time: pick two” maxim.

-

Will the solution to my problems really be at the end of an infinitely long social media feed?

Well, I don’t know. I haven’t reached the end yet. 😛

-

Missed my tram, but thanks to the horrible traffic caused by the rain, I managed to catch it by walking to the next stop. I managed to lap the tram before it too, before it reached the dedicated track section.

-

🔗 Steve Yegge: You Should Write Blogs

An oldie but a goodie.

> The last big problem I grapple with is biting off too much for a single blog. I find that if I can write a blog in a single sitting, it’ll usually seem worth publishing, at least at the time. […] If I can’t write it in one sitting, I feel like I don’t have something concrete enough to say. […] I can only do that with coding, not writing.

Yeah, I suffer from this too. I need to hit publish soon after writing the first draft, otherwise the resistance to finishing it gets too great.

-

The thing about DNS is that you’ve got one chance to get it right; otherwise you’re SOL for a good 15 to 20 minutes.

-

Almost 3 years to the day after buying it, my roll of cling wrap ran out. It took a lot less time to go through than my last roll, but this one was only 90 metres, so that sort of tracks.

-

Getting software ready for go-live is a bit like landing a plane. Get what you have ready to go early, and once you’re done, just land it. Final approach is not the time to say “hey, what would be cool is if you can get this thing done too.”

-

I’m always at a loss as to how best to represent a small number of items with a large number of properties in an SQL database. Tables feel great when the number of rows is dramatically larger than the number of columns. But when it’s the other way around, when you have one or two rows in a table with 30 columns, it feels strange. As if it’s out of balance.

I do wonder if some of these alternatives could work better:

- Having one DB table contain the items and another containing the item properties. This second table will only have four columns: `id`, `item_id`, `property`, and `value`.
- Storing the properties in a JSON column or similar.

These will probably work well for items with dynamic properties, or when only a subset have values at any one time. They would also be viable if one doesn’t need to select on these properties, as querying this information would be a pain (although doable). Would they also work if the items don’t have dynamic properties? It’d probably reduce the number of migrations involved.
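Here's a rough sketch of what reading the properties back looks like under each alternative. It assumes the rows or the JSON column have already been fetched from the database; the `PropertyRow` type, the helper names and the property names are all hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// PropertyRow mirrors one row of the hypothetical item-properties table
// with the four columns id, item_id, property and value.
type PropertyRow struct {
	ID       int
	ItemID   int
	Property string
	Value    string
}

// PropsFromRows pivots the property rows for one item back into a map,
// which is roughly the work a query over that table has to do.
func PropsFromRows(itemID int, rows []PropertyRow) map[string]string {
	props := make(map[string]string)
	for _, r := range rows {
		if r.ItemID == itemID {
			props[r.Property] = r.Value
		}
	}
	return props
}

// PropsFromJSON decodes the other layout: all of an item's properties
// stored in a single JSON column. Non-string values would fail to decode.
func PropsFromJSON(column []byte) (map[string]string, error) {
	var props map[string]string
	if err := json.Unmarshal(column, &props); err != nil {
		return nil, err
	}
	return props, nil
}

func main() {
	rows := []PropertyRow{
		{1, 1, "title", "My Blog"},
		{2, 1, "timezone", "Australia/Melbourne"},
		{3, 2, "title", "Devlog"},
	}
	fmt.Println(PropsFromRows(1, rows))

	props, err := PropsFromJSON([]byte(`{"title":"My Blog","timezone":"Australia/Melbourne"}`))
	if err != nil {
		panic(err)
	}
	fmt.Println(props)
}
```

Both layouts store every value as a string here, so types and constraints have to be enforced in application code. That's a trade-off the "one column per property" table doesn't have.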

This is probably just a me problem, and I should just write the table “naturally,” with one column per property. It’s not like the database cares.
