-

My online encounters with Steve Yegge’s writing are like one of those myths about someone going on a long journey. They’re travelling alone, but along the way, a mystical spirit guide appears to give the traveller some advice. These apparitions are unexpected, and the traveller can go long spells without seeing them. But occasionally, when they arrive at a new and unfamiliar place, the guide is there, ready to impart some wisdom before disappearing again.¹

Anyway, I found a link to his writing via another post today. I guess he’s writing at Sourcegraph now: I assume he’s working there.

Far be it from me to recommend a site for someone else to build, but if anyone’s interested in registering wheretheheckissteveyeggewritingnow.com and posting links to his current and former blogs, I’d subscribe to that.

-

¹ Or, if you’re a fan of Half-Life, Yegge’s a bit like the G-Man.

-

-

Gotta be honest: the current kettle situation I find myself in, not my cup of tea. 😏

-

Amusing that I find myself in a position where I have to log into one password manager to get the password to log into another password manager to get a password.

-

Does Google ever regret naming Go “Go”? Such a common word to use as a proper noun. I know the language devs prefer not to use Golang, but there’s no denying that it’s easier to search for.

-

The category keyword test is a go.

-

Unless you’re working on 32-bit hardware or dealing with legacy systems, there’s really no need to use 32-bit integers in database schemas or binary formats. There’s ample memory, storage, and bandwidth for 64-bit integers nowadays. So save yourself the “overflow conversion” warnings.

This is where I think Java made the mistake of defaulting to 32-bit integers regardless of the architecture. I mean, I can see why: for a language and VM made in the mid-90s targeting set-top boxes, settling on 32-bit integers made a lot of sense. But even back then, talk of moving to 64-bit was in the air. Nintendo even made it part of the marketing for the Nintendo 64.

-

There’s also this series of videos by the same creator that goes in depth on how the Super Mario Bros. levels are encoded in ROM. This is even more fascinating, as they had very little memory to work with and had to make some significant trade-offs, like not allowing Mario to go back to the left. 📺

-

If anyone’s interested in how levels in Super Mario Bros. 2 are encoded in the ROM, I can recommend this video by Retro Game Mechanics. It goes for about 100 minutes so it’s quite in depth. 📺

-

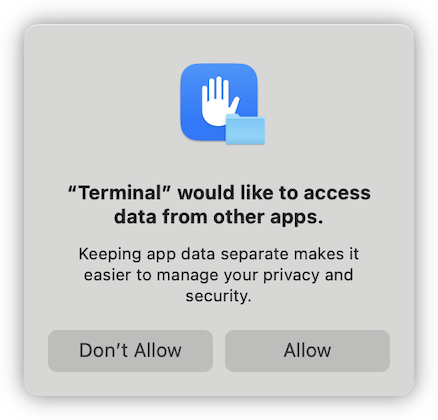

Just blindly accepting permission dialogs whenever MacOS throws them at me, like some bad arcade game. Was this your intention, Apple?

-

Overheard this exchange just now at the cafe:

Customer: How ya’ feeling?

Barista: Feeling cold.

Customer: Well at least that’s something. If ya’ don’t feel the cold it means you’re dead.

It carried a lot more weight for me than I think the customer originally intended.

-

Mother’s Day in full bloom over here. 💐

-

Rubberducking: More On Mocking

Mocking in unit tests can be problematic due to the growing complexity of service methods with multiple dependencies, leading to increased maintenance challenges. But the root cause may not be the mocks themselves. Continue reading →

-

🔗 NY Mag: Rampant AI Cheating Is Ruining Education Alarmingly Fast

Two thoughts on this. The first is that I think these kids are doing a disservice to themselves. I’m not someone who’s going to say “don’t use AI ever,” but the only way I can really understand something is by working through it, either by writing it myself or spending lots of time on it. I find this even in my job: it’s hard for me to know of the existence of some feature in a library I haven’t touched myself, much less how to use it correctly. Offloading your thinking to AI may work when you’re plowing through boring coding tasks, but when it comes to designing something new, or working through a Sev-1, it helps to know the system you’re working on like the back of your hand.

Second thought: TikTok is like some sort of wraith, sucking the lifeblood of all who touch it, and needs to die in fire.

Via: Sharp Tech

-

How and when did “double click” become a phrase meaning to focus on or get into the details of a topic? Just heard it being used on a podcast for the second time in as many weeks.

-

If Apple thinks the recent App Store ruling is taking away its right to monetise its IP, then Apple needs to explain what the US$99 developer fee is for. They’re probably shooting their videos for WWDC right now. Maybe have one going through each line item of a theoretical “developer fee invoice”, explaining what each fee is for and what IP rights it covers.

-

When hiring a senior software engineer, it’s probably less useful to know whether they could code up a sorting algorithm versus knowing whether they can work out what time it is in UTC in their head. 😀

-

Ooh, I lost my temper when I was under the pump and MacOS decided to stop everything and throw up this modal, asking if I permit Terminal to access files from other apps.

Of course I do, Apple! I’m not using the terminal to amuse myself. I’m trying to get shit done, and your insistent whining is getting in my way! 😡

-

Seeing these river tour boats moored like this reminds me of the UK, and all the narrowboats in the canals.

-

📘 Devlog

Blogging Tools — Ideas For Stills For A Podcast Clips Feature

I recently discovered that Pocketcasts for Android have changed their clip feature. It still exists, but instead of producing a video which you could share on the socials, it produces a link to play the clip from the Pocketcasts web player. Understandable to some degree: it always took a little bit of time to make these videos. But hardly a suitable solution for sharing clips of private podcasts: one could just listen to the entire episode from the site. Continue reading →

-

Ah, CSV files. They’re a little painful to work with, but they truly are the unsung heroes of ad-hoc scripts written in haste.

-

Listening to all the recent platform talk on Stratechery has been fascinating, if a little melancholy. We may never see another mainstream platform be as truly open as Windows, MacOS, and the Web are right now.

-

Whatever happened to Power Nap on MacOS? Is that still a thing? I’ve been asked when I’d like to install updates for about a week now, yet despite choosing “later tonight” every time, nothing’s happened. I could install them now, but I thought deferring them to a time when I’m not using my computer was the point of this feature.

-

Argh! Someone has discovered my secret of where the best place to stand on an A-class tram is (it’s at the back, beside the back door).

-

It’s easy to get an irrational sense of how scalable a particular database technology can be. Take my experience with PostgreSQL. I use it all the time, but I have it in my head that it shouldn’t be used for “large” amounts of data. I get a little nervous when a table goes beyond 100,000 rows, for example.

But just today, I discovered a table that had 47 million rows of time-series data, and PostgreSQL seems to handle this table just fine. There are a few caveats: it’s a database with significant resources backing it, it only sees a few commits per second, and the queries that are not optimised for that table’s indices can take a few seconds. But PostgreSQL seems far from being under load. CPU usage is low (around 3%), and the disk queue depth is zero.

I guess my point is that PostgreSQL continues to be awesome, and I really shouldn’t underestimate how well it can handle what we throw at it.

-

Ah! I think I know why I keep asking for a bagel with lettuce, tomato, and cheese instead of ham, tomato, and cheese. It’s because I always said “lettuce, tomato, and cheese” when ordering sandwiches back when I was working in the CBD. Wow, talk about old habits dying hard.