A Tour Of My New Self-Hosted Code Setup

While working on the draft for this post, a quote from Seinfeld came to mind, which I thought was quite an apt description of this little project:

Breaking up is knocking over a Coke machine. You can’t do it in one push. You gotta rock it back and forth a few times and then it goes over.

I’ve been thinking about “breaking up” with GitHub on and off for a while now. I know I’m not the only one: I’ve seen a few people online talk about leaving GitHub too. They have their own reasons for doing so: some because of AI, others because they’re just not fans of Microsoft. For me, it was getting bitten by the indie-web bug and wanting to host my code on my own domain name. I have more than a hundred repositories on GitHub, and that single github.com/lmika namespace was getting quite crowded. Being able to organise all these repositories into groups, without fear of collisions or setting up new accounts, was the dream.

But much like the Seinfeld quote, it took a few rocks of that fabled Coke machine to get going. I dipped my toe in the water a few times: launching Gitea instances in PikaPod, and also spinning up a GitLab instance in Linode during a hackathon just to see how it would feel to manage code that way. I knew it wouldn’t be easy: not only would I be paying more for doing this, it would involve a lot of effort up front (and on an ongoing basis), and I would be taking on the responsibility of backups, keeping CI/CD workers running, and making sure everything is secured and up-to-date. Not difficult work, but still an ongoing commitment.

Well, if I was going to do this at all, it was time to do it for real. I decided to set up my own code hosting properly this time, complete with CI/CD runners, all hosted under my own domain name. And well, that Coke machine is finally on the floor. I’m striking out on my own.

Let me give you a tour of what I have so far.

Infrastructure

My goal was to have a setup with the following properties:

- A self-hosted SCM (source code management) system that can be bound to my own domain name.

- A place to store Git repositories and LFS objects that can be scaled up as my needs for storage grow.

- A CI/CD runner of some sort that can be used for automated builds, ideally something that supports Linux and MacOS.

For the SCM, I settled on Forgejo, which is a fork of Gitea, as it seemed like the one that required the least amount of resources to run. When I briefly looked at doing this a while back, Forgejo didn’t have anything resembling GitHub Actions, which was a non-starter for me. But Forgejo Actions now exist, at an alpha, preview, don’t-use-it-for-anything-resembling-production level of support, and I was curious to know how well they worked, so it was worth trying them out.

I did briefly look at Gitea’s hosted solution, but it was relatively new and I wasn’t sure how long their operations would last. At least with self-hosting, I can choose to exit on my own terms.

It was difficult thinking about how much I was willing to budget for this, considering that it’ll be more than what I’m currently paying for GitHub, which is about $9 USD /month ($13.34 AUD /month). I settled for a budget of around $20.00 AUD /month, which is a bit much, but I think would give me something that I’d be happy with without breaking the bank.

I first had a go at seeing what Linode had to offer for that kind of money. A single virtual CPU, with 2 GB RAM and 50 GB storage costs around $12.00 USD /month ($17.79 AUD /month). This would be fine if it was just the SCM, but I also want something to run CI/CD jobs. So I then took a look at Hetzner. Not only do they charge in euros, which works in my favour as far as currency conversions go, but their shared-CPU virtual servers were much cheaper. A server with the same specs could be had for only a few euros.

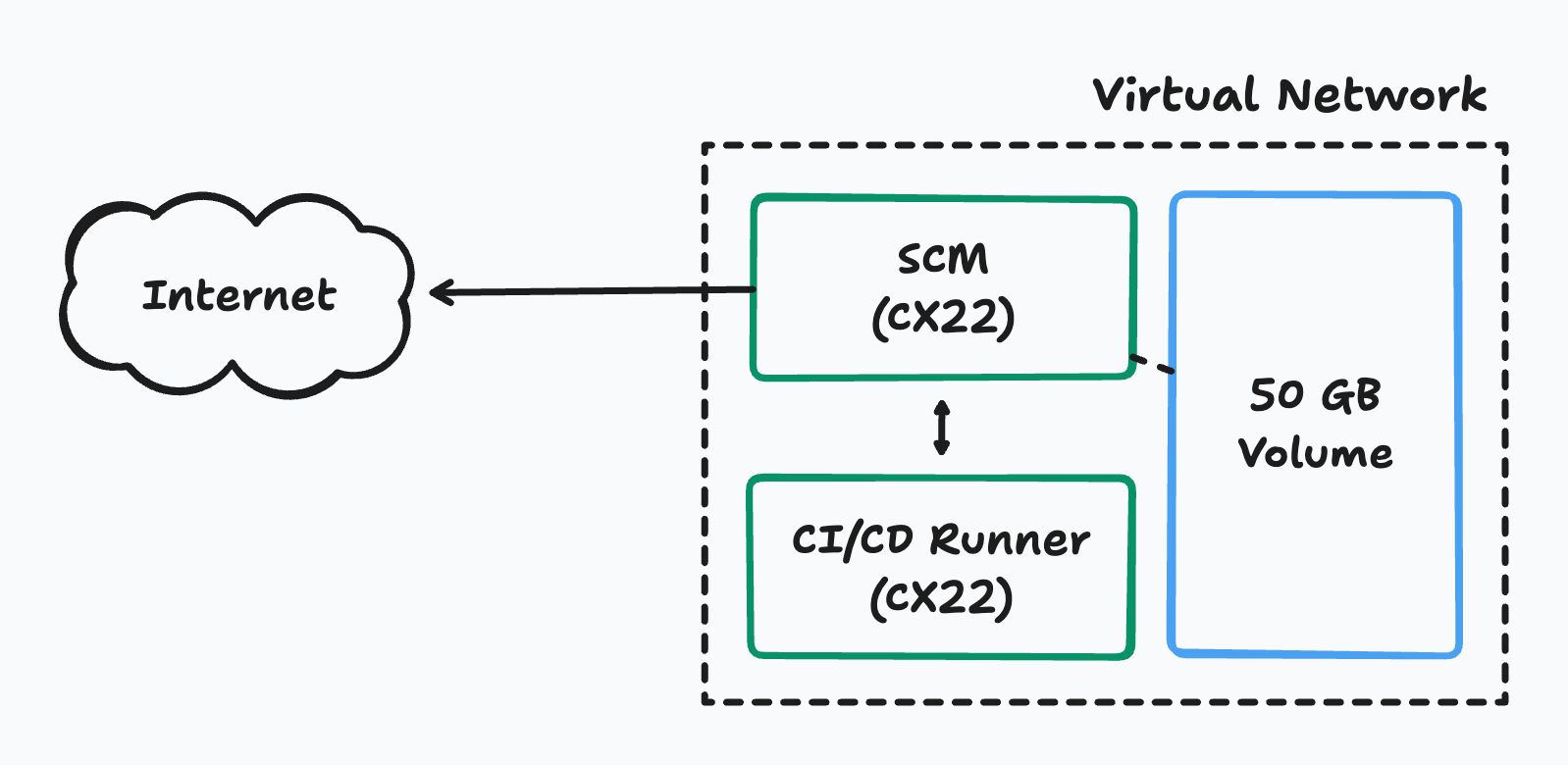

So after a bit of looking around, I settled for the following bill of materials:

- 2x CX22 instances, each with 2 vCPUs, 4 GB RAM and 40 GB storage

- A virtual network which houses these two instances

- One public IP address

- One 50 GB volume which can be resized

This came to €10.21, which was around $16.38 AUD /month. Better infrastructure for a cheaper price is great in my books. The only downside is that they don’t have a data-centre presence in Australia. I settled for the default placement of Falkenstein, Germany and just hoped that the latency wasn’t so high as to be annoying.

Installing Forgejo

The next step was setting up Forgejo. This can be done using official channels by either downloading a binary, or by installing a Docker image. But there’s also a forgejo-contrib repository that distributes it via common package types, with Systemd configurations that launch Forgejo on startup. Since I was using Ubuntu, I downloaded and installed the Debian package.

Probably the easiest way to get started with Forgejo is to use the version that comes with SQLite, but since this is something that I’d rather keep for a while, I elected to use Postgres for my database. I installed the latest Ubuntu distribution of Postgres, and set up the database as per the instructions. I also made sure the mount point for the volume was ready, and created a new directory with the necessary owner and permissions so that Forgejo can write to it.

At this point I was able to launch Forgejo and go through the first launch experience. This is where I configured the database connection details, and set the location of the repository and LFS data (I didn’t take a screenshot at the time, sorry). Once that was done, I shut the server down again as I needed to make some changes within the config file itself:

- I turned off the ability for others to register themselves as users, an important first step.

- I changed the bind address of Forgejo. It listens to 0.0.0.0:3000 by default, but I wanted to put this behind a reverse proxy, so I changed it to 127.0.0.1:3000.

- I also reduced the minimum size of SSH RSA keys. The default was 3,072, but I still have keys of length 2,048 that I wanted to use. There was also an option to turn off this verification.
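For reference, the three changes above map to settings along these lines. This is a sketch rather than a copy of my actual config: the section and key names are as I understand them from the configuration cheat sheet, so check them against the version you’re running, and the scratch filename is made up for this example.

```shell
# Write the settings to a scratch file (hypothetical name) so they can be
# reviewed and merged by hand into Forgejo's real app.ini.
cat > forgejo-settings.ini <<'EOF'
[service]
; Stop visitors from registering their own accounts.
DISABLE_REGISTRATION = true

[server]
; Only listen on loopback; the reverse proxy handles public traffic.
HTTP_ADDR = 127.0.0.1
HTTP_PORT = 3000

[ssh.minimum_key_sizes]
; Accept 2,048-bit RSA keys. The alternative is to turn the check off
; entirely with MINIMUM_KEY_SIZE_CHECK = false under [ssh].
RSA = 2048
EOF
```

Forgejo needs a restart after app.ini changes. If a 2,048-bit key is still rejected, it may be worth trying 2047, as I believe some keys get reported one bit short.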

After that, it was a matter of setting up the reverse proxy. I decided to use Caddy for this, as it comes with HTTPS out of the box. I installed this as a Debian package too. Configuring the reverse proxy by changing the Caddyfile deployed in /etc was a breeze and after making the changes and starting Caddy, I was able to access Forgejo via the domain I set up.
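A minimal Caddyfile for this kind of setup looks something like the following. The domain is a placeholder, not my real one, and I’m writing the file to the current directory here so it can be looked over first; on a Debian package install I’d expect it to end up at /etc/caddy/Caddyfile.

```shell
# Write a minimal Caddyfile to the current directory for review.
cat > Caddyfile <<'EOF'
# code.example.com is a placeholder for the real domain.
code.example.com {
    # Forward everything to Forgejo listening on loopback.
    reverse_proxy 127.0.0.1:3000
}
EOF
```

Caddy takes care of obtaining and renewing the certificate for the named domain, provided DNS already points at the host and ports 80 and 443 are reachable.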

One quick note about performance: although logging in via SSH was a little slow, I had no issues with the speed of accessing Forgejo via the browser.

The Runners

The next job was setting up the runners. I thought this was going to be easier than setting up Forgejo itself, but I did run into a few snags which slowed me down.

The first was finding out that a Hetzner VM running without a public IP address actually doesn’t have any route to the internet, only the local network. The way to fix this is to set up one of the hosts which does have a public IP address to act as a NAT gateway. Hetzner has instructions on how to do this, and after performing a hybrid approach of following both the Ubuntu 20.04 instructions and Ubuntu 22.04 instructions, I was able to get the runner host online via the Forgejo host. Kinda wish I knew about this before I started.
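The gist of the NAT setup, as best I can reconstruct it, is below. Treat it as a sketch: the network range, gateway address and interface name are assumed values rather than the ones from my hosts, and the function names are made up for this example. Running the block only defines the functions; each one then needs to be called as root on the relevant host.

```shell
# Assumed values, not taken from my setup.
PRIVATE_NET="10.0.0.0/16"   # range of the private network
PUBLIC_IF="eth0"            # public interface on the gateway host
NETWORK_GW="10.0.0.1"       # gateway address of the private network

# Run as root on the host WITH the public IP (the Forgejo host):
# turn on IP forwarding and masquerade traffic from the private network.
enable_nat_gateway() {
    sysctl -w net.ipv4.ip_forward=1
    iptables -t nat -A POSTROUTING -s "$PRIVATE_NET" -o "$PUBLIC_IF" -j MASQUERADE
}

# Run as root on the host WITHOUT a public IP (the runner host):
# send all non-local traffic via the private network's gateway.
use_nat_gateway() {
    ip route add default via "$NETWORK_GW"
}
```

Neither change survives a reboot on its own, so they need to be made persistent, which is where the Ubuntu-version-specific parts of the instructions come in. From memory, the private network also needs a route for 0.0.0.0/0 pointing at the gateway host’s private IP, which is added on the Hetzner side.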

For the runners, I elected to go with the Docker-based setup. Forgejo has pretty straightforward instructions for setting them up using Docker Compose, and I changed it a bit so that I could have two runners running on the same host.

Setting up the runners took multiple attempts. The first attempts failed when Forgejo couldn’t locate any runners for an organisation to use. I’m not entirely sure why this was, as the runners were active and were properly registered with the Forgejo instance. It could be magical thinking, but my guess is that it was because I didn’t register the runners with an instance URL that ended with a slash. It seems like it’s possible to register runners that are only available to certain organisations or users. Might be that there’s some bit of code deep within Forgejo that’s expecting a slash to make the runners available to everyone? Not sure. In either case, after registering the runners with the trailing slash, the organisations started to recognise them.

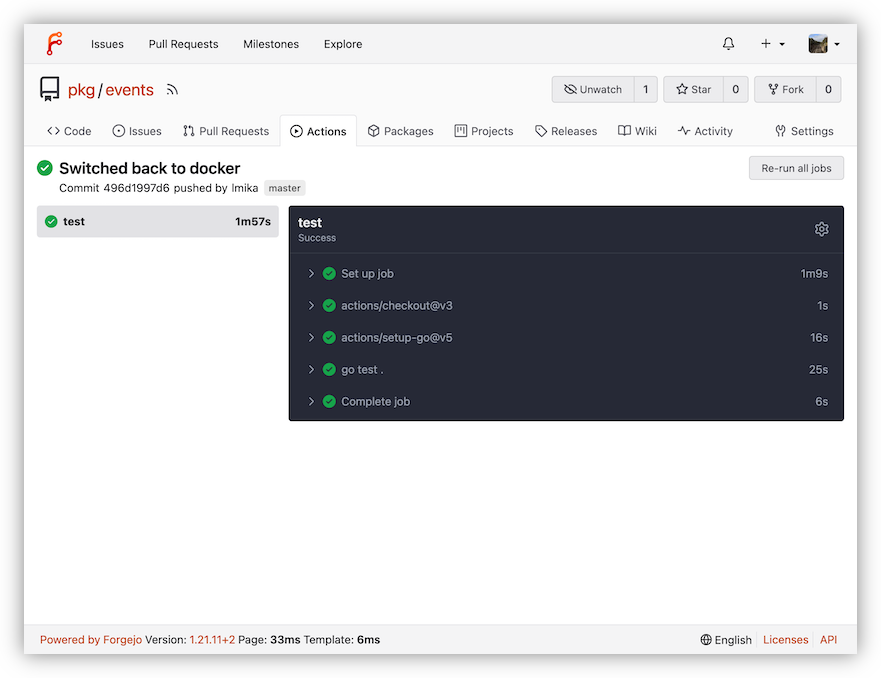
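To avoid repeating the trailing slash mistake, the registration can be wrapped in something like this. It’s only a sketch: the URL, token, runner name and label are placeholders, the helper function is made up for this example, and the flag names are as I remember them, so check them against forgejo-runner register --help first. The command is printed rather than run, so it can be inspected before use.

```shell
# Append a trailing slash to a URL unless it already has one.
with_trailing_slash() {
    case "$1" in
        */) printf '%s\n' "$1" ;;
        *)  printf '%s/\n' "$1" ;;
    esac
}

# Placeholder values; the token comes from Forgejo's runner settings page.
INSTANCE_URL="$(with_trailing_slash "https://code.example.com")"
RUNNER_TOKEN="REPLACE_ME"

# Print the registration command instead of running it.
echo forgejo-runner register --no-interactive \
    --instance "$INSTANCE_URL" \
    --token "$RUNNER_TOKEN" \
    --name "runner-1" \
    --labels "docker:docker://node:20-bookworm"
```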

The other error was seeing runs fail with the error message cannot find: node in PATH. This resolved itself after I changed the runs-on label within the action YAML file itself from linux to docker. I wasn’t expecting this to be an issue: I thought the runs-on field was used to select a runner based on their published labels, and that docker was just one such label. The Forgejo documentation was not super clear on this, but I got the sense that the docker label was special in some way. I don’t know. But whatever, I can use docker in my workflows.
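For illustration, a workflow along these lines is the sort of thing that now works for me. It’s a sketch, not a copy of my real workflow: the filename and steps are assumptions. My best guess at the cause of the error, and it is only a guess, is that a label can carry a container image (something like docker:docker://node:20-bookworm), so jobs selecting docker run in a container that already has Node, which JavaScript-based actions such as checkout need, whereas a bare label with no image runs directly on the host.

```shell
# Create a workflow in the repository's working directory.
mkdir -p .forgejo/workflows
cat > .forgejo/workflows/test.yaml <<'EOF'
on: [push]

jobs:
  test:
    # Must match a label the runner was registered with.
    runs-on: docker
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-go@v5
        with:
          go-version: stable
      - run: go test ./...
EOF
```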

Once these battles were won, the runners were ready, and I was able to build and test a Go package successfully. One last annoying thing is that Forgejo doesn’t enable runners by default for new repositories — I guess because they’re still considered an alpha release. I can live with that in the short term, or maybe there’s some configuration I can enable to always have them turned on. But in either case, I’ve now got two Linux runners working.

MacOS Runner And Repository Backup

The last piece of the puzzle was to set up a MacOS runner. This is for the occasional MacOS application I’d like to build, but it’s also to run the nightly repository backups. For this, I’m using a Mac Mini currently being used as a home server. This has an external hard drive connected, with online backups enabled, which makes it a perfect target for a local backup of Forgejo and all the repository data should the worst come to pass.

Forgejo doesn’t have an official release of a MacOS runner, but Gitea does, and I managed to download a MacOS build of act_runner and deploy it onto the Mac Mini. Registration and performing a quick test with the runner running in the foreground went smoothly. I then went through the process of setting it up as a MacOS launch agent. This was a pain, and it took me a couple of hours to get this working. I won’t go through every issue I encountered, mainly because I can’t remember half of them, but here’s a small breakdown of the big ones:

- I was unable to register the launch agent definition within the user domain. I had to use the gui domain instead, which requires the user to be logged in. I’ve got the Mac Mini set up to log in on startup, so this isn’t a huge issue, but it’s not quite what I was hoping for.

- Half the commands in launchctl are deprecated and not fully working. Apple’s documentation on the command is sparse, and many of the Stack Exchange answers are old. So a lot of effort was spent fumbling through unfinished and outdated documentation trying to install and enable the launch service.

- The actual runner is launched using a shell script, but when I tried the backup job, Bash couldn’t access the external drive. I had to explicitly add Bash to Privacy & Security → Full Disk Access within the Settings app.

- Once I finally got the runner up and running as a launch agent, jobs were failing because .bash_profile wasn’t being loaded. I had to adjust the launch script to include this explicitly, so that the PATH to Node and Go were set properly.

- This was further exacerbated by two runners running at the same time. The foreground runner I was using to test with was configured correctly, while the one running as a launch agent wasn’t fully working yet. This manifested as the back-up job randomly failing with the same cannot find: node in PATH error half the time.
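To give an idea of where the launch script ended up, here’s a cut-down sketch. It isn’t my actual script: the directory layout (the act_runner binary and its registration file sitting one level above the script) is an assumption for this example. The block writes the script out and marks it executable rather than starting a runner.

```shell
# Write the launch script to the current directory.
cat > run-runner.sh <<'EOF'
#!/bin/bash
# launchd starts this with a bare environment, so load the login profile
# explicitly to get Node and Go onto the PATH.
if [ -f "$HOME/.bash_profile" ]; then
    source "$HOME/.bash_profile"
fi

# Assumed layout: act_runner and its registration file live one
# directory above this script.
cd "$(dirname "$0")/.." || exit 1

# Replace the shell with the runner so launchd tracks the right process.
exec ./act_runner daemon
EOF
chmod +x run-runner.sh
```

Using exec means the process launchd supervises is the runner itself rather than a wrapper shell, which should make KeepAlive restarts behave as expected.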

It took me most of Saturday morning, but in the end I managed to get this MacOS runner working properly. I’ve not done anything MacOS-specific yet, so I suspect I may have some Xcode-related stuff to do, but the backup job is running now and I can see it write stuff to the external hard drive.

The backup routine itself is a simple Go application that’s kicked off daily by a scheduled Forgejo Action (it’s not in the documentation yet, but the version of Forgejo I deployed does support scheduled actions). It makes a backup of the Forgejo instance, the PostgreSQL database, and all the repository data using SSH and Rsync.
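The scheduled trigger uses the same cron syntax as GitHub Actions. A stripped-down sketch of such a workflow is below; the schedule, the macos label and the go run entry point are all placeholders for this example rather than what’s in my repository.

```shell
# Create a scheduled workflow in the repository's working directory.
mkdir -p .forgejo/workflows
cat > .forgejo/workflows/backup.yaml <<'EOF'
on:
  schedule:
    # Every day at 16:00 UTC (an arbitrary time for this example).
    - cron: '0 16 * * *'

jobs:
  backup:
    # Hypothetical label for the Mac Mini runner.
    runs-on: macos
    steps:
      - uses: actions/checkout@v4
      # Hypothetical entry point for the backup tool.
      - run: go run ./cmd/backup
EOF
```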

I won’t share these repositories as they contain references to paths and such that I consider sensitive; but if you’re curious about what I’m using for the launch agent settings, here’s the plist file I’ve made:

<?xml version="1.0" encoding="UTF-8"?>
<!-- dev.lmika.repo-admin.macos-runner.plist -->
<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
<plist version="1.0">
<dict>
    <key>Label</key>
    <string>dev.lmika.repo-admin.macos-runner</string>
    <key>ProgramArguments</key>
    <array>
        <string>/Users/lmika/opt/macos-runner/scripts/run-runner.sh</string>
    </array>
    <key>KeepAlive</key>
    <true/>
    <key>RunAtLoad</key>
    <true/>
    <key>StandardErrorPath</key>
    <string>/tmp/runner-logs.err</string>
    <key>StandardOutPath</key>
    <string>/tmp/runner-logs.out</string>
</dict>
</plist>

This is deployed by copying it to $HOME/Library/LaunchAgents/dev.lmika.repo-admin.macos-runner.plist, and then installed and enabled by running these commands:

launchctl bootstrap gui/$UID "$HOME/Library/LaunchAgents/dev.lmika.repo-admin.macos-runner.plist"
launchctl kickstart gui/$UID/dev.lmika.repo-admin.macos-runner

The Price Of A Name

One might see this endeavour, when viewed from a pure numbers and effort perspective, as a bit of a crazy thing to do. Saying “no” to all this cheap code hosting, complete with the backing of a large corporation, just for the sake of a name? I can’t deny that this may seem a little unusual, even a little crazy. After all, it’s more work and more money. And I’m not going to suggest that others follow me into this realm of a self-hosted SCM.

But I think my code deserves its own name now. After all, my code is my work; and much like we encourage writers to write under their own domain name, or for artists and photographers to move away from the likes of Instagram and other such services, so too should my work be under a name I own and control. The code I write may not be much, but it is my own.

Of course, I’m not going to end this without my usual “we’ll see how we go” hedge against myself. I can only hope I’ve got enough safeguards in place to save me from my own decisions, or to easily move back to a hosted service, when things go wrong or when it all becomes just a bit much. More on that in the future, I’m sure.