Long Form Posts

Or not. I mean, I’m not expecting you to notice. You’ve got lives of your own, after all.

Technically it’s not a breaking change, and they will maintain backwards compatibility, at least for a while. But just humour me here.

- Doesn’t know what feeds you’ve subscribed to,

- Doesn’t know what episode you’re listening to, and

- Doesn’t know where in the episode you are.

- Feeds had to be predefined: While it’s possible to create a clip from an arbitrary feed, it’s a bit involved, and the path of least resistance is to set up the feeds you want to clip ahead of time. This works for me as I only have a handful of feeds I tend to make clips from.

- Prioritise recent episodes: The clips I tend to make come from podcasts that touch on current events, so any episode listings should prioritise the more recent ones. The episode list is in the same order as the feed, which is not strictly chronological, but fortunately the shows I subscribe to list episodes in reverse chronological order.

- Easy coarse and fine positioning of clips: Coarse positioning means going straight to a particular point in the episode by entering the timestamp. This is mainly to keep the implementation simple, but I’ve always found trying to position the clip range on a visual representation of a waveform frustrating. It was always such a pain trying to make fine adjustments to where the clip should end. So I kept fine positioning simple: you can advance the start time and duration in single-second increments by tapping a button.

The annoying thing about this library is that it doesn’t use Go’s standard Color type, nor does it describe the limits of each component. So for anyone using this library: R, G, and B range from 0 to 255, and A ranges from 0 to 1.

I.S. Know: An Online Quiz About ISO and RFC Standards

An online quiz called “I.S. Know” was created for software developers to test their knowledge of ISO and RFC standards in a fun way.

Brief Look At Microsoft's New CLI Text Editor

Kicking the tyres on Edit, Microsoft’s new text editor for the CLI.

On MacOS Permissions Again

Some dissenting thoughts about John Gruber and Ben Thompson’s discussion of Mac permissions and the iPad on the latest Dithering.

Gallery: Day Trip to Yass

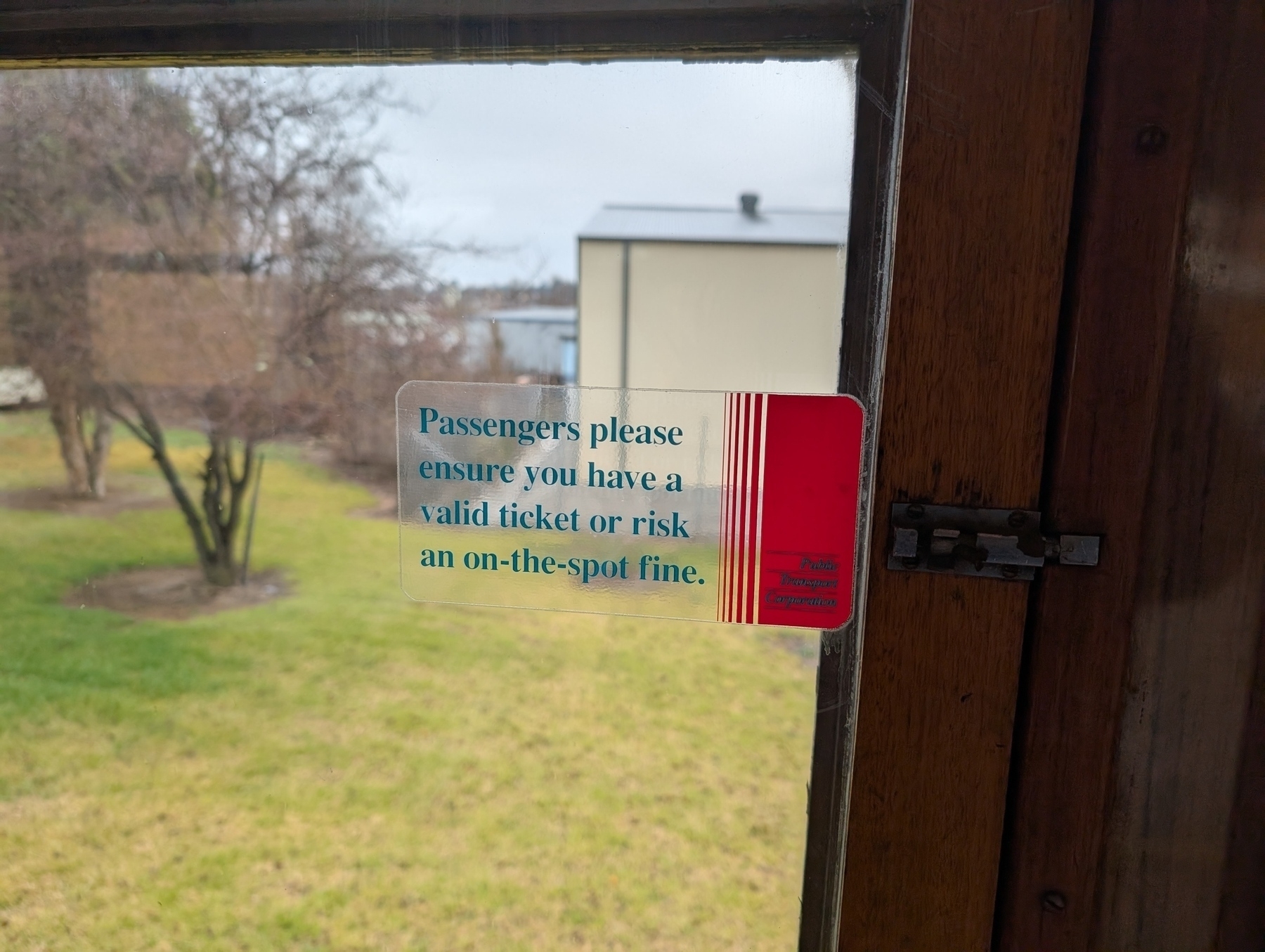

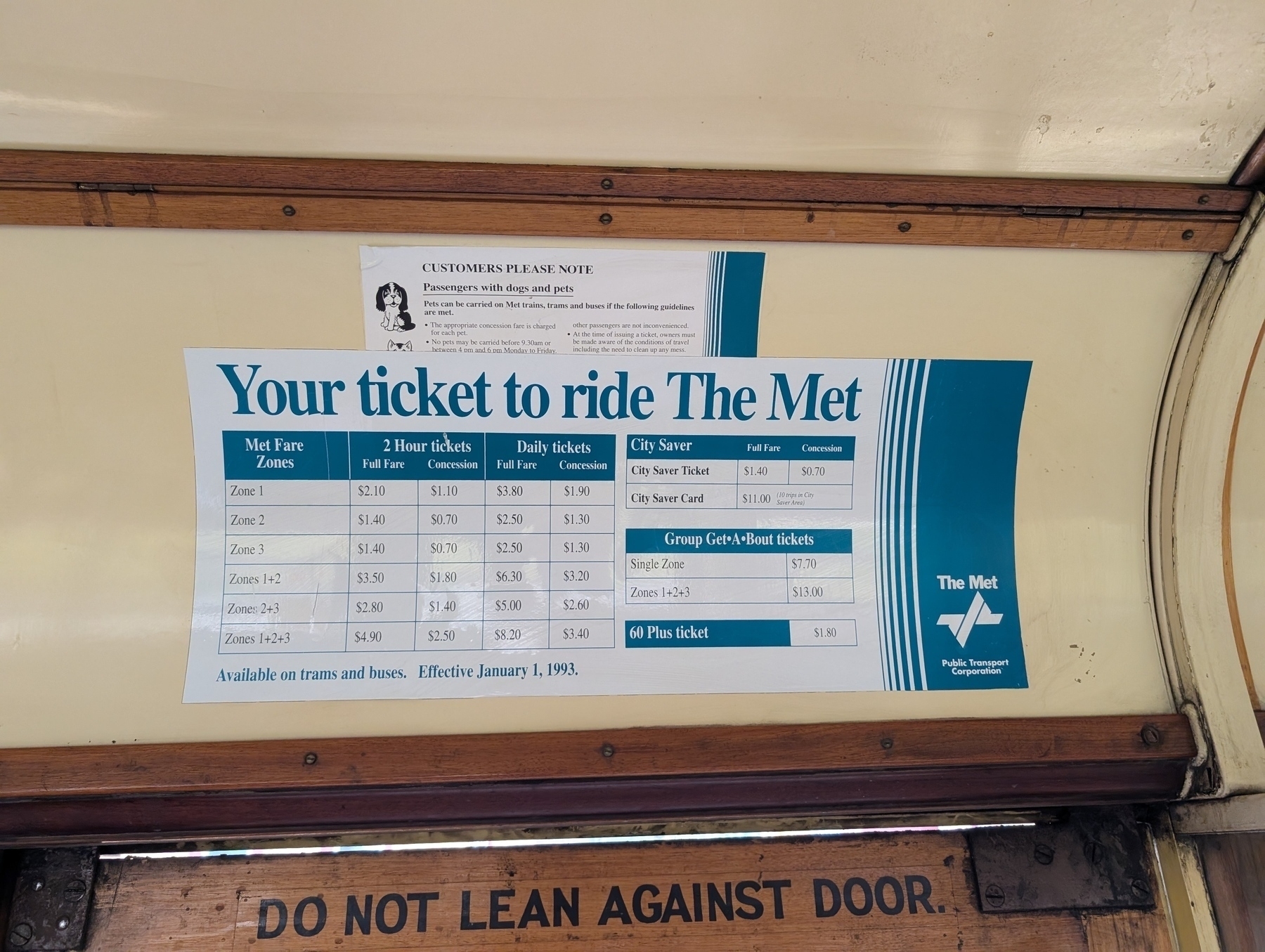

Decided to go to Yass today, a small town in NSW just north of where I’m staying in Canberra. I pass by Yass every time I drive to Canberra from Melbourne, and I wanted to see what it was like, at least once. And this morning I discovered that it had a railway museum, which sealed the deal. Unfortunately the weather was not kind: it was bitterly cold and rainy the whole time I was there.

First stop was the Railway Museum, which featured quite a few bits of rolling stock that operated on the Yass tramway. Yes, Yass had a tramway which, given the size of the town, I was not expecting.

This was followed by lunch at a local cafe, then a brief walk by the Yass River, which had an old tram bridge. I managed to walk around 500 metres from the bridge to the footy oval. It would’ve been nice to walk a bit longer, but it was cold, wet, and the ground was quite slippery. Plus I had to get home to spend some time with the birds. But I did make a brief diversion to Yass Junction railway station to see if there were any trains passing through (unfortunately, there were none).

Devlog: UCL — More About The Set Operator

I made a decision around the set operator in UCL this morning.

When I added the set operator, I made it such that when setting variables, you had to include the leading dollar sign:

```
$a = 123
```

The reason for this was that the set operator was also to be used for setting pseudo-variables, which had a different prefix character.

```
@ans = "this"
```

I needed the user to include the @ prefix to distinguish the two, and since one variable type required a prefix, it made sense to require it for the other.

I’ve been trying this for a while, and I’ve decided I don’t like it. It felt strange to me. It shouldn’t, really, as it’s similar to how variable assignments work in Go’s templating language, which I consider an inspiration for UCL. On the other hand, TCL and Bash scripts, which are also inspirations, require the variable name to be written without the leading dollar sign in assignments. Heck, UCL itself still has constructs where a variable is named without a leading dollar sign, such as block inputs. And I had no interest in changing that:

```
proc foo { |x|
    echo $x
}

for [1 2 3] { |v| foo $v }
```

So I made the decision to remove the need for the dollar sign prefix in the set operator. Now, when setting a variable, only the variable name can be used:

```
msg = "Hello"
echo $msg
```

In fact, if one were to use the leading dollar sign, the program would fail with an error.
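To make the new rule concrete, here is a minimal Go sketch of how an evaluator might classify the left-hand side of an assignment. It is not UCL’s actual implementation: classifyTarget, TargetKind, and ErrDollarTarget are hypothetical names, and the sketch assumes the target arrives as plain text rather than as a parsed syntax node.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// TargetKind says what the left-hand side of an assignment refers to.
type TargetKind int

const (
	Variable       TargetKind = iota // bare name, e.g. msg = "Hello"
	PseudoVariable                   // @-prefixed name, e.g. @ans = 123
)

// ErrDollarTarget is returned when the target keeps the old $ prefix.
var ErrDollarTarget = errors.New("assignment target must not start with '$'")

// classifyTarget is a hypothetical helper: it reports what kind of name is
// being set and returns that name with any prefix stripped.
func classifyTarget(lhs string) (TargetKind, string, error) {
	switch {
	case strings.HasPrefix(lhs, "$"):
		return 0, "", ErrDollarTarget
	case strings.HasPrefix(lhs, "@") && len(lhs) > 1:
		return PseudoVariable, lhs[1:], nil
	case lhs != "" && !strings.HasPrefix(lhs, "@"):
		return Variable, lhs, nil
	default:
		return 0, "", fmt.Errorf("invalid assignment target %q", lhs)
	}
}

func main() {
	for _, lhs := range []string{"msg", "@ans", "$a"} {
		kind, name, err := classifyTarget(lhs)
		if err != nil {
			fmt.Printf("%-5s -> error: %v\n", lhs, err)
			continue
		}
		fmt.Printf("%-5s -> kind=%d name=%s\n", lhs, kind, name)
	}
}
```

Rejecting the `$` form outright, rather than silently stripping it, mirrors the decision to fail with an error instead of accepting both spellings.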

This does have some tradeoffs. The first is that I still need to use the @ prefix for setting pseudo-variables, so the two no longer look alike in assignments:

```
@ans = 123
bla = 234
```

The second is that this breaks the symmetry between how a sub-index looks when reading it, versus how it looks when it’s being modified:

```
a = [1 2 3]
a.(1) = 4
$a
--> [1 4 3]
$a.(1)
--> 4
```

(One could argue that the dollar sign prefix makes sense here as the evaluator is dereferencing the list in order to modify the specific index. That’s a good argument, but it feels a little bit too esoteric to justify the confusion it would add).

This sucks, but I think they’re tradeoffs worth making. UCL is more of a command language than a templating language, so when asked to imagine similar languages, I like to think one will respond with TCL or shell-scripts, rather than Go templates.

And honestly, I think I just prefer it this way. I feel that I’m more likely to set regular variables than pseudo-variables and indices. So why not go with the approach that feels nicer for the case I’m likely to encounter more often?

Finally, I did try supporting both prefixed and non-prefixed variables in the set operator, but this just felt like I was shying away from making a decision. So it wasn’t long before I scrapped that.

Some Morning AI Thoughts

Some contrasting views on the role of AI in creation, highlighting the importance of human creativity and quality over speed and cost-cutting in technological advancements.

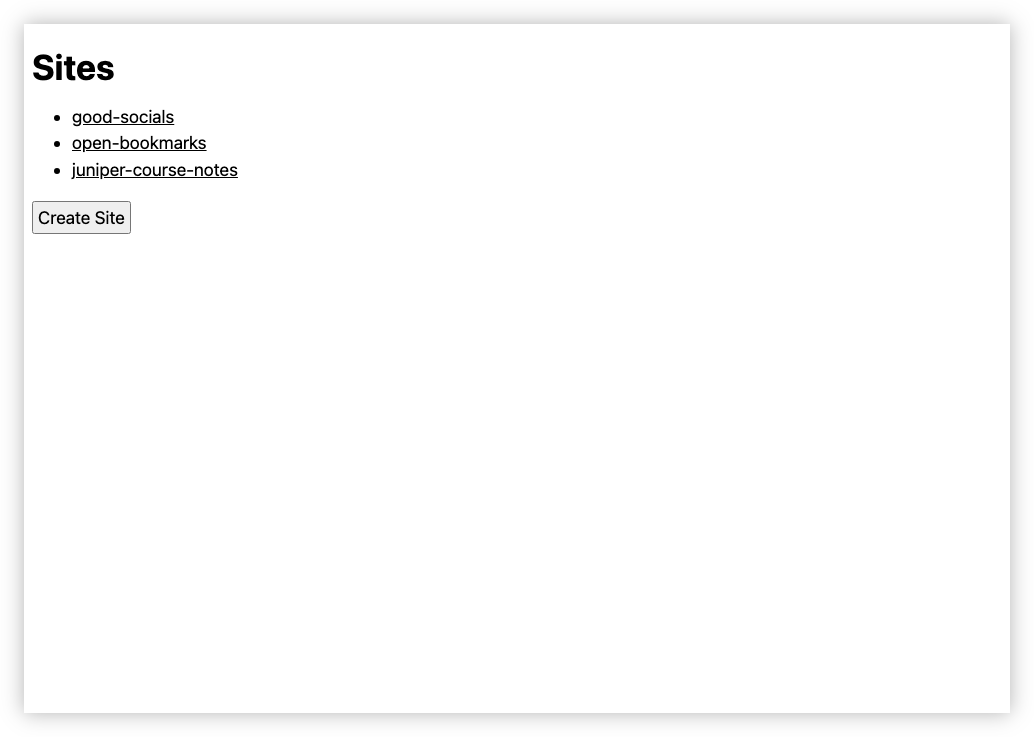

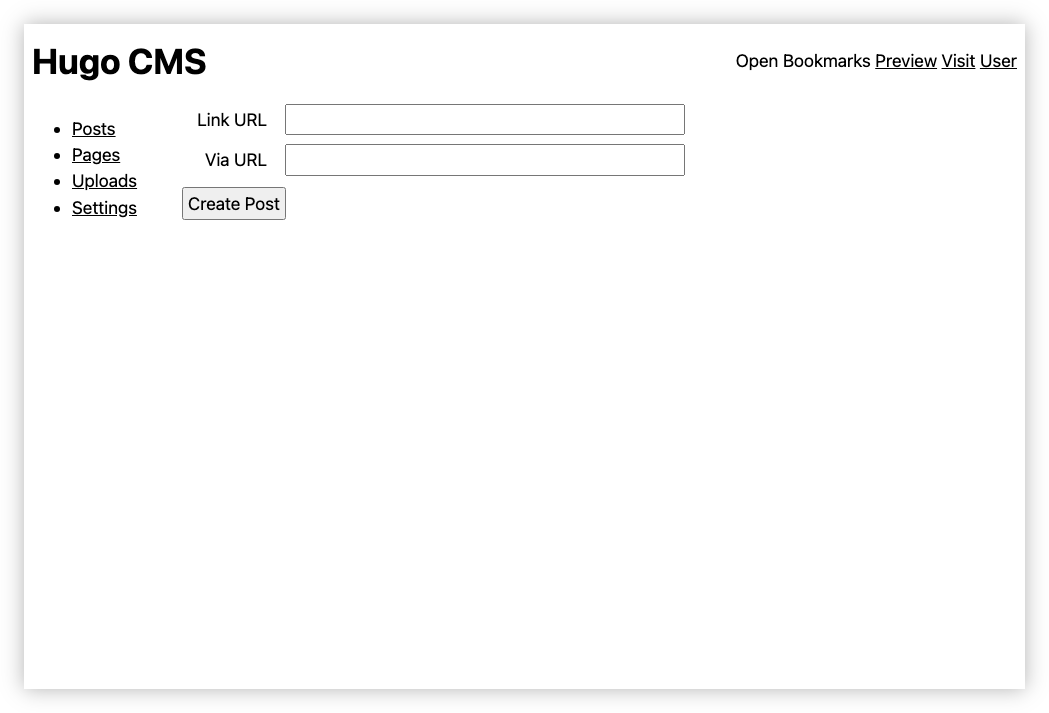

That Which Didn't Make the Cut: a Hugo CMS

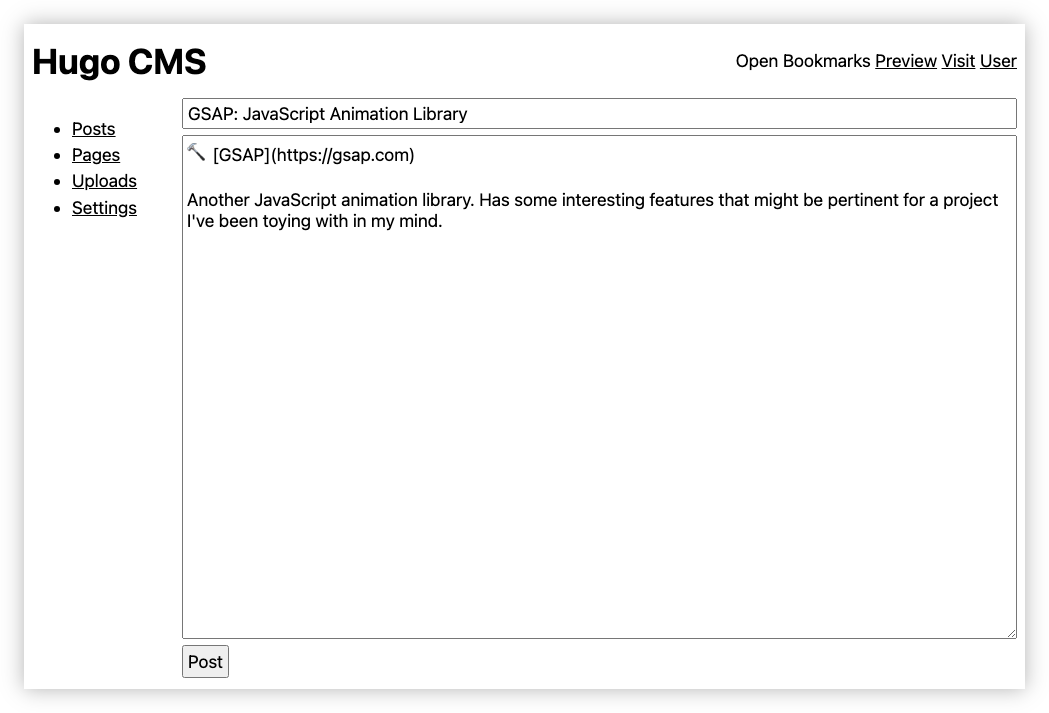

You’ve probably noticed¹ that I’ve stopped posting links to Open Bookmarks, and have started posting them here again. The main reason is that I’ve abandoned work on the CMS that powered that bookmarking site. Yes, yes, I know: another one. Open Bookmarks was basically a static Hugo site, hosted on Netlify. But wanting to make it easy to post new links without having to do a Git checkout or fiddle around with YAML front-matter, I thought of building a simple web service for this.

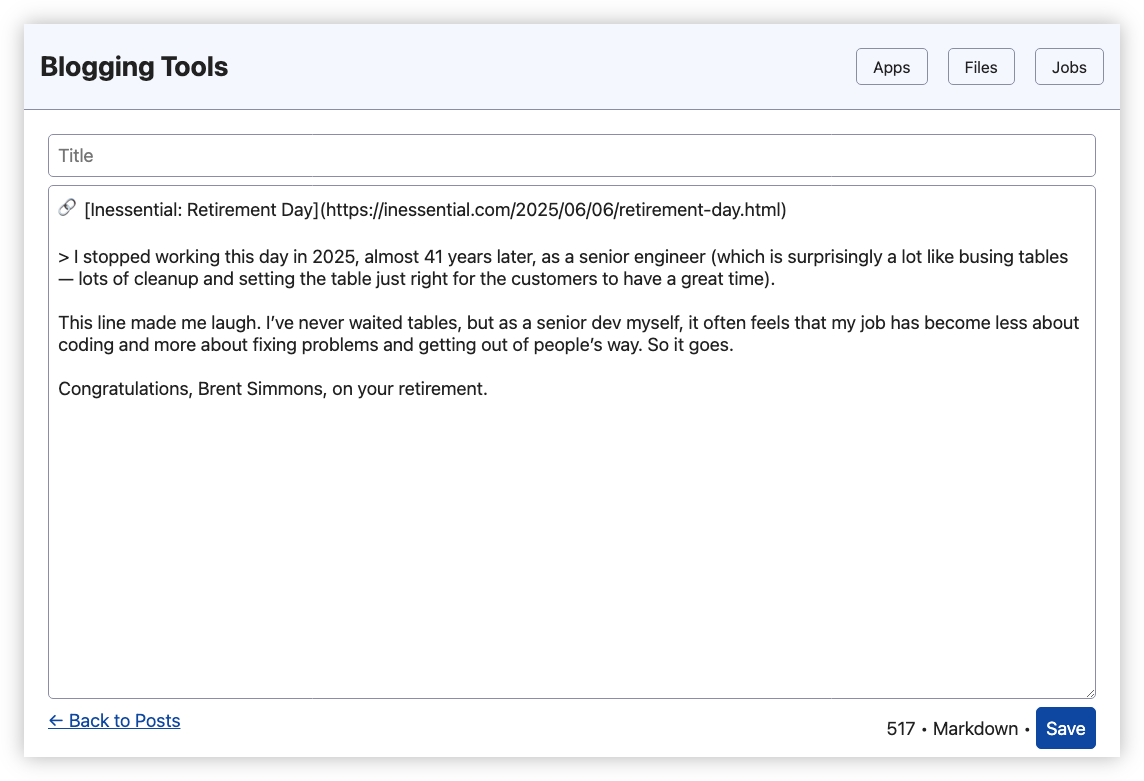

I don’t want to say too much about it, other than that I managed to get the post functionality working. Creating a new link post would involve fetching the page, and pre-populating the post with the link, an optional via link, and the fetched page title. I’d just finish the post with some quotes or quips, then click Post. That would save the post in a local database, write it to a staged Hugo site, run Hugo to generate the static site, and upload it to Netlify. There was nothing special about link posts per se: they were just a specialisation of the regular posting feature (a template, if you will), which worked the same way, just without the pre-fills.
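The publish step described above can be sketched in Go, assuming a hypothetical LinkPost struct: render the post as a Hugo content file with YAML front matter, then build the staged site with the hugo CLI. The link and via front-matter keys are assumptions (a theme would have to read them), and the Netlify upload is left out.

```go
package main

import (
	"fmt"
	"os/exec"
	"strings"
	"time"
)

// LinkPost is a hypothetical model of a link post; field names are illustrative.
type LinkPost struct {
	Title string
	Link  string
	Via   string // optional "via" link; omitted when empty
	Body  string // the quotes or quips added by hand
	Date  time.Time
}

// renderHugoMarkdown writes the post as a Hugo content file with YAML front matter.
// title and date are standard Hugo keys; link and via are assumed custom params.
// %q output is close enough to YAML double-quoted strings for simple values.
func renderHugoMarkdown(p LinkPost) string {
	var b strings.Builder
	b.WriteString("---\n")
	fmt.Fprintf(&b, "title: %q\n", p.Title)
	fmt.Fprintf(&b, "date: %s\n", p.Date.Format(time.RFC3339))
	fmt.Fprintf(&b, "link: %q\n", p.Link)
	if p.Via != "" {
		fmt.Fprintf(&b, "via: %q\n", p.Via)
	}
	b.WriteString("---\n\n")
	b.WriteString(p.Body)
	b.WriteString("\n")
	return b.String()
}

// hugoBuild returns (without running) the command that renders the staged
// site in siteDir into outDir, ready to be uploaded.
func hugoBuild(siteDir, outDir string) *exec.Cmd {
	return exec.Command("hugo", "--source", siteDir, "--destination", outDir)
}

func main() {
	post := LinkPost{
		Title: "Example",
		Link:  "https://example.com/",
		Body:  "A quip.",
		Date:  time.Date(2025, 6, 1, 9, 0, 0, 0, time.UTC),
	}
	fmt.Print(renderHugoMarkdown(post))
	fmt.Println(hugoBuild("/tmp/staged-site", "/tmp/staged-site-public").Args)
}
```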

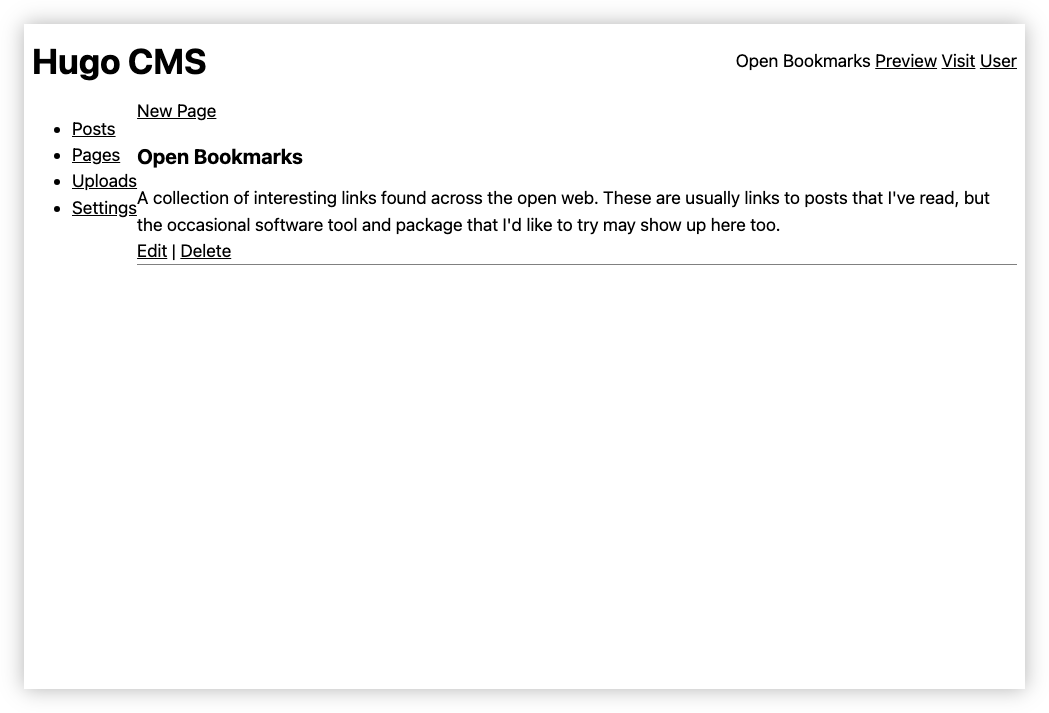

The other thing I added was support for adding arbitrary pages. This was a dramatic simplification of what is possible in Hugo, in that only one page bundle was supported. And it was pretty compromised: you had to set the page title to “Index” to modify the home page. But it was enough for the home page, plus some additional pages at the top level.

One other feature was that you could “preview” the site within the app itself, if you didn’t have a Netlify site set up. I had an idea of adding support for staging posts prior to publishing them to Netlify, so that one could review them. But this never got built.

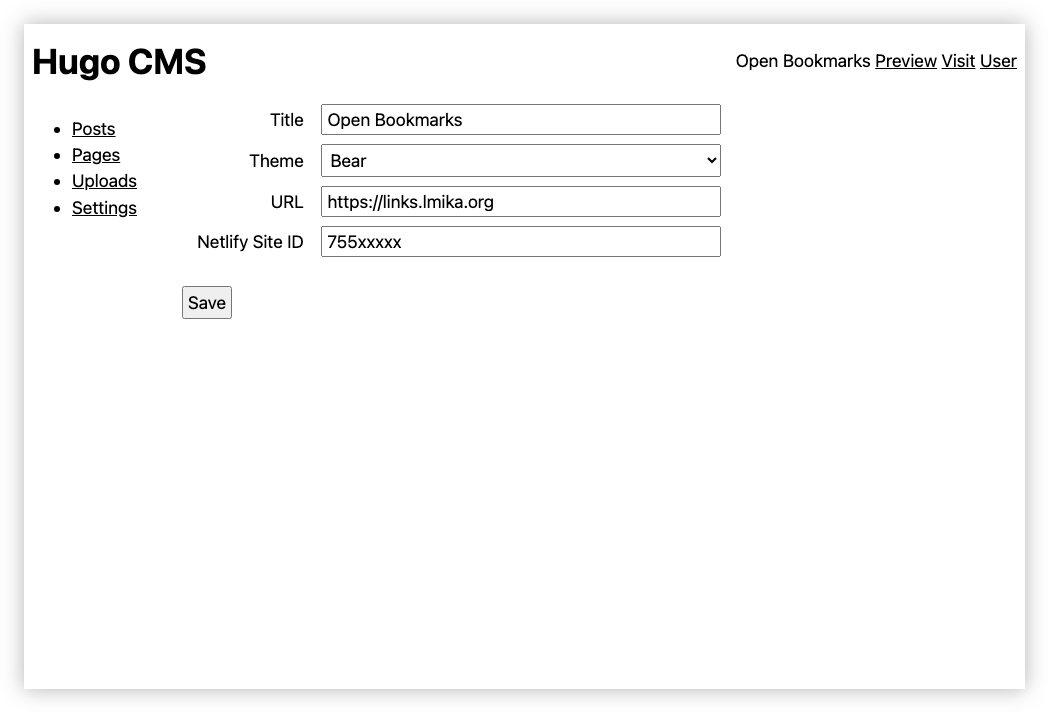

Finally there were some options for configuring the site properties. Uploads were never implemented.

Here are some screenshots, which aren’t much more than evidence that I was prioritising the backend over the user experience:

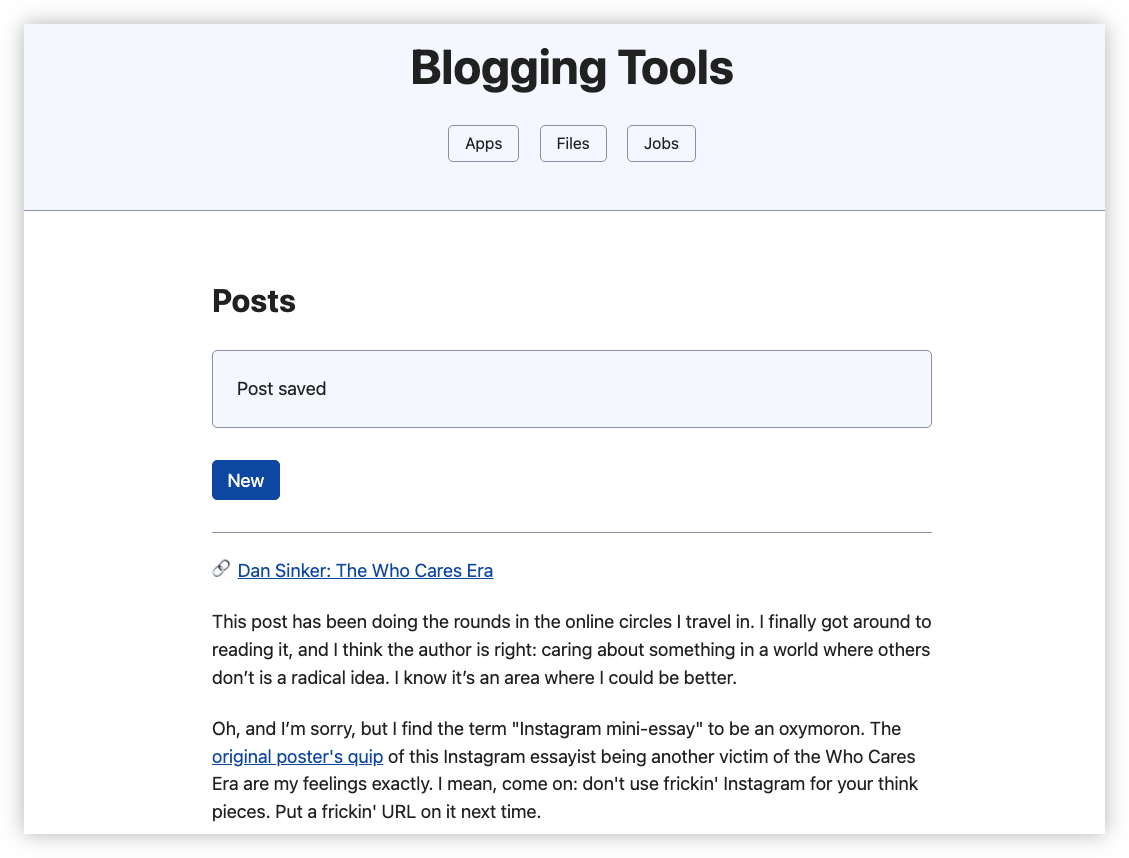

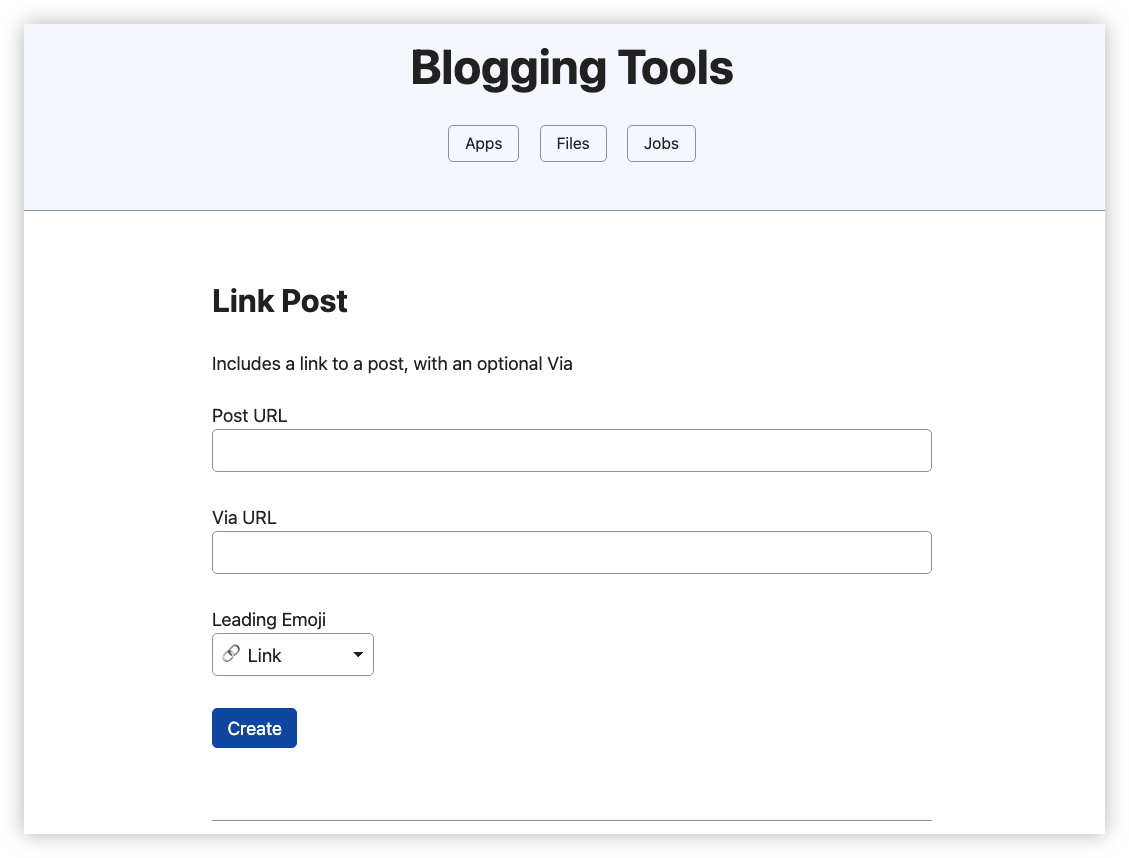

So, why was this killed? Well, apart from the fact that it seemed like remaking prior art — I’m sure there are plenty of Hugo CMSes out there — it seemed strange having two systems that relate to blogging. Recognising this, I decided to add this functionality to Blogging Tools:

This can’t really be described as a CMS. It’s more of a place to create posts from a template, which can then be published via a Micropub endpoint. But it does the job I need: creating link posts with the links pre-filled.
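Publishing through Micropub can be as small as a form-encoded POST. This sketch only builds the request: newMicropubRequest is a hypothetical helper, the endpoint and token are placeholders, and `h=entry` with a `content` field is the simplest create request the Micropub specification describes.

```go
package main

import (
	"fmt"
	"net/http"
	"net/url"
	"strings"
)

// newMicropubRequest builds (but does not send) a form-encoded Micropub
// create request for a simple entry.
func newMicropubRequest(endpoint, token, content string) (*http.Request, error) {
	form := url.Values{}
	form.Set("h", "entry")       // create an h-entry
	form.Set("content", content) // the post body, e.g. a link plus a quip
	req, err := http.NewRequest(http.MethodPost, endpoint, strings.NewReader(form.Encode()))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Content-Type", "application/x-www-form-urlencoded")
	req.Header.Set("Authorization", "Bearer "+token)
	return req, nil
}

func main() {
	// Placeholder endpoint and token; a real client would discover the
	// endpoint and obtain the token through IndieAuth.
	req, err := newMicropubRequest("https://example.com/micropub", "TOKEN", "Hello")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(req.Method, req.URL, req.ContentLength)
	// Sending it would be: resp, err := http.DefaultClient.Do(req)
}
```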

Encapsulation In Software Development Is Underrated

Encapsulation is something object-oriented programming got right.

Rubberducking: Of Brass and Browsers

🦆: Did you hear about The Browser Company?

L: Oh yeah, I heard the CEO wrote a letter about Arc.

🦆: Yeah, did you ever use Arc?

L: Nah. Probably won’t now that it seems like they’ve stopped work on it. Heard it was pretty nice though.

🦆: Yeah, I heard Scott Forstall had an early look at it.

L: Oh yeah, and how he compared it to a saxophone and recommended making it more like a piano.

🦆: Yeah.

L: Yeah. Not sure I agree with him.

🦆: Oh really?

L: Yeah. I mean, there’s nothing wrong with pianos. Absolutely love them. But everyone seems to be making those, and no-one’s making saxophones, violins, etc. And we need those instruments too.

🦆: Yeah, I suppose an orchestra with 30 pianos would sound pretty bland.

L: Yeah, we need all the instruments: the ones that are approachable, and the ones for those with the technical skills to get the best sound.

And no-one's a beginner forever. I'm sure there are piano players out there who would like to try something else eventually, like a saxophone.

🦆: Would you say Vivaldi is like a saxophone?

L: I'd probably say Vivaldi is like a synthesiser. The basics are approachable for the beginners, yet it's super customisable for those that want to go beyond the basics.

And just like a synthesiser, it can be easy to get it sounding either really interesting or really bizarre. You can get into a state you can't back out of, and you'll have to start from scratch.

🦆: Oh, I can't imagine that being for everyone.

L: No, indeed. Probably for those piano players that would want to try something else.

Devlog: Dynamo-Browse Now Scanning For UCL Extensions

Significant milestone in integrating UCL with Dynamo-Browse, as UCL extensions are now being loaded on launch.

Devlog: UCL — Assignment

Some thoughts on changing how assignments work in UCL to support subscripts and pseudo-variables.

Serious Maintainers

I just learnt that Hugo has changed their layout directory structure (via) and has done so without bumping the major version. I was a little peeved by this: this is a breaking change¹, and they’re not signalling it the “semantic versioning” way by going from 1.x.x to 2.0.0. Surely they know that people are using Hugo, and that an ecosystem of sorts has sprung up around it.
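For reference, the semantic versioning convention mentioned here boils down to a simple rule: a breaking change bumps MAJOR and resets the rest, a new feature bumps MINOR, and a fix bumps PATCH. A minimal Go sketch, assuming plain MAJOR.MINOR.PATCH strings (no pre-release or build metadata) and a hypothetical Change type:

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// Change classifies a release by the most significant change it contains.
type Change int

const (
	Fix      Change = iota // backwards-compatible bug fix
	Feature                // backwards-compatible new functionality
	Breaking               // incompatible change
)

// nextVersion returns the version that should follow current for the given
// change. It only understands MAJOR.MINOR.PATCH (a leading "v" is dropped).
func nextVersion(current string, change Change) (string, error) {
	parts := strings.Split(strings.TrimPrefix(current, "v"), ".")
	if len(parts) != 3 {
		return "", fmt.Errorf("not a MAJOR.MINOR.PATCH version: %q", current)
	}
	var n [3]int
	for i, p := range parts {
		v, err := strconv.Atoi(p)
		if err != nil || v < 0 {
			return "", fmt.Errorf("invalid component %q in %q", p, current)
		}
		n[i] = v
	}
	major, minor, patch := n[0], n[1], n[2]
	switch change {
	case Breaking:
		major, minor, patch = major+1, 0, 0
	case Feature:
		minor, patch = minor+1, 0
	default:
		patch++
	}
	return fmt.Sprintf("%d.%d.%d", major, minor, patch), nil
}

func main() {
	for _, c := range []Change{Fix, Feature, Breaking} {
		v, err := nextVersion("1.4.2", c)
		if err != nil {
			fmt.Println("error:", err)
			continue
		}
		fmt.Println(v)
	}
}
```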

But then a thought occurred: what if they don’t know? What if they’re plugging away at their little project, thinking that it’s them and a few others using it? They probably think it’s safe for them to slip this change in, since it’ll only inconvenience a handful of users.

I doubt this is actually the case: it’s pretty hard to avoid the various things that are using Hugo nowadays. But this thought experiment led to some reflection on the stuff I make. I’m planning a major change to one of my projects that will break backwards compatibility too. Should I bump the major version number? Could I slip it into a point release? How many people will this touch?

I could take this route, with the belief it’s just me using this project, but do I actually know that? And even if no-one’s using it now, what would others coming across this project think? What’s to get them to start using it, knowing that I just “pulled a Hugo”? If I’m so carefree about such changes now, could they trust me not to break the things they depend on later?

Now, thanks to website analytics, I know for a fact that only a handful of people are using the thing I built, so I’m hardly in the same camp as the Hugo maintainers. But I came away from this wondering if it’s worth pretending that making this breaking change will annoy a bunch of users, and that others may write posts like this one if I’m not serious about it. I guess you could call this an example of “fake it till you make it,” or, to borrow a quote from Logan Roy in Succession: being a “serious” maintainer. If I take this project seriously, then others can too.

It might be worth a try. It’s highly unlikely that this alone will lead to success or adoption, but I can’t see how it will hurt.

Devlog: Blogging Tools — Finished Podcast Clips

Well, it’s done. I’ve finally finished adding the podcast clip feature to Blogging Tools. And I won’t lie to you: it took longer than expected, even after enabling some of the AI features my IDE came with. The feature touched most of the key subsystems of Blogging Tools, but the biggest complexity came from designing how the clip creation flow should work. Blogging Tools is at a disadvantage compared to the clipping features in podcast players, in that it:

Blogging Tools needs to know this stuff for creating a clip, so there was no alternative to having the user input this when they’re creating the clip. I tried to streamline this in a few ways:

Rather than describe the whole flow in length, or prepare a set of screenshots, I’ve decided to record a video of how this all fits together.

The rest was pretty straightforward: the videos are made using ffmpeg, and publishing them on Micro.blog involved the Micropub API. There were some small frills added to the UI using both HTMX and Stimulus.JS so that job status updates could be pushed via web-sockets. They weren’t necessary, as it’s just me using this, but this project is becoming a bit of a testbed for stretching my skills, so I think small frills like this helped a bit.
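As a rough sketch of the ffmpeg step, assuming a still image and the source audio are already on disk, a clip can be built by looping the still as the video stream and trimming the audio with input-side `-ss` and `-t`. clipArgs is a hypothetical helper, and the codec choices (libx264 with `-tune stillimage`, AAC audio) are assumptions, not necessarily what Blogging Tools uses.

```go
package main

import (
	"fmt"
	"os/exec"
)

// clipArgs builds ffmpeg arguments that turn a still image plus an excerpt of
// an audio file into a video. startSec and durationSec select the excerpt.
func clipArgs(stillPath, audioPath, outPath string, startSec, durationSec float64) []string {
	secs := func(s float64) string { return fmt.Sprintf("%.3f", s) }
	return []string{
		"-y",                          // overwrite the output if it exists
		"-loop", "1", "-i", stillPath, // repeat the still as the video stream
		"-ss", secs(startSec), "-t", secs(durationSec), "-i", audioPath, // seek and limit the audio input
		"-c:v", "libx264", "-tune", "stillimage", "-pix_fmt", "yuv420p",
		"-c:a", "aac",
		"-shortest", // stop when the trimmed audio ends
		outPath,
	}
}

func main() {
	args := clipArgs("still.png", "episode.mp3", "clip.mp4", 90, 30)
	cmd := exec.Command("ffmpeg", args...)
	fmt.Println(cmd.String()) // print rather than run; cmd.Run() would execute it
}
```

One caveat with this choice: libx264 with yuv420p expects even frame dimensions, so the still should be rendered at an even width and height.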

I haven’t made a clip for this yet or tested out how this will feel on a phone, but I’m guessing both will come in time. I also learnt some interesting tidbits, such as the fact that the source audio of an <audio> tag requires an HTTP response that supports range requests. Seeking won’t work otherwise: trying to change the time position will just seek the audio back to the start.
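In Go, one way to get range support is to serve the audio through `http.ServeContent`, which answers a `Range` header with 206 Partial Content. A minimal sketch, assuming the audio is already in memory; audioHandler and fetchRange are hypothetical helpers, the latter just exercising the handler with `httptest`:

```go
package main

import (
	"bytes"
	"fmt"
	"net/http"
	"net/http/httptest"
	"time"
)

// audioHandler serves data via http.ServeContent, which handles Range
// requests, so an <audio> element can seek within the file.
func audioHandler(name string, data []byte) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		// A zero modtime simply omits the Last-Modified header.
		http.ServeContent(w, r, name, time.Time{}, bytes.NewReader(data))
	})
}

// fetchRange sends a ranged GET to h and reports status, body and Content-Range.
func fetchRange(h http.Handler, rangeHeader string) (int, string, string) {
	req := httptest.NewRequest(http.MethodGet, "/clip.mp3", nil)
	req.Header.Set("Range", rangeHeader)
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, req)
	return rec.Code, rec.Body.String(), rec.Header().Get("Content-Range")
}

func main() {
	h := audioHandler("clip.mp3", []byte("0123456789")) // stand-in bytes, not real audio
	code, body, cr := fetchRange(h, "bytes=2-5")
	fmt.Println(code, body, cr)
}
```

A handler that just writes the whole file with `w.Write` would ignore the `Range` header and always return 200, which matches the snap-back-to-start behaviour described above.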

Anyway, good to see this in prod and to be moving on to something else. I’m getting excited thinking about the next thing I want to work on. No spoilers now, but it features both Dynamo Browse and UCL.

Finally, I just want to make the point that this would not be possible without the open RSS podcasting ecosystem. If I were listening to podcasts on YouTube, forget it: I wouldn’t have been able to build something like this. I know for myself that I’ll continue to listen to RSS podcasts for as long as podcasters continue to publish them. Long may it be so.

Rubberducking: More On Mocking

Mocking in unit tests can be problematic due to the growing complexity of service methods with multiple dependencies, leading to increased maintenance challenges. But the root cause may not be the mocks themselves.

Devlog: Blogging Tools — Ideas For Stills For A Podcast Clips Feature

I recently discovered that Pocketcasts for Android has changed its clip feature. It still exists, but instead of producing a video which you could share on the socials, it produces a link that plays the clip in the Pocketcasts web player. Understandable to some degree: it always took a little bit of time to make these videos. But it’s hardly a suitable solution for sharing clips of private podcasts, as one could just listen to the entire episode from the site. Not to mention that the clip depends on an external service, and only works for as long as those links (or the original podcast) are around.

So… um, yeah, I’m wondering if I could build something for myself that could replicate this.

I’m thinking of another module for Blogging Tools. I was already using this tool to crop the clip videos that came from Pocketcasts, so it was already in my workflow. It also has ffmpeg bundled in the deployable artefact, meaning I could use it to produce video. Nothing fancy: I’m thinking of a still showing the show title, episode title, and artwork, with the audio track playing. I’m pretty confident that ffmpeg can handle such tasks.

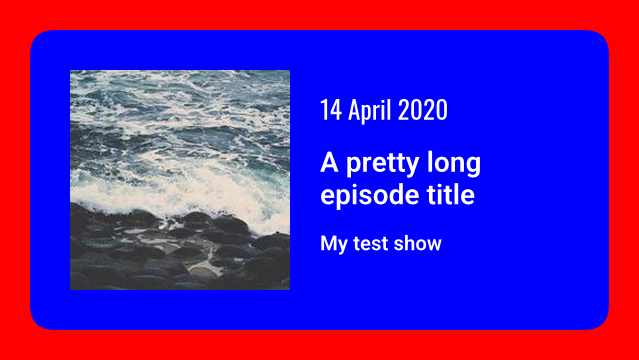

I decided to start with the fun part: making the stills. I started by using Draw2D to provide a very simple frame where I could place the artwork and render the text. I began with primary colours so I could get the layout looking good.

I’m using Roboto Semi-bold for the title font, and Oswald Regular for the date. I do like the look of Oswald: the narrower style contrasts nicely with the neutral Roboto. Draw2D provides methods for measuring text sizes, which I’m using to power the text-wrapping layout algorithm (it’s pretty dumb: it basically adds words to a line until the next word won’t fit in the available space).
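The greedy wrapping described above can be sketched in Go as follows. To keep it self-contained, the measure parameter stands in for Draw2D’s text-measuring methods (an assumption: any function returning a string’s rendered width will do), and wrapGreedy is a hypothetical name.

```go
package main

import (
	"fmt"
	"strings"
)

// wrapGreedy splits text into lines no wider than maxWidth, adding words to
// the current line until the next one would not fit. A single word wider
// than maxWidth gets a line to itself (and overflows) rather than being split.
func wrapGreedy(text string, maxWidth float64, measure func(string) float64) []string {
	var lines []string
	current := ""
	for _, word := range strings.Fields(text) {
		if current == "" {
			current = word
			continue
		}
		candidate := current + " " + word
		if measure(candidate) > maxWidth {
			lines = append(lines, current) // line is full; start a new one
			current = word
		} else {
			current = candidate
		}
	}
	if current != "" {
		lines = append(lines, current)
	}
	return lines
}

func main() {
	// Stand-in measure: 10 units per character. With Draw2D, the width would
	// come from measuring the string in the graphic context instead.
	measure := func(s string) float64 { return float64(len(s)) * 10 }
	for _, line := range wrapGreedy("Episode 123: The Quick Brown Fox Jumps Over", 160, measure) {
		fmt.Println(line)
	}
}
```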

The layout I got nailed down yesterday evening. This evening I focused on colour.

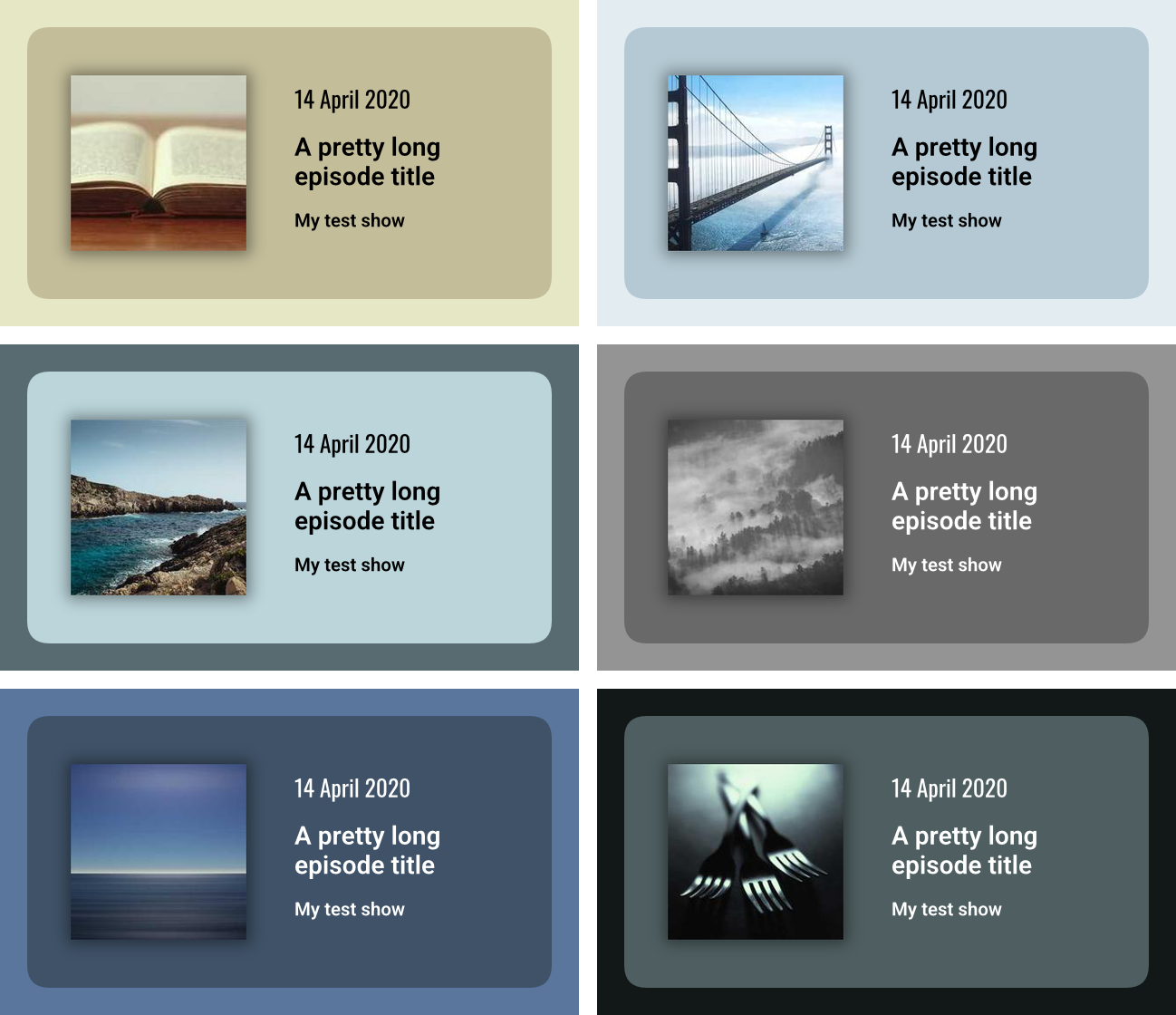

I want the frame to be interesting and close to the prominent colours in the artwork. I found this library, which returns the dominant colours of an image using K-means clustering. I’ll be honest: I haven’t looked at how this actually works. But I tried the library out with some random artwork from Lorem Picsum, and I was quite happy with the colours it was returning. After adding this library¹ to calculate the contrast for the text colour, plus a slight shadow, the stills started looking pretty good.
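The contrast library isn’t named here, so as a swapped-in technique, this sketch uses the WCAG 2 relative-luminance and contrast-ratio formulas to pick black or white text for a given background. The names are hypothetical, and it assumes opaque colours with R, G, and B in the 0 to 255 range.

```go
package main

import (
	"fmt"
	"image/color"
	"math"
)

var (
	Black = color.RGBA{R: 0, G: 0, B: 0, A: 255}
	White = color.RGBA{R: 255, G: 255, B: 255, A: 255}
)

// linear converts an 8-bit sRGB channel to linear light (WCAG 2 formula).
func linear(c uint8) float64 {
	v := float64(c) / 255
	if v <= 0.03928 {
		return v / 12.92
	}
	return math.Pow((v+0.055)/1.055, 2.4)
}

// luminance is the relative luminance of an opaque colour: 0 for black, 1 for white.
func luminance(c color.RGBA) float64 {
	return 0.2126*linear(c.R) + 0.7152*linear(c.G) + 0.0722*linear(c.B)
}

// contrastRatio returns (L1 + 0.05) / (L2 + 0.05) with L1 the lighter colour,
// giving a value between 1 and 21.
func contrastRatio(a, b color.RGBA) float64 {
	la, lb := luminance(a), luminance(b)
	if la < lb {
		la, lb = lb, la
	}
	return (la + 0.05) / (lb + 0.05)
}

// textColourFor picks whichever of black or white contrasts more with bg.
func textColourFor(bg color.RGBA) color.RGBA {
	if contrastRatio(bg, Black) >= contrastRatio(bg, White) {
		return Black
	}
	return White
}

func main() {
	bg := color.RGBA{R: 0x2a, G: 0x6f, B: 0x97, A: 0xff} // an arbitrary card background
	text := textColourFor(bg)
	fmt.Printf("text %v, contrast %.2f\n", text, contrastRatio(bg, text))
}
```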

I then tried some real podcast artwork, starting with ATP. And that’s where things started going off the rails a little:

The library returns the colours in order of frequency, and I was using the first colour as the border and the second as the card background. But I’m guessing that since the ATP logo has so few actual colours, the K-means algorithm was finding colours of equal prominence and returning them in a random order. With the first and second colours being equally prominent, the results were a little garish and completely random.
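To illustrate why equally prominent colours can come back in an arbitrary order, here is a small k-means sketch in Go. It is not the library’s implementation: it seeds centres deterministically (first pixel, then the pixel farthest from any existing centre), runs a fixed number of iterations, and stably sorts clusters by pixel count, so ties keep a fixed order. A library that seeds randomly gives no such guarantee. DominantColours, RGB, and Cluster are hypothetical names.

```go
package main

import (
	"fmt"
	"sort"
)

// RGB is a colour as three channels in the 0-255 range.
type RGB [3]float64

// Cluster is one dominant colour: the mean of its pixels and how many there are.
type Cluster struct {
	Centre RGB
	Count  int
}

func dist2(a, b RGB) float64 {
	var d float64
	for i := range a {
		d += (a[i] - b[i]) * (a[i] - b[i])
	}
	return d
}

// nearest returns the index of the centre closest to p.
func nearest(p RGB, centres []RGB) int {
	best := 0
	for c := 1; c < len(centres); c++ {
		if dist2(p, centres[c]) < dist2(p, centres[best]) {
			best = c
		}
	}
	return best
}

// DominantColours clusters pixels into at most k colours, largest cluster first.
func DominantColours(pixels []RGB, k, iterations int) []Cluster {
	if len(pixels) == 0 || k <= 0 {
		return nil
	}
	if iterations < 1 {
		iterations = 1
	}
	// Deterministic seeding: start with the first pixel, then add the pixel
	// farthest from its nearest existing centre.
	centres := []RGB{pixels[0]}
	for len(centres) < k && len(centres) < len(pixels) {
		far, farDist := 0, -1.0
		for i, p := range pixels {
			if d := dist2(p, centres[nearest(p, centres)]); d > farDist {
				far, farDist = i, d
			}
		}
		centres = append(centres, pixels[far])
	}
	var counts []int
	for it := 0; it < iterations; it++ {
		sums := make([]RGB, len(centres))
		counts = make([]int, len(centres))
		// Assignment step: each pixel joins its nearest centre.
		for _, p := range pixels {
			c := nearest(p, centres)
			for j := range p {
				sums[c][j] += p[j]
			}
			counts[c]++
		}
		// Update step: move each non-empty centre to the mean of its pixels.
		for c := range centres {
			if counts[c] > 0 {
				for j := range sums[c] {
					centres[c][j] = sums[c][j] / float64(counts[c])
				}
			}
		}
	}
	var out []Cluster
	for c, centre := range centres {
		if counts[c] > 0 {
			out = append(out, Cluster{Centre: centre, Count: counts[c]})
		}
	}
	// Stable sort: equally sized clusters keep their seeding order.
	sort.SliceStable(out, func(i, j int) bool { return out[i].Count > out[j].Count })
	return out
}

func main() {
	pixels := []RGB{{250, 10, 10}, {240, 20, 15}, {20, 20, 230}, {30, 10, 240}, {245, 5, 5}}
	for _, c := range DominantColours(pixels, 2, 10) {
		fmt.Printf("%.0f x%d\n", c.Centre, c.Count)
	}
}
```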

To reduce the effects of this, I finished the evening by trying a variation where the card background was simply a shade of the border. That still produced random results, but at least the colour choices were a little more harmonious.
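Deriving the card background as a shade of the border can be as simple as scaling each channel toward black. A minimal sketch, assuming an opaque colour and a hypothetical Colour type and shade function; the factor (0 gives black, 1 leaves the colour unchanged) is an arbitrary choice:

```go
package main

import "fmt"

// Colour is an opaque 8-bit RGB colour.
type Colour struct{ R, G, B uint8 }

// shade mixes c toward black: factor 1 keeps c, 0 gives black.
// Factors outside [0, 1] are clamped.
func shade(c Colour, factor float64) Colour {
	if factor < 0 {
		factor = 0
	} else if factor > 1 {
		factor = 1
	}
	// Round to the nearest integer, halves rounding up.
	scale := func(v uint8) uint8 { return uint8(float64(v)*factor + 0.5) }
	return Colour{scale(c.R), scale(c.G), scale(c.B)}
}

func main() {
	border := Colour{R: 0x2a, G: 0x6f, B: 0x97}
	fmt.Printf("border %+v -> card %+v\n", border, shade(border, 0.6))
}
```

Because the card is derived from the border, the pair always share a hue, which is why the results look more harmonious even when the dominant colour itself is picked at random.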

I’m not sure what I want to do here. I’ll need to explore the library a little, just to see whether it’s possible to reduce the amount of randomness. Might be that I go with the shaded approach and just keep it random: having some variety could make things interesting.

Of course, I’m still doing the easy and fun part. How the UI for making the clip will look is going to be a challenge. More on that in the future if I decide to keep working on this. And if not, at least I’ve got these nice looking stills.

The Alluring Trap Of Tying Your Fortunes To AI

It’s when the tools stop working the way you expect that you realise the full cost of what you bought into.

Devlog: Dialogues

A post describing a playful dialogue styling feature inspired by rubber-duck debugging, and discussing the process and potential uses for it.

On AI, Process, and Output

Manuel Moreale’s latest post about AI was thought-provoking:

One thing I’m finding interesting is that I see people falling into two main camps for the most part. On one side are those who value output and outcome, and how to get there doesn’t seem to matter a lot to them. And on the other are the people who value the process over the result, those who care more about how you get to something and what you learn along the way.

I recently turned on the next level of AI assistance in my IDE. Previously I was using line auto-complete, which was quite good. This next level gives me something closer to Cursor: prompting the AI to generate full method implementations, or having a chat interaction.

And I think I’m going to keep it on. One nice thing about this is that it’s on-demand: it stays out of the way, letting me implement something by hand if I want to. This is probably going to be the majority of the time, as I do enjoy the process of software creation.

But other times, I just want a capability added, such as marshalling and unmarshalling things to a database. In the past, this would largely be code copied and pasted from another file. With the AI assistance, I can get this code generated for me. Of course I review it (I’m not vibe coding here), but it saves me from making a few subtle bugs and from some pretty boring editing.

I guess my point is that I think these two camps are more porous than people think. There are times where the process is half the fun in making the thing, and others where it’s a slog, and you just want the thing to be. This is true for me in programming, and I can only guess that it’ll be similar in other forms of art. I guess the trap is choosing to join one camp, feeling that’s the only camp that people should be in, and refusing to recognise that others may feel differently.

Merge Schema Changes Only When The Implementation Is Ready

Integrating schema changes and implementation together before merging prevents project conflicts and errors for team members.