-

A Tour Of My New Self-Hosted Code Setup

While working on the draft for this post, a quote from Seinfeld came to mind that I thought was quite an apt description of this little project: “Breaking up is knocking over a Coke machine. You can’t do it in one push. You gotta rock it back and forth a few times and then it goes over.” I’ve been thinking about “breaking up” with GitHub on and off for a while now. Continue reading →

-

Work offered us a very… American-style lunch today. First time I had bacon with my pancakes. Honestly, not as bad as I was expecting.

-

👨‍💻 New post on Moan-routine over at Coding Bits: Zerolog’s API Mistake

-

Zerolog’s API Mistake

I’ll be honest, I was expecting a lot more moan-routine posts than I’ve written to date. Guess I’ve been in a positive mood. That is, until I started using Zerolog again this morning. Zerolog is a Go logging package that we use at work. It’s pretty successful, and all in all a good logger. But they made a fundamental mistake in their API which trips me up from time to time: they’re not consistent with their return types. Continue reading →
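The excerpt doesn’t say which return types clash, so treat this as a hypothetical illustration rather than a description of zerolog itself. One common way inconsistent return types bite in Go builder-style APIs is when some methods return a pointer (so calling them mutates the receiver) while others return a modified copy (so the result must be reassigned). `Event` and `Context` below are made-up stand-ins, not zerolog’s actual types:

```go
package main

import (
	"fmt"
	"strings"
)

// Event (hypothetical) uses the pointer-returning style: Str mutates the
// receiver and returns it, so ignoring the return value is harmless.
type Event struct{ fields []string }

func (e *Event) Str(key, val string) *Event {
	e.fields = append(e.fields, key+"="+val)
	return e
}

func (e *Event) String() string { return strings.Join(e.fields, " ") }

// Context (hypothetical) uses the value-returning style: Str works on a copy
// and returns it, so ignoring the return value silently drops the field.
type Context struct{ fields []string }

func (c Context) Str(key, val string) Context {
	// Copy before appending so the caller's backing array is never shared.
	next := make([]string, len(c.fields), len(c.fields)+1)
	copy(next, c.fields)
	c.fields = append(next, key+"="+val)
	return c
}

func (c Context) String() string { return strings.Join(c.fields, " ") }

func main() {
	e := &Event{}
	e.Str("user", "alice") // fine: e was mutated in place
	fmt.Println(e)         // user=alice

	c := Context{}
	c.Str("user", "alice") // compiles, but the returned copy is thrown away
	fmt.Println(c)         // prints an empty line

	c = c.Str("user", "alice") // the value style needs a reassignment
	fmt.Println(c)             // user=alice
}
```

Both calls look identical at the call site, and the compiler doesn’t flag the discarded result, which is what makes mixing the two styles easy to trip over.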

-

Got a long post written that I wanted to publish today. But I need to add the audio narration to it, and my voice is just not working this evening. So I’m going to have to hold it for a bit longer. A shame, but if a day late means a better overall post, it might be worth it in the end.

-

The gym has discovered they have a heater, which is immensely welcome, because wearing shorts and a T-shirt in weather that’s barely 16°C is not fun (and this is the warmest it’s been in weeks). 🥶

-

An interesting tale on how .DS_Store (a regular in Git ignore files everywhere) got its name. Via @Burk within the Hemispheric Views Discord.

-

Woke up with this tune in my head this morning. Managed to record it before I forgot it, then I added some accompaniments. I’ve called it Prophet, after the synth. It’s a decent start but I’m not sure how to continue it from this point on.

-

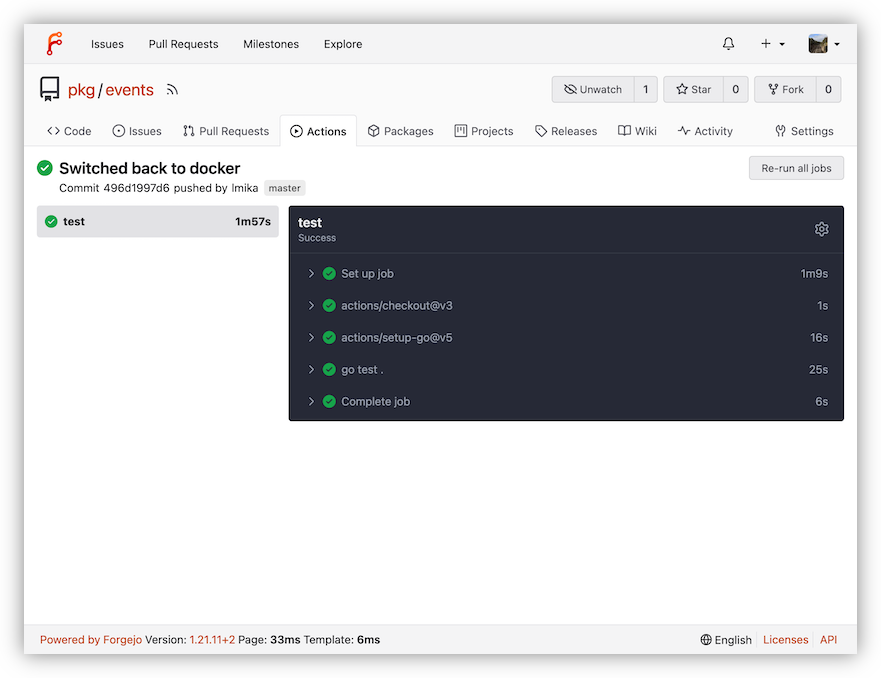

Added the final pieces of my self-hosted Forgejo instance this morning: a macOS runner and daily backups. I think we’re finally ready to start using it for current projects now.

-

🔗 txt.fyi

Thank you to the anonymous person who runs this. Something happened that left me ropeable, and I needed a place to scream into the void. I did it there. It’s now lost to the ether, along with (most of) my anger. Hopefully time will fix what’s left.

-

A Bit of 'Illuminating' Computer Humour

Here’s some more computer-related humour to round out the week: How many software developers does it take to change a lightbulb? Just one. How many software developers does it take to change 2 lightbulbs? Just 10. How many software developers does it take to change 7 lightbulbs? One, but everyone within earshot will know about it. How many software developers does it take to change 32 lightbulbs? Just one, provided the space is there. Continue reading →

-

Just bought Crystal Caves HD from GOG. This might be the best $4.00 I spend today. 💎

-

Mark the date. First successful CI/CD run of a Go project on my own Forgejo instance, hosted on Hetzner. 🙌

-

Some day, I’ll be working on a task I’d be pressured to get finished right then and there, and no one will be messaging me while I’m doing it. Today was not that day. 👨‍💻📳

-

Last night, I set up a Linode server to try out Forgejo. The setup went smoothly, and I managed to get Forgejo up and running, but it’s a little expensive: around $18.00 AUD for a 2 GB server with 50 GB of storage. So I’m going to try out Hetzner. I should, in theory, be able to get two servers (one for the frontend, and one as a CI/CD worker), both with twice as much RAM, plus a 50 GB volume, for around $17.00 AUD.

The only downside is that the servers are further away: Falkenstein, Germany, rather than Sydney (I can’t be the only one who wishes the speed of light were faster). We’ll see how much the latency is going to annoy me.
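Putting rough numbers on the comparison, using the approximate prices quoted above and assuming “twice as much RAM” means 4 GB per Hetzner server (8 GB across both). Both prices also include about 50 GB of storage, so this only compares RAM:

$$
\text{Linode: } \frac{\$18.00}{2\ \text{GB}} = \$9.00\ \text{per GB of RAM}
\qquad
\text{Hetzner: } \frac{\$17.00}{2 \times 4\ \text{GB}} \approx \$2.13\ \text{per GB of RAM}
$$

That works out to a bit over four times less per GB of RAM, before counting the cost of the extra latency.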

-

Ok, going to try out Forgejo for self-hosting my code. Got through the hardest part, which was paying for a Linux VPS (with backups enabled), and I’ll start with some old repositories that I won’t feel bad losing. But if it all works out, I’ll use it as my replacement for GitHub. Wish me luck. 🤞

-

I’m enjoying the special guests on Downstream… but I do miss Julia. I mean, I’m super happy for her career advancement which led to her departure, but she and Jason were a great podcasting duo. But it’s fine, the special guests are great too. Currently listening to the episode with Tim Goodman.

-

I wish more podcasters knew what a double-ended podcast is. Having Zoom’s compression algorithm garble your most important point is not a great listening experience. If you’re just starting off, or if you have a guest, that I understand. But if you’ve been doing this for years as part of your job? 🫤

-

There are times when I wish Go had Python-like tuples, but I think the decision to keep them out of the language is a good one. I feel like it’s easy for people to overuse tuples instead of coming up with new dedicated types. Go isn’t completely immune to this (I’ve seen some functions returning slices of slices of strings), but it does try to encourage writing code with many different types, each one with a narrow use case. The fact that this is found both in the culture and in the language itself (e.g. anonymous structs) is a good thing.
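A minimal sketch of the trade-off, using a hypothetical contacts example (the names and data are made up): the first function returns the kind of slice of slices of strings mentioned above, where meaning depends on index positions; the second gives each pair its own narrow type; and the last lines use an anonymous struct for a one-off grouping that doesn’t deserve a name.

```go
package main

import "fmt"

// Contact is a dedicated type with named fields.
type Contact struct {
	Name  string
	Email string
}

// contactsAsSlices returns tuple-like rows: row[0] is the name, row[1] the
// email, and nothing but convention enforces that.
func contactsAsSlices() [][]string {
	return [][]string{{"Alice", "alice@example.com"}}
}

// contacts returns the same data using the dedicated type.
func contacts() []Contact {
	return []Contact{{Name: "Alice", Email: "alice@example.com"}}
}

func main() {
	for _, row := range contactsAsSlices() {
		fmt.Println(row[0], row[1]) // positional: easy to mix up
	}
	for _, c := range contacts() {
		fmt.Println(c.Name, c.Email) // named: self-describing
	}

	// An anonymous struct for a grouping that is only used right here.
	point := struct{ X, Y int }{X: 1, Y: 2}
	fmt.Println(point.X + point.Y)
}
```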

-

A university text-book author walks into a bar. The punchline is left as an exercise for the reader.

-

A QA walks into a bar, crawls into a bar, flies into a bar, and orders: a beer, 2 beers, 0 beers, -1 beers, then walks out saying “Test complete.” Meanwhile, a software developer asked to do QA walks into a bar and says “I didn’t fall down walking in. Test complete.”

-

Ran into my old barista this morning. He made morning coffees at the station in the late 2010s, before Covid wiped his business out. I thought he’d headed back to New Zealand after that, but no, he’s still around and doing quite well (just not morning coffees). Really great to see him again. ☕️

-

Spent the last week polishing up the tool I use that takes journal entries from a Day One export and adds them as blog posts to a Hugo site. That tool is now open source for anyone else who may want to do this. You can find a link to it here: day-one-to-hugo.

Also recorded a simple demo video on how to use it:

-

It would be nice for browsers to remember every closed tab that’s been open for more than, say, a day. This could sit alongside the browser’s current history and recently closed tabs list, which are more geared towards your recent browsing. But unlike those, this would maintain a long-term history, recording every closed tab since the beginning of time. And it doesn’t even need to be the full back-stack: the last visited URL would be fine.

The day limit is important, as it provides a good hint that it’s a tab I want to revisit later. There have been many times I’ve had a tab open for weeks, telling myself that I’ll read or do something with it, only to close it later, either accidentally or because I wanted a tidier browser workspace. If and when the time comes that I want to revisit it, it has fallen out of the history, and all I end up with is regret for not making a bookmark.

I suppose I could get into the habit of bookmarking things when I close them, but that’ll just move the mess from the browser to Linkding. No, this is something that might work better in the browsers themselves.