Blogging Tools - Podcast Clip Favourites

Inspired by the way Ron Jeffries writes about his work, as well as by Martin Hähnel’s attempt at this, I thought for this instalment of Devlog I’ll try a more “lab notes” approach. This means a potentially more mundane and less satisfactory description of project work today: less showing of what was accomplished, and more of a running commentary. I wanted to see if I liked this style of writing, and if it helped me or slowed me down. Preliminary results were mixed: it did slow me down, but I found it enjoyable. I’ll try this a few more times to see how I feel about it.

Today’s coding session is to continue the work of saving favourite podcast clips from Blogging Tools. Previously this was a standalone application connected to a database, but with the tech stack used to build it falling out of active support, and the feeds no longer working for reasons I’d rather not look into, I want to replace it with a static site that takes nothing to maintain. One other thing is that I want to move away from referencing links to the podcast clips and save the actual audio instead. Since I’m dealing with copyrighted material, some of which is paid content, this site will be kept private.

I’m choosing to use Blogging Tools for this as it has a relatively decent podcast clipper that I use for clips I publish to my site. For the static site itself, I’m choosing to look at Hugo, given that I’m comfortable with this approach too, although I do have some concerns about the long-term viability of this for sites I don’t touch that often. An initial attempt at this reused another site I use to track audio, but its template had fallen out of maintenance and broke when I moved to Hugo 0.151. The Hugo maintainers really need to clean up their versioning act.

Anyway, the current status of this is a temporary Hugo site with a new template. At this stage, I’ve got Blogging Tools running a new job type that will take the clipped audio and image thumbnail, save them as static data to Hugo, and publish a new post.

At the moment, the template isn’t including the thumbnail or audio HTML. This is now a point of committing to this site and template: moving it out of the temp directory and into a proper workspace. So let’s start that now. I’m using the Hugo Flex theme as it provides a nice clean canvas to start with, and it seems to be in active maintenance (something to watch out for when dealing with Hugo themes). I added the Git module, set Goldmark unsafe to true (once again), and now the new Hugo site is ready:

Now testing this with Blogging Tools. I’ve already got an ATP clip ready to go. And after remembering to change the target directory from the temp Hugo site to the real one, I am ready to test the extract. All that involves is clicking “Save”:

Okay, that didn’t work for a very stupid reason. I set the target directory to the Blogging Tools repo, not the Hugo site. Made the change (Blogging Tools is being run using Air, which gives me the file watching capabilities one would see in the frontend world) and can now see that the new post was written to the new Hugo site.

Now to adjust the template a little, just to add the audio and thumbnail. The Markdown files produced by Blogging Tools have all the clip information in the front matter:

---
title: "449: An Unclean Mouse"
episode:
  guid: some-random-id
  url: "https://atp.fm/449"
  title: "449: An Unclean Mouse"
  date: 2021-09-23 12:27:49 +1000 AEST
  image_src: "https://cdn.atp.fm/artwork-bootleg"
  audio_src: "https://example.com/file.mp3"
show:
  title: "Accidental Tech Podcast: Unedited Live Stream"
clip:
  start: 0s
  duration: 60ms
date: 2021-09-23 12:27:49 +1000 AEST
thumbnail: images/DQkt_Lk5QRjXVHE0rdR09.jpg
audio: audio/5fFX8m0BqqFqTwlVAtb2Y.mp3
---
Test thing

Oh, before I do that. Let me fix the dates so that they’re in UTC and formatted as ISO 8601. This is a quick change to the template used by Blogging Tools:

date: {{.Clip.EpisodeDate.UTC.Format "2006-01-02T15:04:05Z07:00"}}

Another quick test… perfect. The dates are coming through again. Oh, and a quick aside: because the front matter is all included in one Go template, I had to make sure to properly quote the string values, lest they contain colons and other constructs YAML doesn’t like. Easy way to do that is pipe them through printf "%q", which quotes them as if they’re Go strings. May not be perfect but it’s good enough for now:

title: {{.Clip.EpisodeTitle | printf "%q"}}
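To see the quoting in isolation, here is a small sketch using text/template from the standard library. The one-line template and the renderTitle helper are hypothetical stand-ins, not the actual Blogging Tools template.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// frontMatter is a cut-down, hypothetical front matter template with a
// single field piped through printf "%q".
const frontMatter = `title: {{.Title | printf "%q"}}` + "\n"

// renderTitle executes the template for one title value.
func renderTitle(title string) (string, error) {
	tmpl, err := template.New("frontmatter").Parse(frontMatter)
	if err != nil {
		return "", err
	}
	var sb strings.Builder
	if err := tmpl.Execute(&sb, struct{ Title string }{Title: title}); err != nil {
		return "", err
	}
	return sb.String(), nil
}

func main() {
	for _, title := range []string{"449: An Unclean Mouse", `He said "hi"`} {
		out, err := renderTitle(title)
		if err != nil {
			panic(err)
		}
		fmt.Print(out)
	}
}
```

The colon in the first title ends up safely inside a double-quoted scalar, and the embedded quotes in the second are backslash-escaped, which YAML's double-quoted style also accepts.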

So, let’s fix the template. I’ll start by creating a new template in layouts/clips/single.html, which is a copy-and-paste of the existing theme post. I’ll then add details of the front matter, such as the thumbnail and audio player. This I can get using the Params template construct. I’ll start by just printing it:

{{ define "main" }}
  {{ $context := dict "page" . "level" 1 "isdateshown" true }}
  {{ partial "heading.html" $context }}
  Audio = {{.Params.audio}}, image = {{.Params.thumbnail}}
  {{ .Content }}
  {{ partial "tags.html" . }}
  {{ partial "comments.html" . }}
{{ end }}

I had to restart Hugo, but that’s coming through. I need to convert these into proper URLs though. I’ll start by trying the absURL template function:

Audio = {{ absURL .Params.audio}}, image = {{ absURL .Params.thumbnail}}

Okay, getting better. Let’s try and turn them into proper HTML elements:

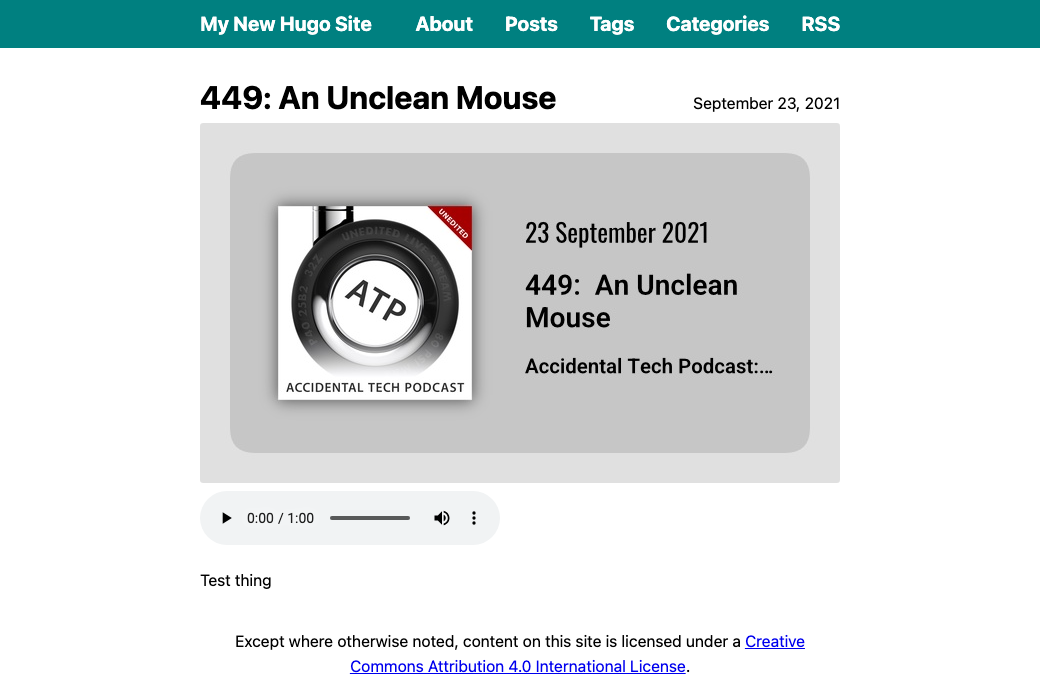

<img src="{{ absURL .Params.thumbnail}}">
<audio src="{{ absURL .Params.audio}}" controls></audio>

Good, the image and audio are coming through. The image looks a little large but I’ll fix that a little later. Oh, and it looks like the clips are showing up on the home page already. That’s a nice touch. I do want to change the clip paths though. At the moment, they’re saved using the auto-incrementing clip ID, which is not ideal. Clip metadata is stored in an SQLite database, and the library I’m using (a Go port of SQLite3 that doesn’t need CGO) has a nasty habit of reusing row IDs. I really should replace this with an auto-generated primary key. But that’s for later.

Right now, I think I’ll use a hash of the episode Guid for the path. ATP seems to have randomly generated hashes but I know that some RSS feeds choose to use URLs, which won’t do for a path. Why the Guid? Well, I want to be able to regenerate a clip if need be, replacing the existing one.

Actually, no, that won’t do. I want to be able to create multiple clips from the same episode. So maybe I’ll use a hash that includes the Guid and clip timestamp. So let’s try that.

clipPathRaw := fmt.Sprintf("%v:%v", clip.EpisodeGUID, clip.ClipStart)
clipPathHash := md5.Sum([]byte(clipPathRaw))
clipPath := hex.EncodeToString(clipPathHash[:])
postFilename := filepath.Join(postDir, fmt.Sprintf("%v.md", clipPath))

MD5 should be fine: these don’t need to be cryptographically safe. Also, md5.Sum returns a [16]byte array, and I keep forgetting how to turn this into a []byte slice. Apparently it’s array[:].

Another quick test. Yeah, it works. The clip paths now look like this: http://localhost:1313/clips/210da5b665c38c809eb1ea481b1b22de/ Not great, but I tend to browse this visually so I think I can live with this.

Okay, next thing is having Blogging Tools generate the clips and commit them to Git. The goal is to have the Hugo site stored in a Git repository and built to a private, undisclosed website. I did this for Nano Journal, which synchronised journal entries to a Git repo after saving them locally. Looking at the code, though, I have no idea how I set up the credentials. There’s nothing in the config hinting at a certificate so I may have done it manually.

I’m also wondering if I can use Go Git for this. I didn’t use it for Nano Journal, as I wanted to use LFS, which Go Git doesn’t support (instead I just shelled out to git). But I’m wondering if I can live without LFS for this.

Okay, let’s try this. I’ll push the current Hugo site to a test repo on my Forgejo instance and see whether I can check it out from Blogging Tools. This will be done as a user that I’ll configure Blogging Tools to act as.

Then I added Go Git to Blogging Tools and created a new provider for Git. Added a method to either pull or clone a remote repository, check out a branch, and pull updates from the repo. I’ve chosen to use the file system for keeping the local workspace so that Blogging Tools doesn’t need to keep cloning the repo whenever there’s a need to add a clip. This I’m just going to keep in ephemeral storage so that when the Docker container gets cycled and the workspace is lost, it’ll just recreate it.

func (p *Provider) CloneOrPull() error {
	var (
		repo *git.Repository
		err  error
	)
	if !isDir(p.workspace) {
		// Workspace doesn't exist. Pull from repo.
		repo, err = git.PlainClone(p.workspace, &git.CloneOptions{
			URL:               p.repoURL,
			RecurseSubmodules: git.DefaultSubmoduleRecursionDepth,
			Auth: &http.BasicAuth{
				Username: p.username,
				Password: p.password,
			},
		})
	} else {
		repo, err = git.PlainOpen(p.workspace)
	}
	if err != nil {
		return fault.Wrap(err)
	}
	worktree, err := repo.Worktree()
	if err != nil {
		return fault.Wrap(err)
	}
	if err := worktree.Pull(&git.PullOptions{
		RemoteURL:         p.repoURL,
		RecurseSubmodules: git.DefaultSubmoduleRecursionDepth,
		ReferenceName:     plumbing.ReferenceName(p.branch),
		Auth: &http.BasicAuth{
			Username: p.username,
			Password: p.password,
		},
	}); err != nil {
		return fault.Wrap(err)
	}
	if err := worktree.Checkout(&git.CheckoutOptions{
		Branch: plumbing.ReferenceName(p.branch),
	}); err != nil {
		return fault.Wrap(err)
	}
	return nil
}

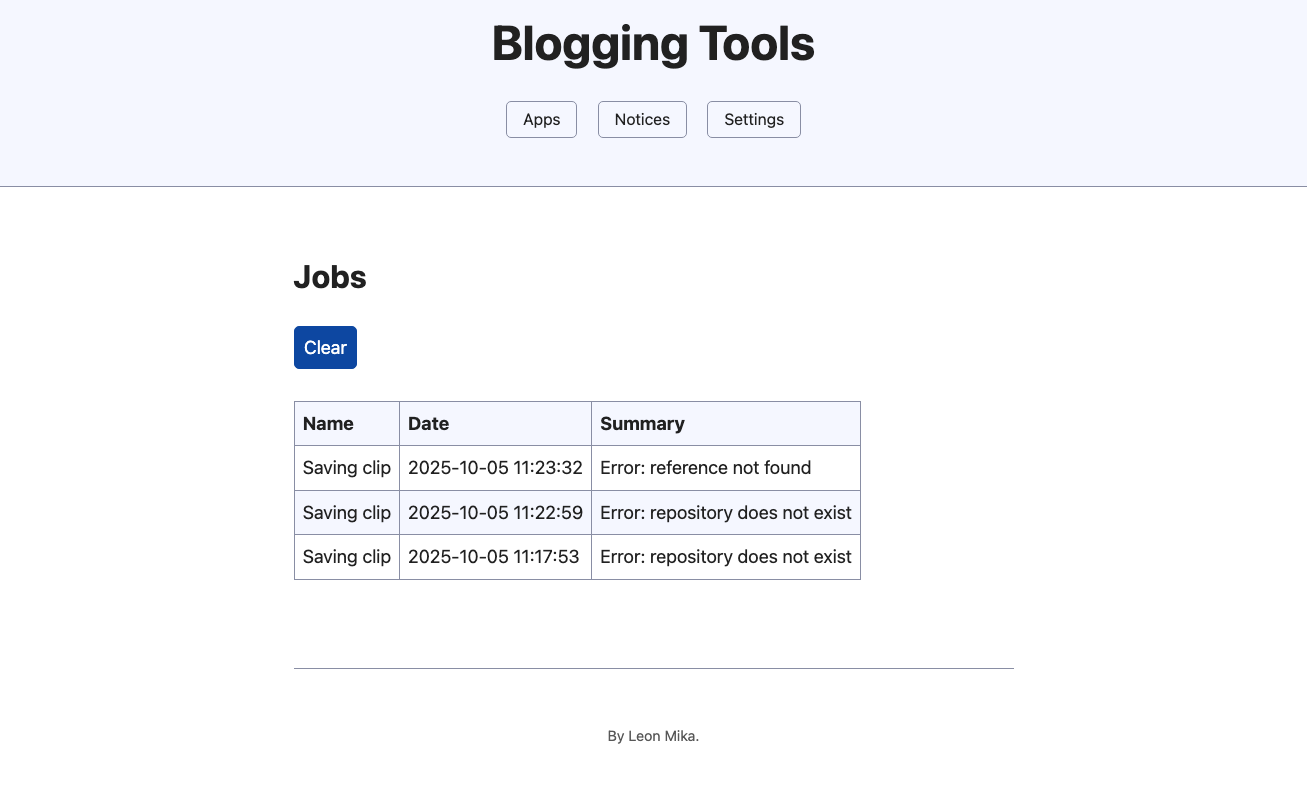

Quick test of this, and I’m seeing a “repository doesn’t exist” error.

Actually, no. That was because of an earlier use of the repository URL when I actually wanted the workspace. But now I’m seeing a “reference not found” error when I try to pull from the origin:

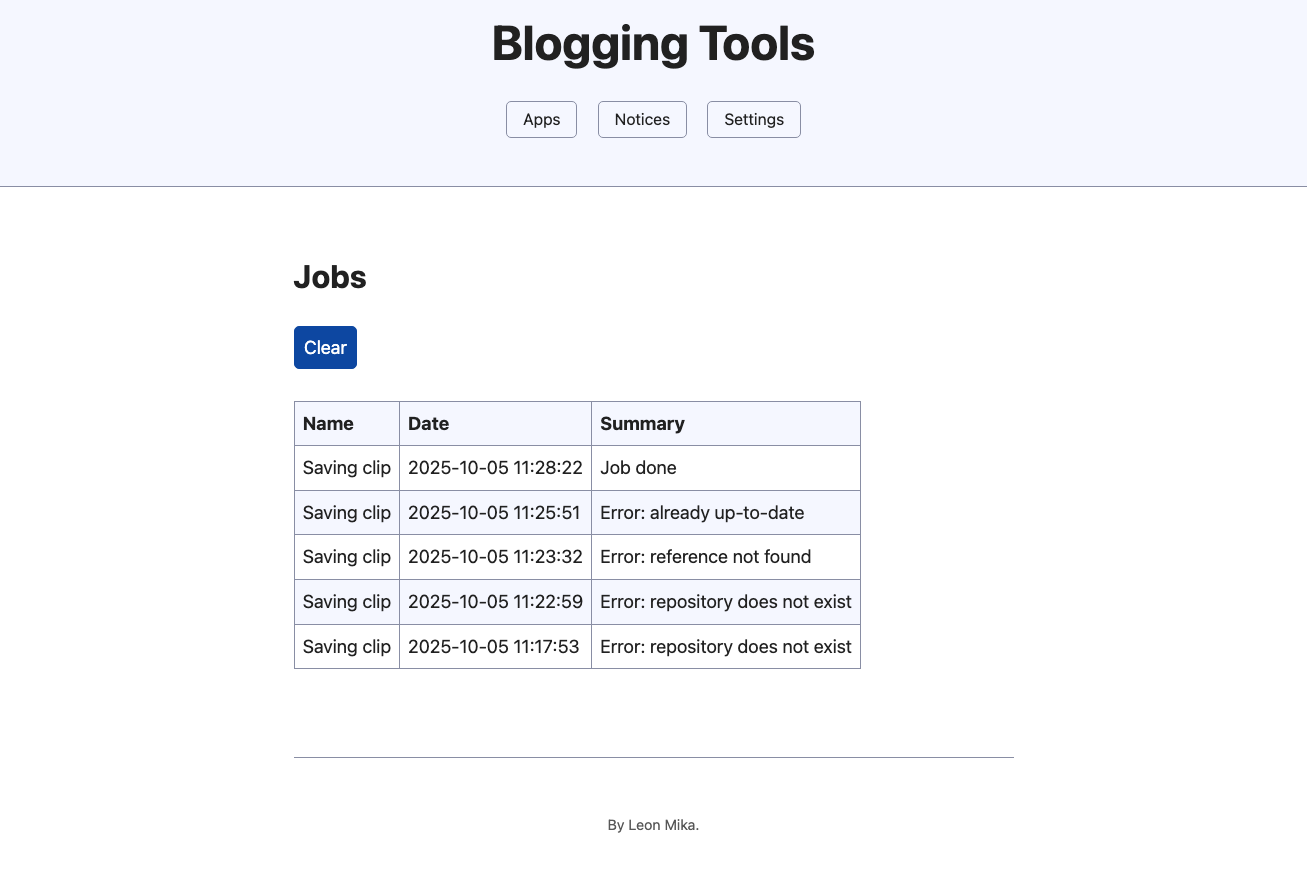

Ah, okay. I should’ve used plumbing.NewBranchReferenceName here. Let’s fix that. Now it’s an “already up-to-date” error. That’s fine, I’ll just add an ignore case here:

if err := worktree.Pull(&git.PullOptions{
	RemoteURL:         p.repoURL,
	RecurseSubmodules: git.DefaultSubmoduleRecursionDepth,
	ReferenceName:     plumbing.NewBranchReferenceName(p.branch),
	Auth: &http.BasicAuth{
		Username: p.username,
		Password: p.password,
	},
}); err != nil {
	if !errors.Is(err, git.NoErrAlreadyUpToDate) {
		return fault.Wrap(err)
	}
}

Another test:

And that worked. I managed to pull changes from remote.

Deleting the workspace and trying a clone. Hmm, that’s strange. I’m seeing an authentication required error, yet it looks like the repository was cloned successfully. Let me try and ignore that error.

if repo == nil && err != nil {
	return fault.Wrap(err)
} else if repo != nil && err != nil {
	log.Printf("warn: error received alongside repo: %v", err)
}

Okay, that worked. It’s not pretty, but I think it’s fine for now.

Now to commit the new files to the repository. A partial failure while staging multiple files (one file is staged but the second one fails for some reason) could leave the workspace in an unsettled state, so I may also need a method of ensuring the workspace is pristine: no file should exist after cloning or pulling that isn’t tracked. So I need to add that first. I don’t see a method to do this on the Worktree type, so basically what I’ll do is reset hard, and then iterate over the untracked files and remove them:

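if err := w.Reset(&git.ResetOptions{
	Mode: git.HardReset,
}); err != nil {
	return fault.Wrap(err)
}
status, err := w.Status()
if err != nil {
	return fault.Wrap(err)
}
for f, s := range status {
	if s.Worktree == git.Untracked {
		// Status paths are relative to the worktree root, so resolve them
		// against the workspace, and wrap the error os.Remove returns
		// rather than the (nil) error left over from Status.
		if err := os.Remove(filepath.Join(p.workspace, f)); err != nil {
			return fault.Wrap(err)
		}
	}
}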

Well, that’s the plan eventually. I’ll just log them for now.

Then it’s just a matter of adding files and committing the changes. First attempt at this failed with an “entry not found” error. That was just a bad path. Adding files is now working, along with committing, and now we’re failing at the push. The reason is that unlike all the other Go Git methods, which were pretty high level, this one leaks a bit of the mechanics of Git, requiring the use of a refspec. I’ve just hard-coded it to a version which will push the local main branch to the origin main branch.

if err := repo.Push(&git.PushOptions{
	RemoteURL: p.repoURL,
	Auth: &http.BasicAuth{
		Username: p.username,
		Password: p.password,
	},
	RefSpecs: []config.RefSpec{
		config.RefSpec(fmt.Sprintf("+refs/heads/%v:refs/remotes/origin/%v", p.branch, p.branch)),
	},
}); err != nil {
	return fault.Wrap(err)
}

Oh, before that, I’m seeing a “cannot create empty commit: clean working tree” error. I’m opening the work tree between staging the files and committing. Is that the problem?

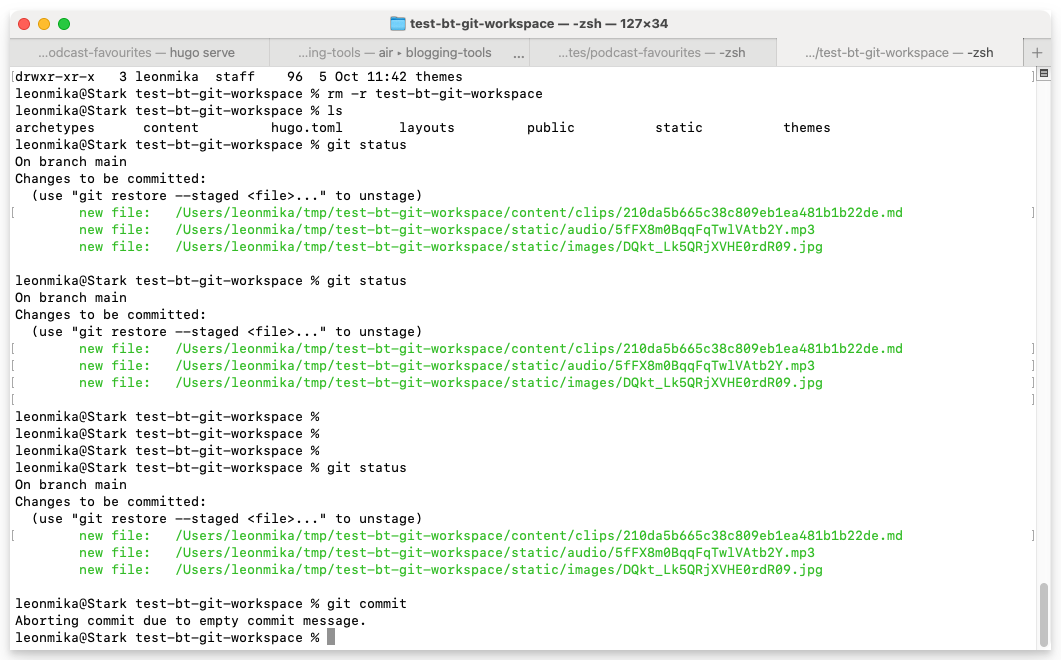

Hmm, no. It might be that I’m adding the full path of the file to the index. Running git status in that repository yielded full path entries, which is very uncharacteristic. Furthermore, trying to unstage them did nothing. I’ll remove the repo directory and change the logic to get the relative path of the file before they’re added:

for _, f := range files {
	relPath, err := filepath.Rel(p.workspace, f)
	if err != nil {
		return fault.Wrap(err)
	}
	if _, err := w.Add(relPath); err != nil {
		return fault.Wrap(err)
	}
}
if _, err := w.Commit(msg, &git.CommitOptions{}); err != nil {
	return fault.Wrap(err)
}

Oh, it was because the files I was trying to add were already added to the repository from earlier in the day, and they weren’t being changed by the job. Let me remove them from the repository and try again.

Okay, it was that. Commits are now working, although pushes don’t seem to be synchronising the commits. When I look at recent changes in Forgejo, it only shows my commits, not the ones created by the service user I made for Blogging Tools. Will try adjusting the refspec to push all heads:

RefSpecs: []config.RefSpec{
	"+refs/heads/*:refs/remotes/origin/*",
},

Nah, that didn’t help. The method is returning no error, yet it doesn’t seem to be synchronising the branches. Will need to learn more about how this all works. But I think that’s something for later.
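One possible explanation, not verified against this setup: in a push refspec the right-hand side names the ref on the remote, so a destination of refs/remotes/origin/... may be writing refs under that namespace on the server rather than moving its branches, which would explain a push that succeeds while the branch in Forgejo stays put. If that is the cause, mapping the local branch to the same branch name on the remote is the thing to try. A minimal sketch of building such a refspec string follows; pushRefSpec is a hypothetical helper.

```go
package main

import "fmt"

// pushRefSpec builds a refspec that maps a local branch onto the branch of
// the same name on the remote. A leading "+" asks for a forced update.
func pushRefSpec(branch string, force bool) string {
	spec := fmt.Sprintf("refs/heads/%s:refs/heads/%s", branch, branch)
	if force {
		return "+" + spec
	}
	return spec
}

func main() {
	fmt.Println(pushRefSpec("main", false)) // refs/heads/main:refs/heads/main
	fmt.Println(pushRefSpec("main", true))  // +refs/heads/main:refs/heads/main
}
```

The result would be passed the same way as before, as config.RefSpec(pushRefSpec(p.branch, false)). Leaving off the "+" means a non-fast-forward push is rejected instead of silently overwriting the remote branch.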