-

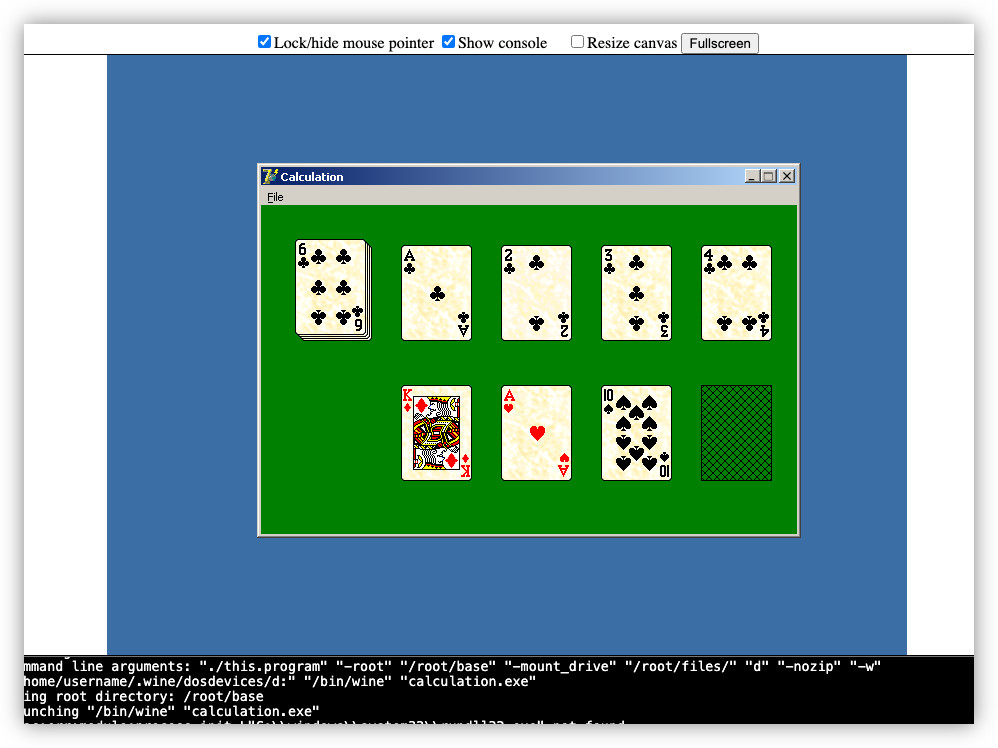

Thought I’d have another go at looking at BoxedWine for making an online archive of my old Delphi projects. There have been some significant improvements since I last looked at it. The projects don’t run fast, but that’s fine, as long as they run.

-

That Which Didn't Make The Cut

I did a bit of a clean-up of my projects folder yesterday, clearing out all the ideas that never made it off the ground. I figured it’d be good to write a few words about each one before erasing them from my hard drive for good. I suppose the healthiest thing to do would be to just let them go. But what can I say? Should a time come when I wish to revisit them, it’d be better to have something written down than not.

-

Side Scroller 95

I haven’t been doing much work on new projects recently. Mainly, I’ve been perusing my archives looking for interesting things to play around with. Some needed light work to get running again, but really I just wanted to experience them. I did come across one old project which I’ll talk about here: a game I called Side Scroller 95. And yes, the “95” refers to Windows 95.

-

Small Calculator Commands

This page documents the extra commands from Small Calculator. These were taken from the source code pretty much as is, but styled to suit the web, with any spelling mistakes fixed. They were retrievable from the application itself by typing “help” followed by the command.

Available Commands

The list of available commands is as follows:

| Command | Description |
|---|---|
| `BLOCK <statements>` | Executes a block of statements |
| `HELP [topic]` | Display help on topic |
| `DEFFNC <function>` | Defines a new function |
| `ECHO <text>` | Displays text on the line |
| `ECHOEXPR <cmd>` | Executes a command and displays the result |
| `EXEC <file>` | Executes a file of commands |
| `FUNCTIONS` | Displays all predefined functions |
| `IF <pred>` | Does a command on condition |
| `RETURN <val>` | Sets the return value |
| `RETURNEXPR <cmd>` | Sets the return value to the result of `<cmd>` |

Type "HELP <command>" to see information on a command.

BLOCK

`BLOCK {<cmd1>} {<cmd2>}` …
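Here is a rough idea of how a "HELP <command>" lookup over a command list like this one might work. It is a hypothetical Python sketch, not the original Delphi source. The `COMMANDS` table and `help_text` function are names made up for illustration, and the output layout is an assumption.

```python
# Hypothetical sketch of a HELP lookup over the Small Calculator command list.
# Not the original Delphi implementation; names and formatting are assumptions.
COMMANDS = {
    "BLOCK": ("BLOCK <statements>", "Executes a block of statements"),
    "HELP": ("HELP [topic]", "Display help on topic"),
    "DEFFNC": ("DEFFNC <function>", "Defines a new function"),
    "ECHO": ("ECHO <text>", "Displays text on the line"),
    "ECHOEXPR": ("ECHOEXPR <cmd>", "Executes a command and displays the result"),
    "EXEC": ("EXEC <file>", "Executes a file of commands"),
    "FUNCTIONS": ("FUNCTIONS", "Displays all predefined functions"),
    "IF": ("IF <pred>", "Does a command on condition"),
    "RETURN": ("RETURN <val>", "Sets the return value"),
    "RETURNEXPR": ("RETURNEXPR <cmd>", "Sets the return value to the result of <cmd>"),
}


def help_text(line: str) -> str:
    """Return help for input like 'help echo', or the full list for a bare 'help'."""
    parts = line.split(maxsplit=1)
    if not parts or parts[0].upper() != "HELP":
        raise ValueError("not a HELP command")
    if len(parts) == 1:
        # Bare HELP: list every command with its one-line description.
        rows = [f"{usage:<20}{desc}" for usage, desc in COMMANDS.values()]
        rows.append('Type "HELP <command>" to see information on a command')
        return "\n".join(rows)
    # HELP <topic>: commands are matched case-insensitively.
    topic = parts[1].strip().upper()
    if topic not in COMMANDS:
        return f"No help available for {topic}"
    usage, desc = COMMANDS[topic]
    return f"{usage}\n  {desc}"


if __name__ == "__main__":
    print(help_text("help echo"))
```

A plain dictionary keeps the command names and their help text in one place, so the command list and the per-command help can't drift apart.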

-

Small Calculator

Date: Unknown, but probably around 2005
Status: Retired

Give me Delphi 7, a terminal control, and an expression parser, and of course I’m going to build a silly little REPL program. I can’t really remember why I thought this was worth spending time on, but I was always interested in little languages (still am), and I guess I thought a desk calculator that used one was worth having. I was using a parser library I found on Torry’s Delphi Pages (the best site at the time to get free controls for Delphi) for something else, and after getting a control which simulated a terminal, I wrote a very simple REPL that tied the two together.

-

Alto

Date: 2020 – present
Status: Rockin'

The year was 2020. The pandemic was just beginning and I was stuck at home, unable to do much of anything. Worse, rumours came around that Google was shutting down Google Play Music, my music player of choice. They were going to force everyone onto their streaming service instead. Oh, they might offer a place for all the music you’ve downloaded (or written) yourself, but not in the first version.

-

Message Simulator Client

Years: 2017 – 2020
Status: Gone

I once worked at a company that was responsible for sending SMS messages via an API. Think one-time passwords when you log into websites, before time-based OTP apps were a thing. And yeah, this did involve some “marketing” messages, although we were pretty strict about outright spam or phishing. Anyway, since sending messages cost us money, we had a simulator set up in our non-prod environments which we used for testing.

-

Build Indicators

AKA: Das Blinkenlights
Date: 2017 – now
Status: Steady Green

I sometimes envy those that work in hardware: being able to build something that one can hold and touch. It’s something you really cannot do with software. And yeah, I dabbled a little with Arduino, setting up sketches that would run on prebuilt shields, but I never got to the point of building something that, however trivial or crappy, I could call my own.

-

Broadtail

Date: 2021 – 2022
Status: Paused

The first project I’ll talk about is Broadtail. I think I’ve talked about this one before, or at least posted a screenshot of it. I started work on it in 2021. The pandemic was still raging, and much of my downtime was spent watching YouTube videos. We were coming up to a federal election, and I was getting frustrated with seeing YouTube ads from political parties that offended me.