-

Moved Blogging Tools from a Linode VPS, hosted in Sydney, to a Hetzner one, and now one of the services it calls out to is returning 404s. I originally thought it was because the service is not available in Germany, but I tried moving it to Singapore next and it’s still returning 404. Very odd.

-

Just got a message from the real-estate agent I bought my current house from that it’s been 10 years since settlement. A worthy milestone. Saying that the time flew by is trite, but it really feels like the last 5 years were just the blink of an eye.

-

In this week’s “I wish Obsidian did a thing, oh wait there might be a plugin for this, ah there is” find: Note Archiver. It adds a new context menu item to move notes into an Archive directory. Works seamlessly with my manual archiving approach.

-

Kind of crazy that we now live in a world where it’s easier and potentially cheaper for restaurants and cafes to display their menu on 80-inch LCD TVs than it would be to just have a sign made.

-

To whom it may concern.

-

🔗 Pixel Envy: The Future of British Television in a U.S. Streaming World

The BBC has problems, but it matters to people. If a country values its domestic media — particularly public broadcasting — it should watch the future of British media closely and figure out what is worth emulating to stay relevant. The CBC is worth it, too.

I’d add that the ABC (Australian Broadcasting Corporation) and SBS are worth it too. Culturally speaking, it’d be a sad day if those were to go away.

-

Don’t underestimate the utility of naming meeting rooms. As someone who was almost late to a meeting in the “conference room next door, but not that one you’re thinking of,” they can be super useful.

-

People at work were always talking about a bánh mì place that was “across the road.” Today, I realised that they weren’t referring to the major road a few doors down from the office, but literally across the street the office is located on (you can see it from the front door). Pretty decent bánh mì.

-

Watching an integration video to learn more about how to work with an API service provider. First slide:

We use HTTP REST, which has 4 verbs: GET, POST, PUT, DELETE. You just used a GET to get this video…

Ah, I guess we’re starting this story at the Big Bang. 😩

-

I know for myself that if an OS vendor started designing their products thinking that I’d want an emotional connection with my computer, I’d start looking for another OS vendor. This is coming from someone who’s turned off Siri on all their Macs. I’m here to use my computer, not make new friends.

-

All the recent changes to UCL are in service of unifying the scripting within Dynamo Browse. Right now there are two scripting languages: one for the commands entered after pressing :, and one for extensions. I want to replace both of them with UCL, which will power both interactive commands and extensions.

Most of the commands used within the in-app REPL have been implemented in UCL. I’m now in the process of building out the UCL extension support, starting with functions for working with result sets, and pseudo-variables for modifying elements of the UI.

Here’s a demo of what I’ve got so far. This shows the user’s ability to control the current result-set and the selected item programmatically. Even after these early changes, I’m already seeing much better support for doing such things than what was there before.

-

📘 Devlog

UCL — Assignment

Some thoughts on changing how assignments work in UCL to support subscripts and pseudo-variables. Continue reading →

-

Unexpected heron sighting. The noisy miners were not expecting it either, and they were not happy.

-

Like the coining of the phrase “Canadian Devil Syndrome” by emailer Joseph on the latest Sharp Tech.

-

Serious Maintainers

I just learnt that Hugo has changed their layout directory structure (via) and has done so without bumping the major version. I was a little peeved by this: this is a breaking change¹ and they’re not indicating it the “semantic versioning” way by going from 1.x.x to 2.0.0. Surely they know that people are using Hugo, and that an ecosystem of sorts has sprung up around it. But then a thought occurred: what if they don’t know? Continue reading →

-

Watched the first semifinal of the Eurovision Song Contest this evening on SBS (the good and proper time for an Aussie). Good line-up of acts tonight: not too disappointed with who got through.

My favourites this evening: 🇮🇸🇪🇪🇪🇸🇸🇪🇸🇲🇳🇱🇨🇾, plus 🇳🇴🇧🇪🇦🇿 which were decent.

-

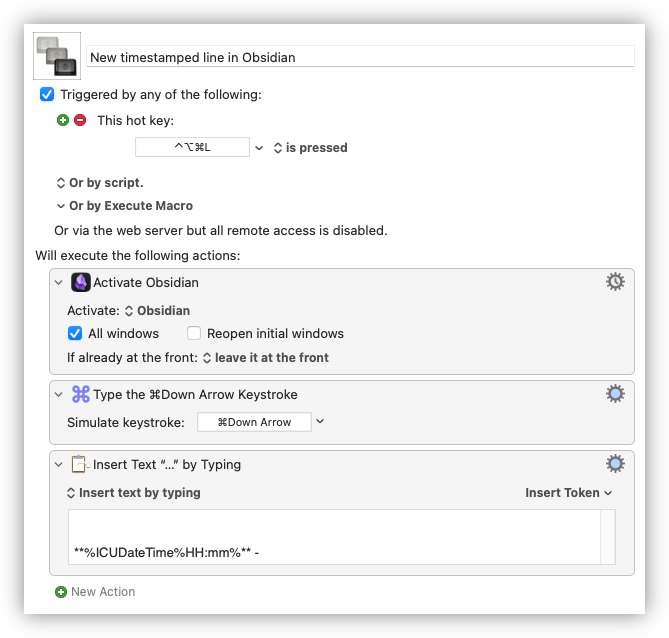

My first automation to assist me with this “issue driven development” approach: a Keyboard Maestro macro which will activate Obsidian, go to the end of the document, and add a new line beginning with the current time.

My goal is to have one Obsidian note per Jira task, which I will have open when I’m actively working on it. When I want to record something, like a decision or passing thought, I’ll press Cmd+Option+Ctrl+L to fire this macro, and start typing. Couldn’t resist adding some form of automation for this, but hey: at least it’s not some hacked-up, makeshift app this time.
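For anyone without Keyboard Maestro, the core of the idea can be sketched in a few lines of Python. To be clear, this is not the macro itself, just a rough illustration of the "new line beginning with the current time" step. The function names, the HH:MM time format, and the trailing space after the time are all my own assumptions.

```python
from datetime import datetime
from pathlib import Path


def with_timestamped_line(text: str, now: datetime) -> str:
    """Return `text` with a new line appended that begins with the time."""
    # Make sure the new entry starts on its own line.
    if text and not text.endswith("\n"):
        text += "\n"
    # HH:MM followed by a space is an assumed format, ready for typing after it.
    return f"{text}{now:%H:%M} "


def append_to_note(note_path: str) -> None:
    """Hypothetical helper: add a timestamped line to the end of a note file."""
    path = Path(note_path)
    existing = path.read_text(encoding="utf-8") if path.exists() else ""
    path.write_text(with_timestamped_line(existing, datetime.now()), encoding="utf-8")
```

Calling `append_to_note` on a note's Markdown file would leave a fresh line starting with the current time at the end of it. Unlike the macro, it won't bring Obsidian to the front or place the cursor there.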

-

Enjoyed watching Simon Willison’s talk about issue driven development and maintaining a temporal document for tasks. Watch the video, but that section can be boiled down to “now write it down.” Will give this a try for the tasks I do at work.

-

📘 Devlog

Blogging Tools — Finished Podcast Clips

Well, it’s done. I’ve finally finished adding the podcast clip feature to Blogging Tools. And I won’t lie to you, it took longer than expected, even after enabling some of the AI features my IDE came with. Along with the complexity that came from implementing this feature, which touched on most of the key subsystems of Blogging Tools, the biggest complexity came from designing how the clip creation flow should work. Blogging Tools is at a disadvantage compared to clipping features in podcast players in that it: Continue reading →

-

I sometimes wish there was a way I could resurface an old post as if it was new, without simply posting it again. I guess I could adjust the post date, but that feels like tampering with history. Ah well.

In other news, my keyboard’s causing me to make spelling errors again. 😜

-

My online encounters with Steve Yegge’s writing are like one of those myths of someone going on a long journey. They’re travelling alone, but along the way, a mystical spirit guide appears to give the traveller some advice. These apparitions are unexpected, and the traveller can go long spells without seeing them. But occasionally, when they arrive at a new and unfamiliar place, the guide is there, ready to impart some wisdom before disappearing again.¹

Anyway, I found a link to his writing via another post today. I guess he’s writing at Sourcegraph now: I assume he’s working there.

Far be it from me to recommend a site for someone else to build, but if anyone’s interested in registering wheretheheckissteveyeggewritingnow.com and posting links to his current and former blogs, I’d subscribe to that.

¹ Or, if you’re a fan of Half-Life, Yegge’s a bit like the G-Man.

-

Gotta be honest: the current kettle situation I find myself in, not my cup of tea. 😏

-

Amusing that I find myself in a position where I have to log into one password manager to get the password to log into another password manager to get a password.

-

Does Google ever regret naming Go “Go”? Such a common word to use as a proper noun. I know the language devs prefer not to use Golang, but there’s no denying that it’s easier to search for.

-

The category keyword test is a go.