-

“Vibe coding” I can take or leave, but this poster’s point on iOS distribution is spot on:

I recently built a small iOS app for myself. I can install it on my phone directly from Xcode but it expires after seven days because I’m using a free Apple Developer account. I’m not trying to avoid paying Apple, but there’s enough friction involved in switching to a paid account that I simply haven’t been bothered.

I had a passing interest in looking at the iPhone SDK when it came out. What steered me towards learning the Android SDK was learning that any app I built for the iPhone had to be distributed via the App Store¹. That wouldn’t work for apps that I built for myself, or maybe a few friends, which is pretty much what I was interested in doing. Such apps are a completely different kettle of fish from something meant for the whole world to use.

I don’t know what iOS is like now, but despite how locked down Android has become compared to those early years, it still seems to me to be much more open to these sorts of apps than iOS.

-

¹ And also having no Mac at the time.

-

Here’s a useful Obsidian plugin. It allows you to use emoji short-codes in your notes, just like Slack and I think some flavours of Markdown. Good for todo lists where emojis could be used to highlight questions or priority items.

-

🔗 Adactio: Journal—Design processing

There’s no doubt about it, using a generative large language model helped a non-designer to get past the blank page. But it was less useful in subsequent iterations that rely on decision-making:

I’ve said it before and I’ll say it again: design is deciding. The best designers are the best deciders.

Another writer coming to the conclusion that the effective use of these AI tools relies on taste.

This is just a passing thought, but it’s interesting to think of these tools acting less like a multiplier and more like an “adder”. Rather than amplifying the skills someone already has, they simply elevate them, like the tide lifting all boats. Maybe it’s a little of both.

Via: Jim Nielsen’s Notes

-

A bit more on Godot this evening, mainly working on pausing the game and the end-of-level sequence. Have got something pretty close to what I was looking for: a very Mario-esque sequence where the player enters a castle, the game starts auto-walking the character, and the level stats show up and “spin” for a bit. Not too bad, although I may need to adjust the timing and camera a little to keep the stats from being unreadable.

-

Not sure what’s going on, but both Safari on the iPad and Vivaldi on my phone have been feeling very sluggish these last couple of days. Could very well be a particular site I visit, but why would I still be experiencing slowdown after closing all tabs? Very strange.

-

I believe gen AI could have a place in software development, but I wouldn’t say that I’m ready to go all in on “vibe coding”. I believe the best utility of gen AI comes from knowing what good code looks like. It needs someone with, if I may use the word here, a sense of taste. And I think the only way to develop that is to be hands on in the craft of writing code. You have to “touch” it, to feel it; almost like how a carpenter feels the grain of a new cabinet. And I don’t think you can get that if you only see the code generated before your eyes.

-

Oof! Something I wrote has gone a little viral. It made it onto Hacker News a few days ago, and has had a bit of traction. But this weekend it’s just exploded. 12,376 views over the last two days and counting. Took me about a year and a half to get to 20k total views, and two days to get to 30k.

-

Stunning day for bocce today. Nice to finally get some autumnal weather. Tournament ended with a draw, so no definitive winner for the 2024 season.

-

Ugh! I’ll use WhatsApp if I must because of network effects (i.e. my friends are there) but let me state for the record that I do not like it, nor the company that owns it. Wonder if I could persuade my friends to move to something else. The network runs deep so it might be a tough sell.

-

I kinda wish I had a nice “bespoke” keyboard. The Microsoft Sculpt I’m using is fine, and it keeps my RSI at bay. But it’s uninteresting. Seeing — and more importantly, hearing — the keyboard others are using at work, it would be nice to have something new.

Although, I guess I could get something for work to replace the Apple Magic Keyboard I tolerate.

-

Pitch for the first act of a romance movie: a barista expresses their love for a customer via latte art. Maybe the customer’s sitting there, wishing for love, maybe with a friend. Then they get their drinks and the friend notices that there’s an awful lot of hearts there. The rest writes itself. ☕️

-

Ugh, using IMDB for anything is just awful. Would love to give Callsheet a try, if only I didn’t have an Android phone.

-

One of the tools I built for work is starting to get more users, so I probably should remove UCL and replace it with a “real” command language. That’s the risk of building something for yourself: if it’s useful, others will want to use it.

I will miss using UCL, if I do have to remove it. Integrating another command language like TCL or Lisp is not easy, mainly because it’s difficult to map my domain to what the language supports. Other languages, like Lua or Python, map more nicely, but they’re awful to use as a command language. Sure, they may have REPLs, but dealing with the syntax is not fun when you’re just trying to get something done. That’s why I built UCL: to be useable in a REPL, yet rich enough to operate over structured data in a not-crappy way (it may not be glamorous, but it should be doing), while easy to integrate within a Go application.

Of course, if I want to continue to use it, it needs some effort put into it, such as documentation. So which one do I want more?

-

This week’s earworm: Yooka-Laylee and the Impossible Lair (Original Game Soundtrack). Might be all the Godot stuff I’m doing at the moment. 🎵

-

I’d be curious to know why Microsoft renamed Azure Active Directory to “Entra.” That name is… not good.

Still wondering this.

-

🔗 U.S. could lose democracy status, says global watchdog

Seems to me like this week was a pivotal time for the US, when Trump started ignoring a court order to stop a deportation. Most places I follow seem to suggest that this was the final guardrail. With this falling, I’m now hearing people (i.e. podcasters) say that the US is no longer a democracy. So that’s all it takes?

-

🔗 Enshittification as a matter of taste

It’s starting to look like taste is increasingly going to be important in this brave new world of slop and Enshittification.

Via: Manton Reece

-

Wow, do not use Confluence for anything you’ll need to export as PDF. The export cannot lay out tables (it does things like slap the header in the middle of one page and the first row on the next), and the right margin is so far in that it crops the prose. Looks sloppy and unprofessional.

-

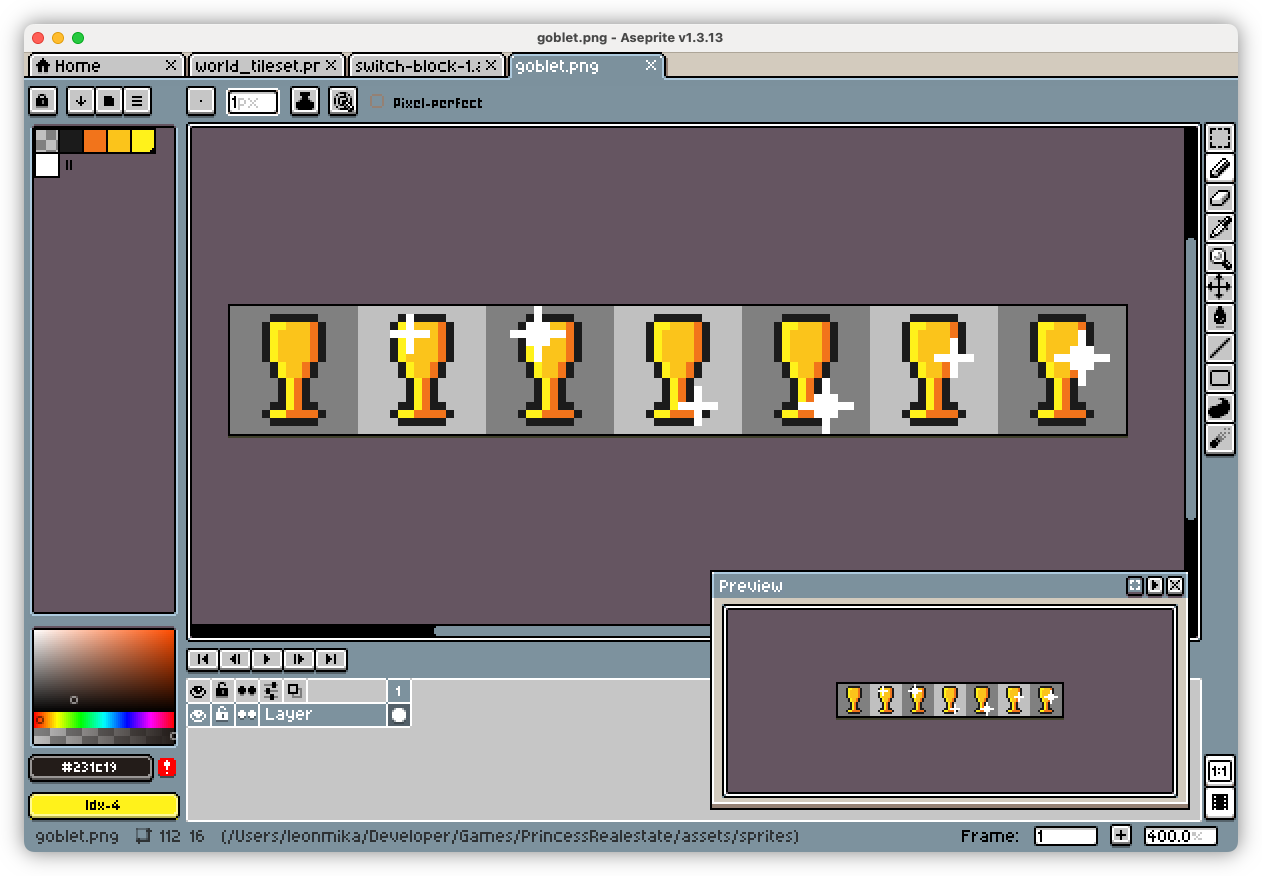

Can definitely recommend Aseprite for making simple pixel artwork. I’m using it now to make some sprites for the Godot game I’m working on. Reminds me of the time I used MS Paint for this during the 90s, back when it was closer to this than what it is now.

-

🔗 Make stuff, on your own, first

First. I don’t believe you create anything truly good with AI without first deeply practicing your craft in its absence. You have to hone your skills by making things without automation in order to perceive and understand what is truly good in your art. Otherwise the tools of automation will own you.

This point might be about ability, but I think it also applies to developing taste. You may get working code from an LLM, but do you know if it’s any good? Is it maintainable? If the shit were to hit the proverbial fan, would you be able to go on to fix it?

I know from my sessions playing with these things, the answer is usually not great. But being someone who’s been in the coding gig for 17 years, I know good code when I see it (or at least I like to think I do). But it took slogging through so much of it to develop that eye.

Via: Jim Nielsen’s Notes

-

The good news is that great writing is already all over the web. It’s just overwhelmed by all this platform-siloed, revenue-focused, engagement bullshit. It’s hidden among the sea of SEO-laden posts that flood the web. It’s bottled up on Medium or Substack, and other platforms that promise the exposure of social media.

It’s such a crime seeing great writing on platforms such as Medium or Substack. Yes, I know, I know: you’ve gotta make a living. But please, can it be on your own platform? Ghost is right there.

Via: rscottjones

-

Don't Be Afraid Of Types

Types in coding projects are good. Don’t be afraid to create them when you need to.

-

Another pro-tip for anyone writing in Go: multiple return values are nice, but don’t overdo it. Functions returning values that are related to each other are fine: think functions doing vector maths in three dimensions. Up to three values returned along with an error is also fine (although I’d argue three is starting to push it). For anything more, consider returning a struct type.
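To make that a bit more concrete, here’s a rough sketch of where I’d draw the line. The names (`Cross`, `LevelStats`, `Summarise`) and the scoring formula are made up purely for illustration; they’re not from any real project.

```go
package main

import (
	"errors"
	"fmt"
)

// Cross returns the three components of a cross product. The values are
// closely related, so a multi-value return reads fine here.
func Cross(ax, ay, az, bx, by, bz float64) (x, y, z float64) {
	return ay*bz - az*by, az*bx - ax*bz, ax*by - ay*bx
}

// LevelStats (hypothetical) groups four values that would otherwise have to
// be returned separately, alongside an error.
type LevelStats struct {
	Coins   int
	Enemies int
	Seconds int
	Score   int
}

// Summarise returns one struct plus an error, rather than four ints plus an
// error. The scoring formula is an arbitrary placeholder.
func Summarise(coins, enemies, seconds int) (LevelStats, error) {
	if seconds <= 0 {
		return LevelStats{}, errors.New("seconds must be positive")
	}
	return LevelStats{
		Coins:   coins,
		Enemies: enemies,
		Seconds: seconds,
		Score:   coins*10 + enemies*50 - seconds,
	}, nil
}

func main() {
	x, y, z := Cross(1, 0, 0, 0, 1, 0)
	fmt.Println(x, y, z)

	stats, err := Summarise(3, 2, 40)
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println(stats.Score)
}
```

The nice thing about the struct is that callers refer to fields by name, so nobody has to remember which of the four ints was the score, and adding a fifth field later doesn’t break every call site.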

-

Pro-tip: merge any changes to gRPC schemas only when you’ve got approvals to merge the implementation. Then merge both PRs at one time. Otherwise, you’ll have gRPC schemas that declare services that are not implemented. This is bad enough for clients but it’s worse for anyone working on the service.