-

🔗 The amazing helicopter on Mars, Ingenuity, will fly no more

Ingenuity has been an incredible achievement. The engineers at NASA should be so proud of themselves. It’s sad to see this chopper grounded now, but seeing it fly for as long as it did has been a joy. Bravo!

-

It’s a bit frustrating that iOS treats all apps as if they’re info-scraping, money-grabbing, third-class citizens. How many times do I have to grant clipboard access to NetNewsWire before they realise that yes, I actually trust the app developer?

-

Why I Use a Mac

Why do I use a Mac? Because I can’t get anything I need to get done on an iPad. Because I can’t type to save myself on a phone screen. Because music software doesn’t exist on Linux. Because the Bash shell doesn’t exist on Windows (well, it didn’t when I stopped using it). That’s why I use a Mac. Continue reading →

-

I’ve been bouncing around projects recently, but last week I settled on one that I’ve been really excited about. This is reboot five of this idea, but I think this time it’ll work because I’m not building it for myself, at least not entirely. Anyway, more to say when I have something to show.

-

Shocking to hear Gruber on Dithering tell that story about the developer’s experience with Apple’s DevRel team. To be told, after choosing to opt out from being on the Vision Pro on day one, that they’re “going to regret it”? Is it Apple’s policy to be offensive to developers now?

-

Broadtail

Date: 2021 – 2022 Status: Paused First project I’ll talk about is Broadtail. I think I talked about this one before, or at least I posted a screenshot of it. I started work on this in 2021. The pandemic was still raging, and much of my downtime was watching YouTube videos. We were coming up to a federal election, and I was getting frustrated with seeing YouTube ads from political parties that offend me. Continue reading →

-

Don’t mind me. Just eyeing off a pigeon that’s looking at me funny.

-

I don’t understand why Mail.app for macOS doesn’t block images from unknown senders by default. They may proxy them to hide my IP address, but that doesn’t help if the image URLs themselves are “personalised”. Fetching the image still indicates that someone’s seen the mail, and for certain senders I do not want them to know that (usually spammers that want confirmation that my email address is legitimate).

-

Do browsers/web devs still use image maps? Thinking of something that’ll have an image with regions that’d run some JavaScript when tapped. If this was the 2000s, I’d probably use the map element for that. Would people still use this, or would it just be a bunch of JavaScript now? 🤔
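For what it’s worth, here’s a rough sketch of what the “bunch of JavaScript” version might look like: keep a list of rectangular regions and hit-test the tap coordinates against it. Everything named here (the `regions` data, the `findRegion` helper, the `#scene` element id) is made up for illustration, and it assumes the image is displayed at its natural size so that `offsetX`/`offsetY` line up with image pixels.

```javascript
// Hypothetical regions, in image pixel coordinates.
const regions = [
  { name: "door", x: 10, y: 20, width: 50, height: 80 },
  { name: "window", x: 100, y: 30, width: 40, height: 40 },
];

// Returns the first region containing the point, or null if none does.
function findRegion(regions, x, y) {
  const hit = regions.find(
    (r) => x >= r.x && x < r.x + r.width && y >= r.y && y < r.y + r.height
  );
  return hit ?? null;
}

// Browser-only wiring; skipped when there is no DOM (e.g. under Node).
if (typeof document !== "undefined") {
  const img = document.querySelector("#scene"); // hypothetical element id
  if (img) {
    img.addEventListener("click", (event) => {
      const region = findRegion(regions, event.offsetX, event.offsetY);
      if (region) console.log(`tapped ${region.name}`);
    });
  }
}
```

The hit-testing is a plain function, so it can be tried out without a page. Scaled or responsive images would need the coordinates converted first, which this sketch doesn’t attempt.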

-

Former site of a cafe I used to frequent. Kind of amazing to see how small the plot of land actually is, when you take out all the walls and furniture.

-

TIL about the JavaScript debugger statement. You can put `debugger` in a JS source file, and if you have the console open, the browser will pause execution at that line, like a breakpoint: `console.log("code"); debugger; console.log("pause here");` This is really going to be useful in the future.
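One way this might come in handy is as a conditional breakpoint: wrap the statement in an `if` so execution only pauses when something looks off. The sketch below is illustrative only; `sumTotals` and the shape of the order objects are invented for the example. When no debugger is attached, the statement has no effect and the code just carries on.

```javascript
// Hypothetical helper: sums order amounts, pausing only on suspicious input.
function sumTotals(orders) {
  let total = 0;
  for (const order of orders) {
    if (!Number.isFinite(order.amount)) {
      // Pauses here only when dev tools (or another debugger) are attached;
      // otherwise this statement does nothing.
      debugger;
      continue; // skip the bad entry
    }
    total += order.amount;
  }
  return total;
}

console.log(sumTotals([{ amount: 5 }, { amount: NaN }, { amount: 7 }]));
```

With the dev tools closed, this logs the sum of the valid amounts and never pauses.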

-

Really enjoyed listening to Om Malik with Ben Thompson on Stratechery today. Very insightful and optimistic conversation.

-

Phograms

Originally posted on Folio Red, which is why this post references "a new blog". Pho-gram (n): a false or fanciful image or prose, usually generated by AI* There’s nothing like a new blog. So much possibility, so much expectation of quality posts shared with the world. It’s like that feeling of a new journal or notebook: you’re almost afraid to sully it with what you think is not worthy of it. Continue reading →

-

Just thinking of the failure of XSD, WSDL, and other XML formats back in the day. It’s amusing to think that many of the difficulties that came from working in these formats were waved away with sayings like “ah, tooling will help you there,” or “of course there’s going to be a GUI editor for it.”

Compare that to a format like Protobuf. Sure, there are tools to generate the code, but it assumes the source will be written by humans using nothing more than a text editor.

That might be why formats like RSS and XML-RPC survived. They’re super simple to understand as they are. For all the others, it might be that if you feel your text format depends on tools to author it, it’s too complicated.

-

I’m starting to suspect that online, multi-choice questionnaires — with only eight hypotheticals and no choice that maps nicely to my preference or behavior — don’t make for great indicators of personality.

-

The AWS Generative AI Workshop

Had an AI workshop today, where we went through some of the generative AI services AWS offers and how they could be used. It was reasonably high level, yet I still got something out of it. What was striking was just how much of integrating these foundational models (something like an LLM that was pre-trained on the web) involved natural language. Like if you’re building a chat bot to have a certain personality, you’d start each context with something like: Continue reading →

-

I don’t know why I think I’ll remember where I saw an interesting link. A few days go by, and when I want to follow it, surprise, surprise, I forgot where I saw it. The world is swimming in bookmarking and read-it-later services. Why don’t I use them?! 🤦♂️

-

Replacing Ear Cups On JBL E45BT Headphones

As far as wearables go, my daily drivers are a pair of JBL E45BT Bluetooth headphones. They’re several years old now and are showing their age: many of the buttons no longer work and it usually takes two attempts for the Bluetooth to connect. But the biggest issue is that the ear cups were no longer staying on. They’re fine when I wear them, but as soon as I take them off, the left cup would fall to the ground. Continue reading →

-

🔗 Let’s make the indie web easier

Inspiring post. I will admit that while I was reading it I was thinking “what about this? What about that?” But I came away with the feeling (realisation?) that the appetite for these tools might be infinite and that one size doesn’t fit all. This might be a good thing.

-

Argh, the coffee kiosk at the station is closed. Will have to activate my backup plan: catching the earlier train and getting a coffee two stations down. Addiction will lead you to do strange things. ☕

-

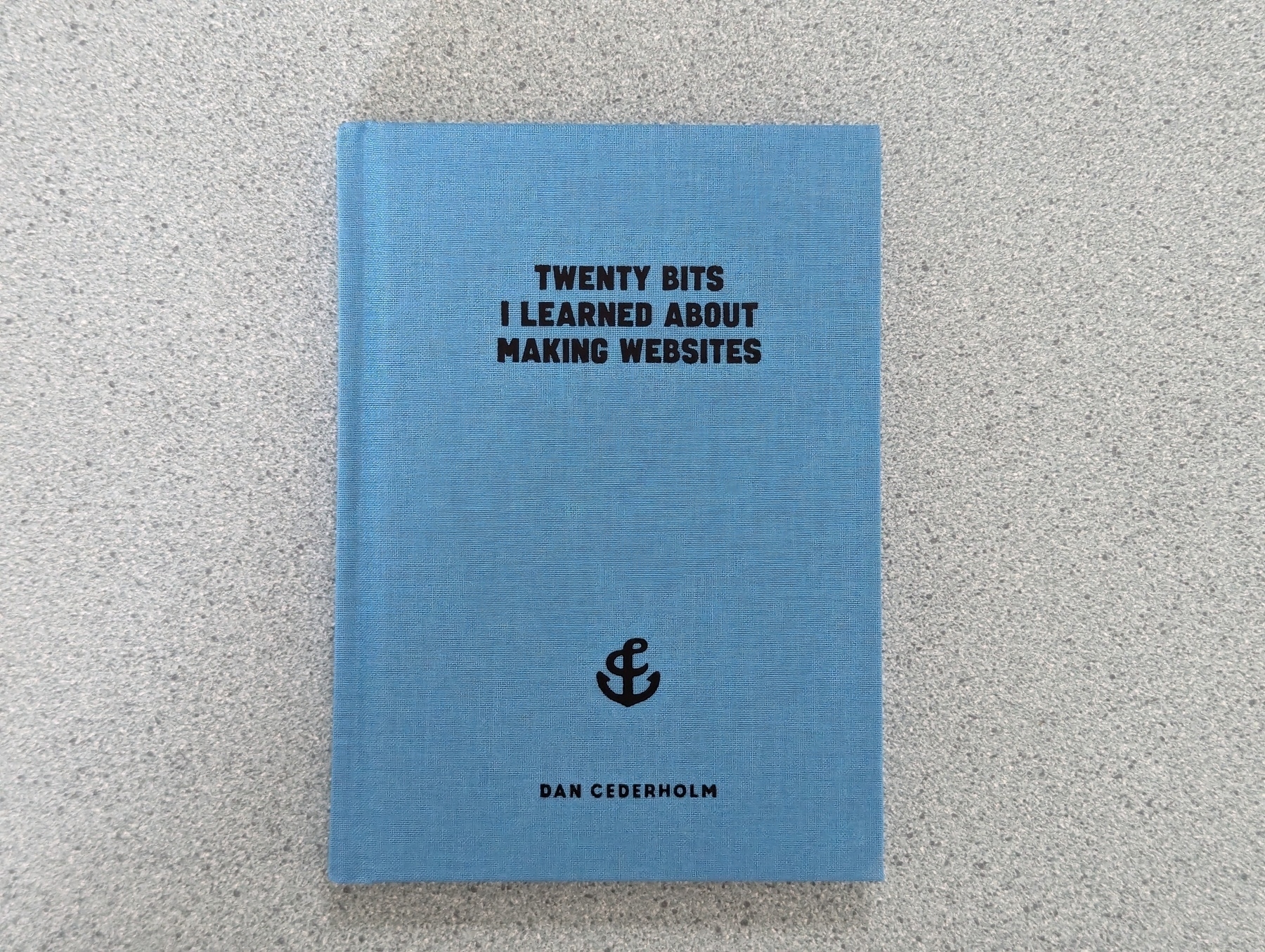

Finished reading: Twenty Bits I Learned about Making Websites by Dan Cederholm 📚

Got this book yesterday and read through it in about an hour. A joy to read, and a pleasure simply to hold.

-

Elm Connections Retro

If you follow my blog, you would’ve noticed several videos of me coding up a Connections clone in Elm. I did this as a bit of an experiment, to see if I’d be interested in screen-casting my coding sessions, and if anyone else would be interested in watching them. I also wanted to see if hosting them on a platform that’s not YouTube would gain any traction. So far, I’ve received no takers: most videos have received zero views, with the highest view count being three. Continue reading →

-

If someone asked me what sort of LLM I’d use for work, I wouldn’t go for a code assistant. Instead, I’d have something that’ll read my Slack messages, and if one looks like a description of work we need to do, it’ll return the Jira ticket for it, or offer to create one if I haven’t logged one yet.

-

On Go Interfaces And Component-Oriented Design

Golang Weekly had a link to a thoughtful post about interfaces. It got me thinking about my use of interfaces in Go, and how I could improve here. I’ve been struggling with this a little recently. I think there’s still a bit I’ve got to unlearn. In the Java world, where I got my start, the principle behind maintainable systems was a component-based approach: small, internally coherent units of functionality that you stick together. Continue reading →