-

If you’ve ever been asked to decide the session duration for a work-based application, choose a time based on how long your users will be at work. The AWS session token I have lasts 8 hours, but I’m at work for 9: 8 hours of work plus a 1 hour lunch break. Come the end of the day, if I’m still doing things in AWS, I’m always prompted to log in again.

Even better would be a session duration of 10 hours, just in case work goes a little long that day.

-

Well, it took 2 years, but the final step of decommissioning a domain is over: the domain has now expired. I feel bad for all the links I’ve now broken (it was a domain that received a little bit of traffic). I just hope all the 301 Permanent Redirects I was sending for 2 years did something.

-

Must be Theme Changing day, as I’ve made one last change that’s been on my wish-list for a while: the ability to page within the post screens themselves. This allows one to page through the entries without having to go back to the Archive section.

Here’s the template to do that, which uses Tiny Theme’s micro-hooks:

```html
<!-- layouts/partials/microhook-after-post.html -->
<nav class="paging">
  <ul>
    {{ if .PrevInSection }}
      {{ if eq .PrevInSection.Params.trip .Params.trip }}
        <li class="previous">
          <a href="{{.PrevInSection.Permalink}}">❮ Previous Post</a>
        </li>
      {{ end }}
    {{ end }}
    {{ if .NextInSection }}
      {{ if eq .NextInSection.Params.trip .Params.trip }}
        <li class="next">
          <a href="{{.NextInSection.Permalink}}">Next Post ❯</a>
        </li>
      {{ end }}
    {{ end }}
  </ul>
</nav>
```

I also added a bit of CSS to space the links across the page:

```css
nav.paging ul {
  display: flex;
  justify-content: space-between;
}
```

The only thing I’m unsure about is whether “Next Post” should appear on the right. It feels a little like it actually should be on the left, since the “Older Post” link in the entry list has an arrow pointing to the right, suggesting that time goes from right to left. Maybe if I removed the arrow from the “Older Post” link, the direction of time would become ambiguous and I could leave the post paging buttons where they are. Ah well, no time for that now. 😉

-

One other thing I did was finally address how galleries were being rendered in RSS. If you use the Glightbox plugin, the titles and descriptions get stripped from the RSS. Or at least they do in Feedbin, where all the JavaScript gets removed and, thus, Glightbox doesn’t get a chance to initialise. I’m guessing the vast majority of RSS readers out there do likewise.

So I added an alternate shortcode template format which wraps the gallery image in a `figure` tag, and adds a `figcaption` containing the title or description if one exists. This means the gallery images get rendered as normal images in RSS. But I think this sacrifice is worth it if it means that titles and descriptions are preserved. I, for one, usually add descriptions to gallery images, and it saddens me to see that those viewing the gallery in an RSS reader don’t get these.

Here’s the shortcode template in full, if anyone else is interested in adding this:

```html
<!-- layouts/shortcodes/glightbox.xml -->
<figure>
  <img src="{{ .Get "src" }}"
       {{ if .Get "alt" }} alt="{{ .Get "alt" }}" {{ end }}
       {{ if .Get "title" }} title="{{ .Get "title" }}" {{ end }} />
  {{ if or (.Get "title") (.Get "description") }}
    <figcaption>
      {{ if .Get "title" }}
        <strong>{{ .Get "title" }} {{ if .Get "description" }} — {{end}}</strong>
      {{ end }}
      {{ .Get "description" | default "" }}
    </figcaption>
  {{end}}
</figure>
```

I’ve also raised it as an MR to the plugin itself. Hopefully it gets merged and then it’s just a matter of updating the plugin.

-

A Summer Theme

Made a slight tweak to my blog’s theme today, to “celebrate” the start of summer. I wanted a colour scheme that felt suitable for the season, which usually means hot, dry conditions. I went with one that uses yellow and brown as the primary colours. I suppose red would’ve been a more traditional representation of “hot”, but yellow felt like a better choice to evoke the sensation of dry vegetation. I did want to make it subtle though: it’s easy for a really saturated yellow to be quite garish, especially when used as a background. Continue reading →

-

A grey and wet visit to Hastings today for lunch and coffee. Met someone at the cafe who brought his two cockatiels along with him, both in little harnesses.

-

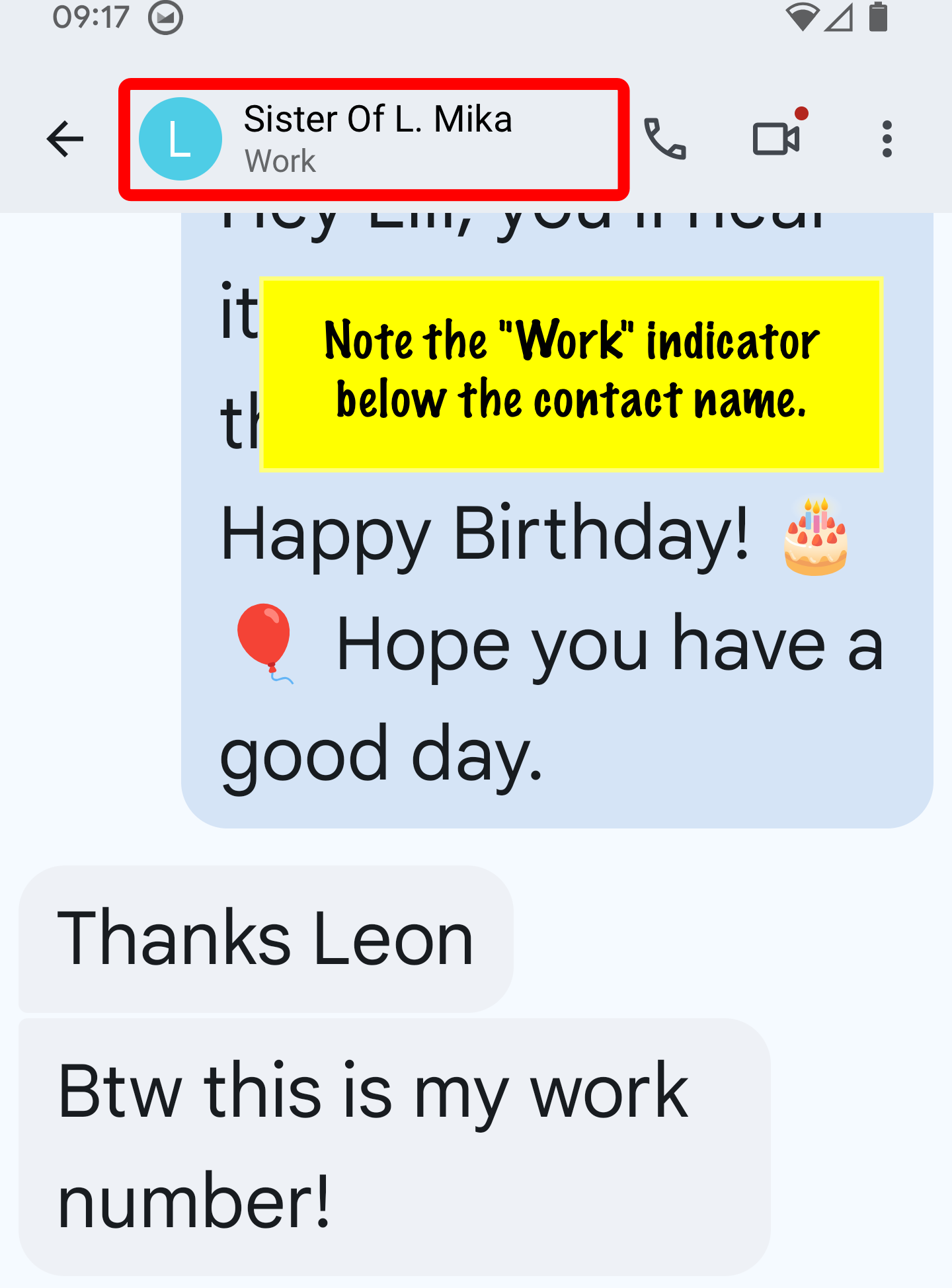

Dear Google,

If an address book has a contact with both a home and work phone number, please indicate which number is which in the Messages app. I just sent a birthday message to my sister’s work phone by mistake.

Something like this would be enough:

Regards,

lmika

-

“Hark! Is that the sound of singing angels I hear?”

“No, it’s just a Hyundai EV reversing.”

Hmm, not sure I’m ready for this reversing EV sound future we’re facing.

-

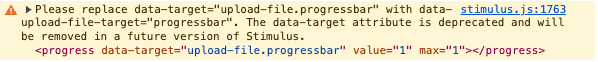

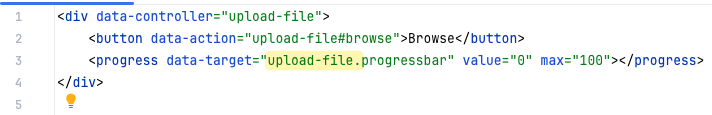

Oh, it turns out it’s an older style of referencing targets and is no longer supposed to be used. That’s a shame.

-

Huh, I was just adding a StimulusJS target attribute when GoLand’s LLM suggested using a dot-based approach to reference the controller in the attribute value, instead of within the attribute name. I gave it a try and it worked.

Is referencing the target this way a new thing? I think I prefer it.

-

Agree with @manton here. I used to be quite religious about Test Driven Development, but I'm less so nowadays. For the simple stuff, it works great; but for anything larger, you know little about how you're going to build something until after you build it. Only then should you lock it in with tests.

-

We might be seeing the light at the end of the tunnel with our performance woes at work. I did some profiling with pprof this morning, and saw that a large amount of time was spent in `context.Value()`. Which is strange, given that this is just a way of retrieving values being carried alongside context instances.

My initial suspicion was that tracing may have been involved. The tracing library we’re using carries spans (like method calls) in the context. These spans eventually get offloaded to a service like Jaeger for us to browse.

We never got tracing working for this service, so I suspect all these spans were building up somewhere. The service wasn’t memory starved, but maybe the library was adding more and more values to the context (which acts like a linked list) and the service was just spending time traversing the list, looking for a value.

This is just speculation, and warrants further investigation, maybe (might be easier just to spend that effort getting tracing working). But after we turned off tracing, we no longer saw the CPU rise to 100%. When we applied load, the CPU remained pretty constant at around 7%.

So it’s a pretty large signal that tracing not being offloaded is somewhat involved.

-

Frustrating day today. The service I’m working on is just not performing. It starts off reasonably well, but then the CPU maxes out and performance degrades to 20%.

It may be the JWT generator, but why would performance degrade like that over time? It could be the garbage collector (this is a Go app). Maybe we’re allocating too many things on the heap? Or maybe we’re hitting some other throttled limit, making too many API requests. But I can’t see how that’ll max out the CPU. It’s not like it’s waiting on IO (or, at least, I can’t remember seeing it max out on IO).

Anyway, looks like another session with the profiler is in the cards.
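
For reference, a profiling session can be as small as the sketch below, which uses the standard `runtime/pprof` package. The `busyWork` and `profileCPU` functions are stand-ins invented for this example, not anything from the actual service.

```go
package main

import (
	"bytes"
	"fmt"
	"runtime/pprof"
)

// busyWork is a stand-in for the code under investigation.
func busyWork(n int) int {
	sum := 0
	for i := 0; i < n; i++ {
		sum += i % 7
	}
	return sum
}

// profileCPU runs fn while a CPU profile is being recorded and returns the
// raw profile bytes, which can be written to a file and opened with
// `go tool pprof`.
func profileCPU(fn func()) ([]byte, error) {
	var buf bytes.Buffer
	if err := pprof.StartCPUProfile(&buf); err != nil {
		return nil, err
	}
	fn()
	pprof.StopCPUProfile()
	return buf.Bytes(), nil
}

func main() {
	profile, err := profileCPU(func() { busyWork(50_000_000) })
	if err != nil {
		fmt.Println("could not start profiling:", err)
		return
	}
	fmt.Printf("captured %d bytes of profile data\n", len(profile))
}
```

For a long-running service, importing `net/http/pprof` and pulling a profile from `/debug/pprof/profile` is probably the more convenient route, since it doesn't require wrapping the code being measured.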

-

So apparently the X’Trapolis trains have some form of battery. I saw one just drop its pantograph, yet the lights inside were kept on. I guess that makes sense, as there needs to be a way to raise its pantograph back up again.

-

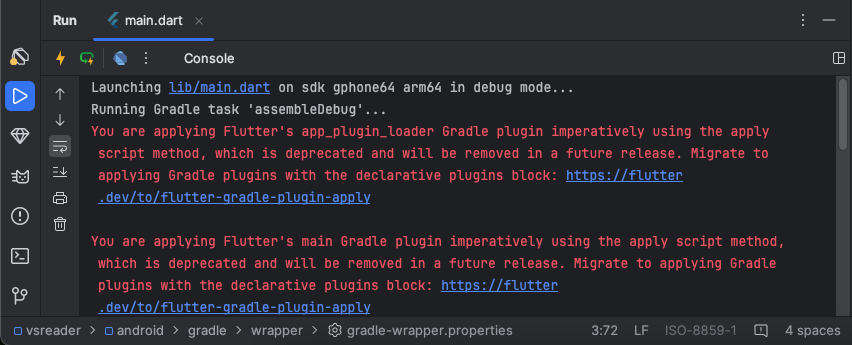

I remember a time when I thought Gradle was the bee’s knees: “no XML, config written in Groovy, how awesome.” Now, it’s the most frustrating part of Android development.

Yeah, I tried that. And it completely broke my build. Got it working in the end by upgrading Java and the Android SDK, but golly it sapped a lot from me. Although, to be fair, I’m not sure how standard the Gradle build is for a typical Android project. I guess it’s better than when they were using Ant.

Anyway, kids: just use Maven. Work through the XML. It’s just a better build system.

-

🧑‍💻 New post on TIL Computer: Link: Probably Avoid Relying On Error Codes To Optimistically Insert In Postgres

-

Slippery when wet.

Seriously, watch yourself when walking through that subway on a wet day. That tiled floor is treacherous.

-

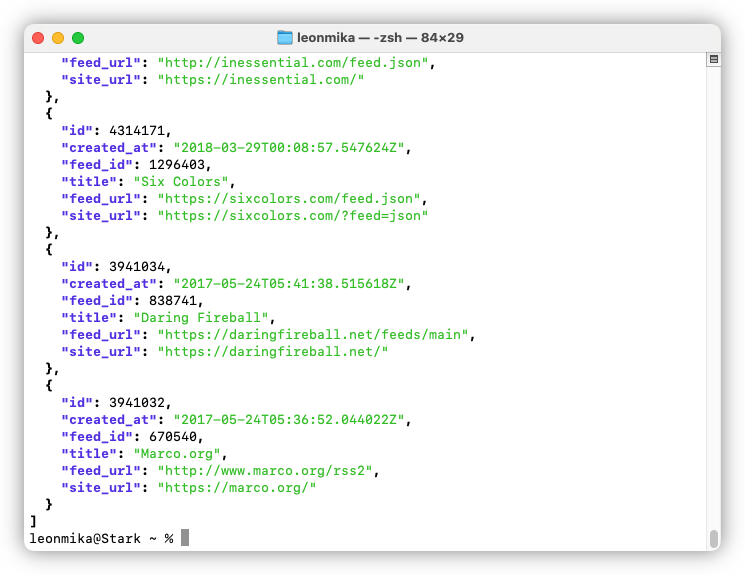

I was reading through the feeds in my Feedbin account a couple of weeks ago, as one does, and a passing curiosity grabbed me: when did I actually sign up to Feedbin, and what were my first subscriptions? I’m not sure if it’s possible to get this information from the frontend, but it turns out you can get it from Feedbin’s API. So I plugged in my auth details and took a look.

My first two subscriptions were back on 24 May 2017, for Marco Arment and Daring Fireball, although I’m sure there were others that I’ve since removed. I’m taking that to be the date I actually signed up to Feedbin, and I suspect that these two were part of a collection of feeds that I moved from the older RSS reader I was using at the time¹.

I won’t go through every subscription I have but I will point out a few interesting ones. For instance, I thought I subscribed to Manton Reece much earlier, but apparently it was only on the 6th March 2020. I subscribed to Seth Godin on the 8th December 2020. And the latest subscription was added only a few weeks ago, on the 10th of November of this year.

All up, I’m currently subscribed to 64 feeds. Now, if only I could remember how I discovered these feeds.
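
For anyone wanting to try the same lookup, here is a rough Go sketch. The endpoint URL, the use of HTTP Basic auth, and the JSON field names (`title`, `feed_url`, `created_at`) are assumptions based on my reading of Feedbin's public API docs, and the `FEEDBIN_USER` and `FEEDBIN_PASS` environment variables are made up for the example, so check the docs before relying on any of it.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"os"
	"sort"
	"time"
)

// Subscription holds the fields of interest from a subscription record.
// The JSON field names are assumptions taken from Feedbin's API docs.
type Subscription struct {
	Title     string    `json:"title"`
	FeedURL   string    `json:"feed_url"`
	CreatedAt time.Time `json:"created_at"`
}

// parseSubscriptions decodes a JSON array of subscriptions and sorts them
// oldest first.
func parseSubscriptions(data []byte) ([]Subscription, error) {
	var subs []Subscription
	if err := json.Unmarshal(data, &subs); err != nil {
		return nil, err
	}
	sort.Slice(subs, func(i, j int) bool {
		return subs[i].CreatedAt.Before(subs[j].CreatedAt)
	})
	return subs, nil
}

// fetchSubscriptions calls the (assumed) subscriptions endpoint using HTTP
// Basic auth and returns the subscriptions, oldest first.
func fetchSubscriptions(user, pass string) ([]Subscription, error) {
	req, err := http.NewRequest(http.MethodGet, "https://api.feedbin.com/v2/subscriptions.json", nil)
	if err != nil {
		return nil, err
	}
	req.SetBasicAuth(user, pass)

	client := &http.Client{Timeout: 10 * time.Second}
	resp, err := client.Do(req)
	if err != nil {
		return nil, err
	}
	defer resp.Body.Close()

	if resp.StatusCode != http.StatusOK {
		return nil, fmt.Errorf("unexpected status: %s", resp.Status)
	}
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		return nil, err
	}
	return parseSubscriptions(body)
}

func main() {
	user, pass := os.Getenv("FEEDBIN_USER"), os.Getenv("FEEDBIN_PASS")
	if user == "" || pass == "" {
		fmt.Println("set FEEDBIN_USER and FEEDBIN_PASS to run this")
		return
	}
	subs, err := fetchSubscriptions(user, pass)
	if err != nil {
		fmt.Println("fetch failed:", err)
		return
	}
	for _, s := range subs {
		fmt.Println(s.CreatedAt.Format("2 Jan 2006"), s.Title)
	}
}
```

Sorting by `created_at` puts the earliest subscriptions at the top, which is all that's needed to answer the "what did I subscribe to first" question.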

-

1. That was hand-built, and I should dig it out and see if I can get it working again, just to remind myself how it looked. I do know that it used Google’s Material design language.

-

Delta of the Defaults 2024

It’s a little over a year since Duel of the Defaults, and I see that Robb and Maique are posting their updates for 2024, so I thought I’d do the same. There’ve only been a few changes since last year, so much like Robb, I’m only posting the delta: Notes: Obsidian for work. Notion for personal use if the note is long-lived. But I’ve started using Micro.blog notes and Strata for the more short-term notes I make from day to day. Continue reading →

-

Wish Go had Java’s `::` operator, where you could convert a method into a function pointer with the receiver being passed in as the first argument. Would be super useful for mapping slices.

-
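
On the wish above for something like Java’s `::`, Go’s method expressions look like they cover most of it: writing `T.Method` yields a plain function whose first argument is the receiver. A minimal sketch, where the `Post` type and the generic `Map` helper are made up for illustration:

```go
package main

import (
	"fmt"
	"strings"
)

// Post is a hypothetical type used only for this illustration.
type Post struct {
	Title string
}

// Slug returns a lowercase, hyphenated form of the title.
func (p Post) Slug() string {
	return strings.ReplaceAll(strings.ToLower(p.Title), " ", "-")
}

// Map applies fn to every element of in and returns the results.
func Map[T, U any](in []T, fn func(T) U) []U {
	out := make([]U, 0, len(in))
	for _, v := range in {
		out = append(out, fn(v))
	}
	return out
}

func main() {
	posts := []Post{{Title: "A Summer Theme"}, {Title: "Delta of the Defaults 2024"}}

	// Post.Slug is a method expression: a func(Post) string whose first
	// argument is the receiver.
	slugs := Map(posts, Post.Slug)
	fmt.Println(slugs) // [a-summer-theme delta-of-the-defaults-2024]
}
```

For methods with pointer receivers the form is `(*T).Method`, which gives a function taking a `*T` as its first argument.

-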

Upgraded my work laptop to Sequoia. “Love” the experience that this new version provides, especially the mouse-and-patience exercise I get in the morning. 👎

<img src="https://cdn.uploads.micro.blog/25293/2024/cleanshot-2024-11-26-at-07.30.252x.png" width="600" height="541" alt="Three permission requests stacked up, with the top one displayed asking if an app called “Obsidian” can find devices on local networks, with options to “Don’t Allow” or “Allow”.">

-

🧑‍💻 New post on TIL Computer: Don’t Use ‘T’ RDS Instance Types For Production

-

Looking for my next project to work on. I have a few ideas but my mind keeps wandering back to an RSS reader for Android. I read RSS feeds quite frequently on my phone and Feedbin’s web app is great, but I think I prefer a native app.

I just need to get over the hump of setting up Android Studio. There’s something about starting a new project there that just sucks the life out of you.

-

I wonder if anyone can get anything out of Fandom these days. I went to it yesterday for something, and it was so full of ads that it was barely usable. Got out of there as soon as I could. Didn’t spend a second longer than I needed to. I certainly wouldn’t choose to casually browse that site.

-

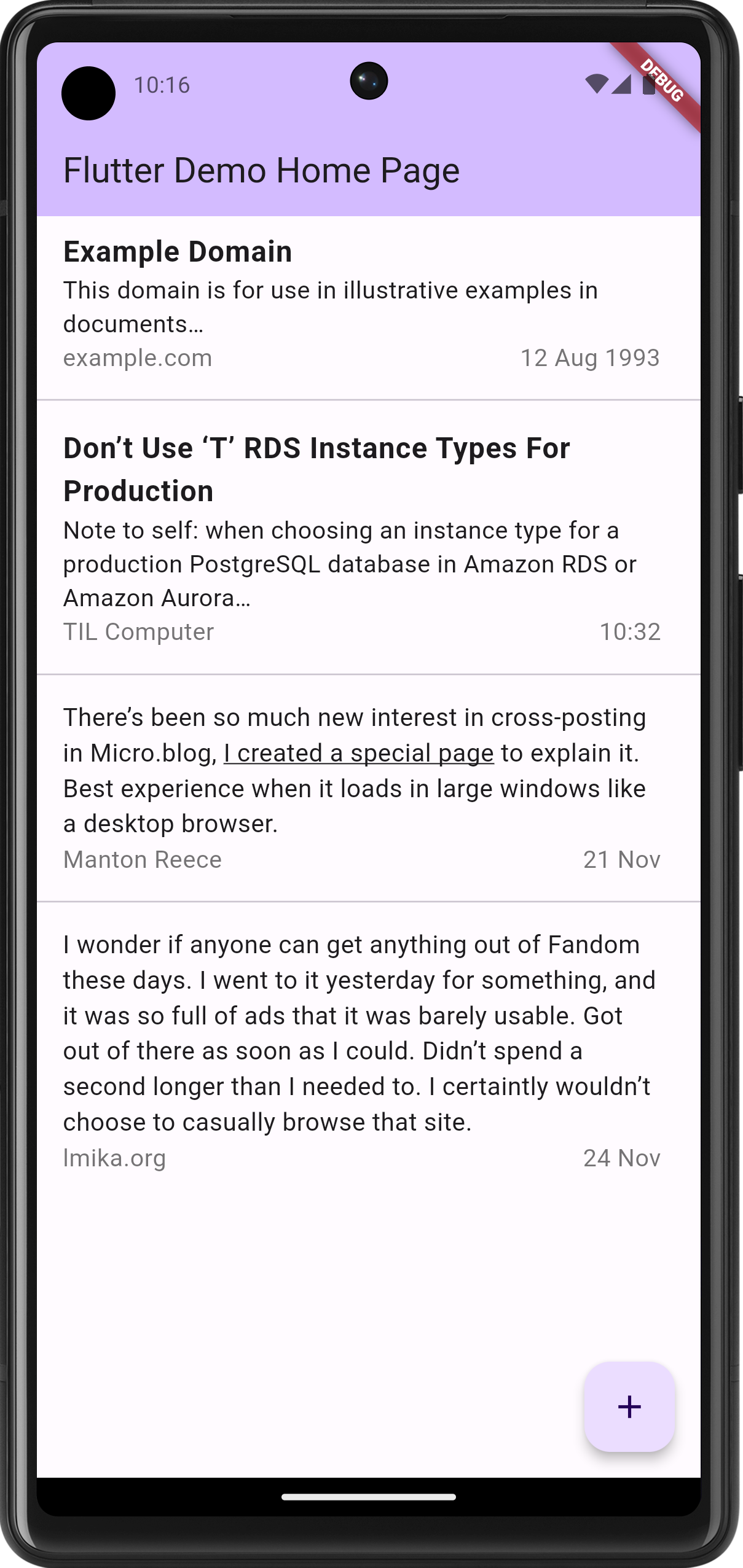

Playing around with some possible UI design choices for that Android RSS Feed Reader. I think I will go with Flutter for this, seeing that I generally like the framework and it has decent (although not perfect) support for native Material styling.

Started looking at the feed item view. This is what I have so far:

Note that this is little more than a static list view. The items come from nowhere and tapping an item doesn’t actually do anything yet. I wanted to get the appearance right first, as how it feels is downstream from how it works.

The current plan is to show most of the body for items without titles, similar to what other social media apps would show. It occurred to me that in doing so, people wouldn’t see links or formatting in the original post, since they’ll be less likely to click through. So it might be necessary to bring this formatting to the front. Not all possible formatting, mind you: probably just strong, emphasis, and links. Everything else should result in an ellipsis, encouraging the user to open the actual item.

Anyway, still playing at the moment.