-

I posit to you that those who complain about the state of the web haven’t actually experienced the web, or not enough of it. They spend all their time in closed platforms, consuming algorithmic feeds, neither making nor following links, and get turned off by the enshittification of it all. It’s like travelling overseas, spending all your time at or around the hotel, coming home, then telling others that your trip was pretty boring.

-

Don’t want to say much about Apple’s lawsuit against Jon Prosser other than that it’s not a good look.

Might be that Apple has lost all sense of optics, as evidenced by the string of “successes” they’ve had with their recent advertising. So let me clue them in on something: Apple, you are no longer the underdog. If I see headlines saying “Apple sues X” where X is anything other than Microsoft, Google, Meta, or the US Government, I’m just going to think that you’re throwing your weight around trying to hurt or stop someone way smaller than you.

I’m sure Apple thinks he deserves it. Might be that he does. But that’s the downside of money and power: you come off as the big guy, and it’s hard to garner any sympathy if you’re going after someone smaller, no matter how justified (you think) it is.

Anyway, that’s all I’ll say about this for now.

-

It’s funny how blogging can feel like you’re writing to everyone and no-one at the same time.

-

Nothing like ending the week with a somewhat involved Friday release to production.

![Meme of the ending screen of Metroid 1, in which a pixelated character stands on a textured surface against a starry night sky, accompanied by a congratulatory and cautionary message in bold, yellow text. The message is as follows: Great!! You fulfiled [sic] your mission. It will revive peace in Slack. But, it may be invaded by the other releases. Pray for a true peace in Slack!](https://cdn.uploads.micro.blog/25293/2025/friday-release-meme.png)

-

Listening to a podcast where the guest recounted a story of a young person saying that they “watch a lot of podcasts,” and I just sighed with despondency.

-

Someone needs to teach washing machine microcontroller engineers about non-volatile memory, so they can build machines that remember the last cycle and wash options. At the very least, they could be better at selecting good defaults. “Cottons” is not a good default if “everyday” is the mode I want.

-

I keep forgetting that the written word has different expectations around contractions. Out loud, I’d probably say something that sounds like “the same round here”. But I need to remember to write that as around or ’round. Being ’round here is different from being round here (but still applicable 😉).

-

About 9 years ago, while visiting the USA on a work trip, I went to the Elephant & Castle in Washington, DC. Someone recommended it to me, but I was frankly underwhelmed: not what you’d typically get at a pub around here. Today I learnt that it’s actually a chain of pubs, which probably explains it.

-

Just one of those weeks where nothing is working at all. Computers, transport, the gym. Probably should just write this one off and regroup next week.

-

Eyeing this course on screencasting. Might be a good skill to develop. I’ve recorded a few screencasts for work in the past, and not only did I find them a good reference to share with others, they were fun to do.

Via: Chris Coyier

-

Should turn in my software development credentials right now. Thought I’d fixed a bug, but the fix failed QA because it didn’t work when multiple items were submitted. I hadn’t tested submitting multiple items, only singles. Effin’ amateur hour! 🤦

-

🔗 MacSparky: A Remarkable, Unremarkable Thing

We often talk about how people can’t put their phones down while in line at the market, but what about during moments of joy? When taking in a theme park with your family, at the beach, or on vacation? Those moments are found solely in your immersion in the now.

A thought-provoking post.

-

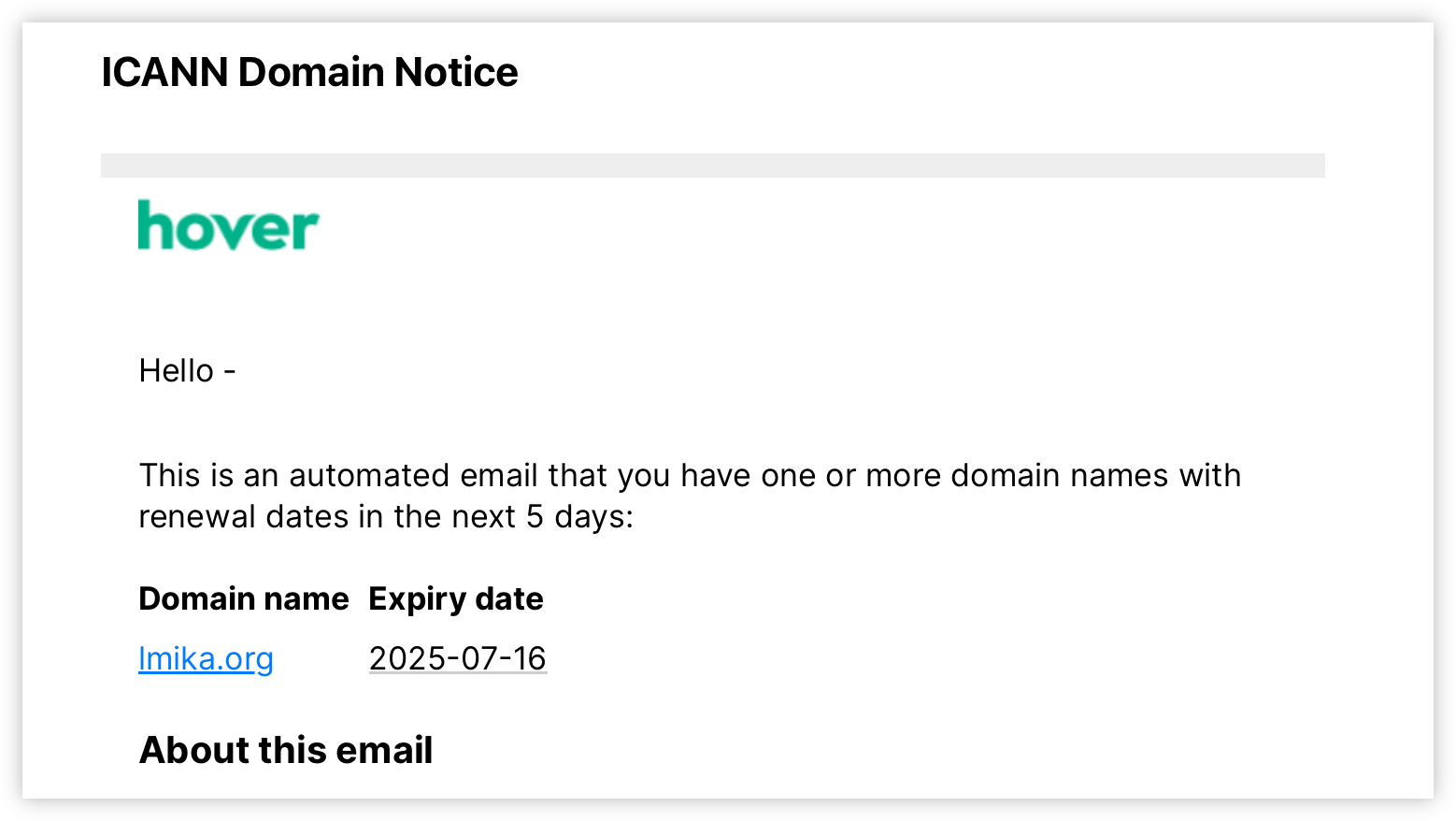

It’s striking how few Substack writers have set up their own domain name. Every link but one in the post I’m reading is something.substack.com. Is it just too difficult to do? I thought that service attracted those who want to go out on their own. This feels like going halfway.

-

Oof! Interviewing always takes it out of me. And I’m not even the one being interviewed. 😩

-

It’s DNS. It’s always DNS. It always will be DNS.

-

Train line outage is still ongoing. Apparently there’s been a derailment due to pantographs getting entangled in the overhead wires. So the new rolling stock experience continues. Today’s is a brief ride in a Siemens, complete with the driver pointing out landmarks of interest.

-

Lucked into getting a Comeng train. Haven’t ridden one of these in years. They’re being retired, so this may be one of the last times I get to ride one.

-

Making lemonade out of the lemon that is a total outage of my train line by exploring areas of the network that are completely new to me.

-

I feel for anyone that needs to write any code that deals with audio. Hope you like big arrays of numbers and small quanta of time.

-

Ooh, has it been 5 years already?

-

Overheard the barista talking about a YouTube video of someone who owns an espresso bar taking a minute to make a coffee. “Why does it take him a minute to make a coffee? I can make 5 coffees in that time. I understand pride in your work, but if I were waiting for that…”

They went on to debate the expectations of customers at a cafe versus those going to such fancy establishments. But if it were me, I wouldn’t have the patience to wait a full minute for a coffee. Or if I did, it had better be the best damn coffee I’ve ever had.

-

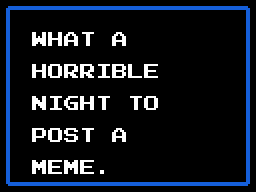

An online tool for generating images of message screens from retro ’90s games. Quite a selection of classic game message screens. Now, my egotistical side thinks it beneath me to use this for posting lame memes here. To that part of me I say…

Via: Robb Knight

-

📘 Devlog

Godot Project — Some Feelings

Progress on the Godot game has been fulfilling yet tinged with doubt about its value and purpose. Continue reading →

-

Amazing episode of Dithering this week. Loved the discussion on why Apple is so driven to remove all software chrome. I’d never considered that it was because of how the various teams are organised (spoilers in the included clip).

-

Everything’s been so quiet around here. The school holidays explain it in part, but I wasn’t expecting it to be this quiet. Is everyone away? Or maybe they’re just staying indoors trying to keep warm. In any case, it makes going to the cafe and being the only customer a little awkward.