-

Working with PostgreSQL is an absolute joy. Such an amazing database. Just goes to show that the fun tech out there is not always the new and shiny. The battle-hardened, featureful, bread-and-butter tools that tend to get overlooked can be just as good (Linux falls into that category as well).

-

👨💻 New post on AWS over at Coding Bits: AWS Secrets Manager Cached Credentials Error

-

Attempting to give head scratches while recording video is more difficult than it looks. 🦜

-

An Unfair Critique Of OS/2 UI Design From 30 Years Ago

A favourite YouTube channel of mine is Michael MJD, who likes to explore retro PC products and software from the 90s and early 2000s. Examples of these include videos on Windows 95, Windows 98, and the various consumer tech products designed to get people online. Can I just say how interesting those times were, where phrases such as “surfing the net” were thrown about, and where shopping centres were always used to explain visiting websites. Continue reading →

-

I found this video on the failure of the Star Wars Hotel by Jenny Nicholson to be absolutely fascinating. A great example of Disney enshittification and promising more than they can deliver. Many of her other videos are great as well (I actually went on a binge session over the weekend). 📺

-

👨💻 New post on Go over at Coding Bits: Disabling Parallel Test Runs In Go

-

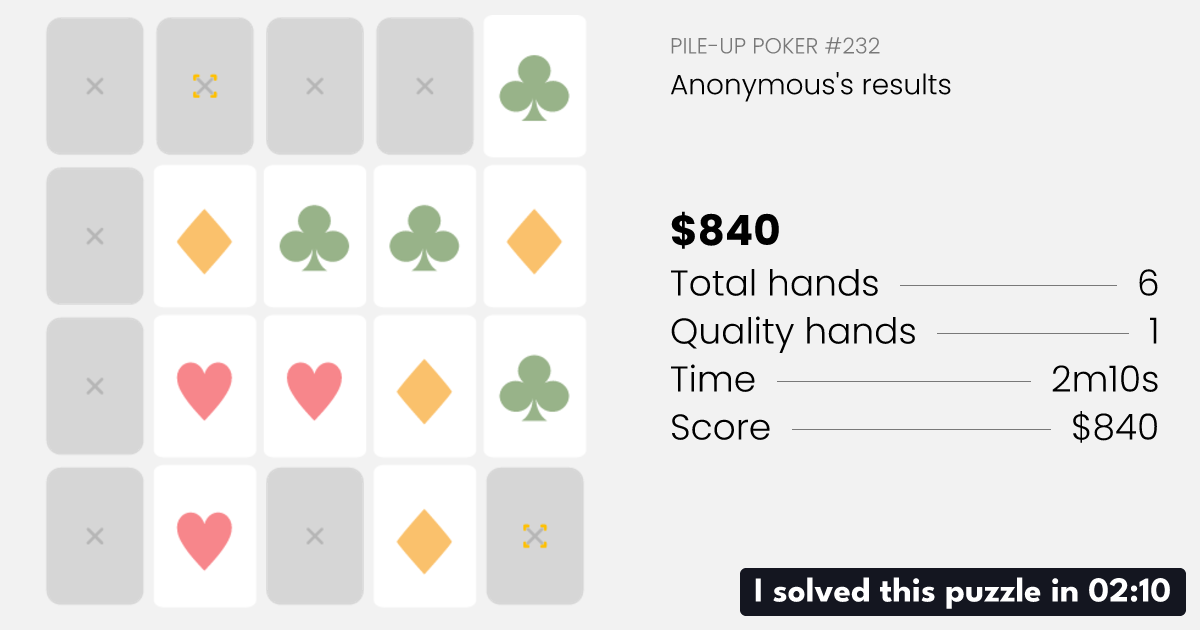

My Pile-Up Poker result for today = $840.00. Decent result for a first game.

Also, I’m not sure if sharing my actual “solution” is considered a spoiler, so click through to see that.

-

Some More Thoughts On Unit Testing

Kinda want to avoid this blog descending into a series of “this is wrong with unit testing” posts, but something did occur to me this morning. We’ve kicked off a new service at work recently. It’s just me and one other developer working on it at the moment, and it’s given us the opportunity to try out the “mockless” approach to testing I ranted about a couple of weeks ago (in fact, the other developer is the person I had that discussion with). Continue reading →

-

I’m not on Threads, but I do sometimes click through to posts shared on Mastodon, and I’m a little confused by the web-based video player. It auto-plays, which is annoying enough, but it does so with the sound off and there’s no way to pause or scrub back to the beginning. Is that by design? Do I just have to refresh the page whenever I want to watch something so I don’t miss the beginning? Very strange.

-

Must say I’m really enjoying M. G. Siegler’s new blog Spyglass. I’ve liked pretty much every post I’ve read so far. Definitely worth subscribing to.

-

“What about your Savoys, Mrs. D?”

-

A black swan event.

(And yes, I took this photo just so I could use this caption.)

-

Returned to Tuggeranong this morning for breakfast and a walk around the lake. I really enjoy going to “Tuggers” when I’m in the ACT. I can’t quite explain it, but I always get New Zealand vibes whenever I visit.

-

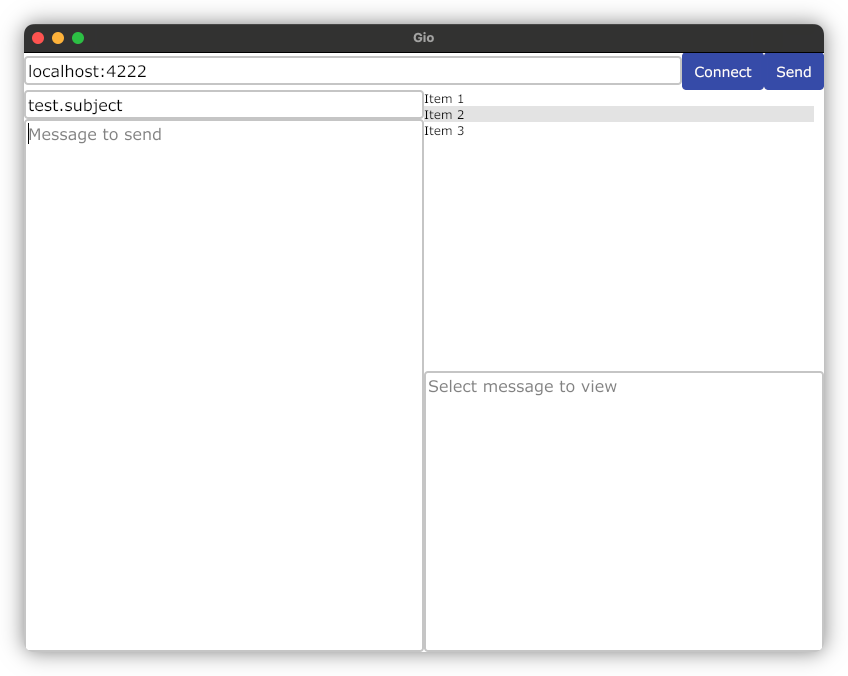

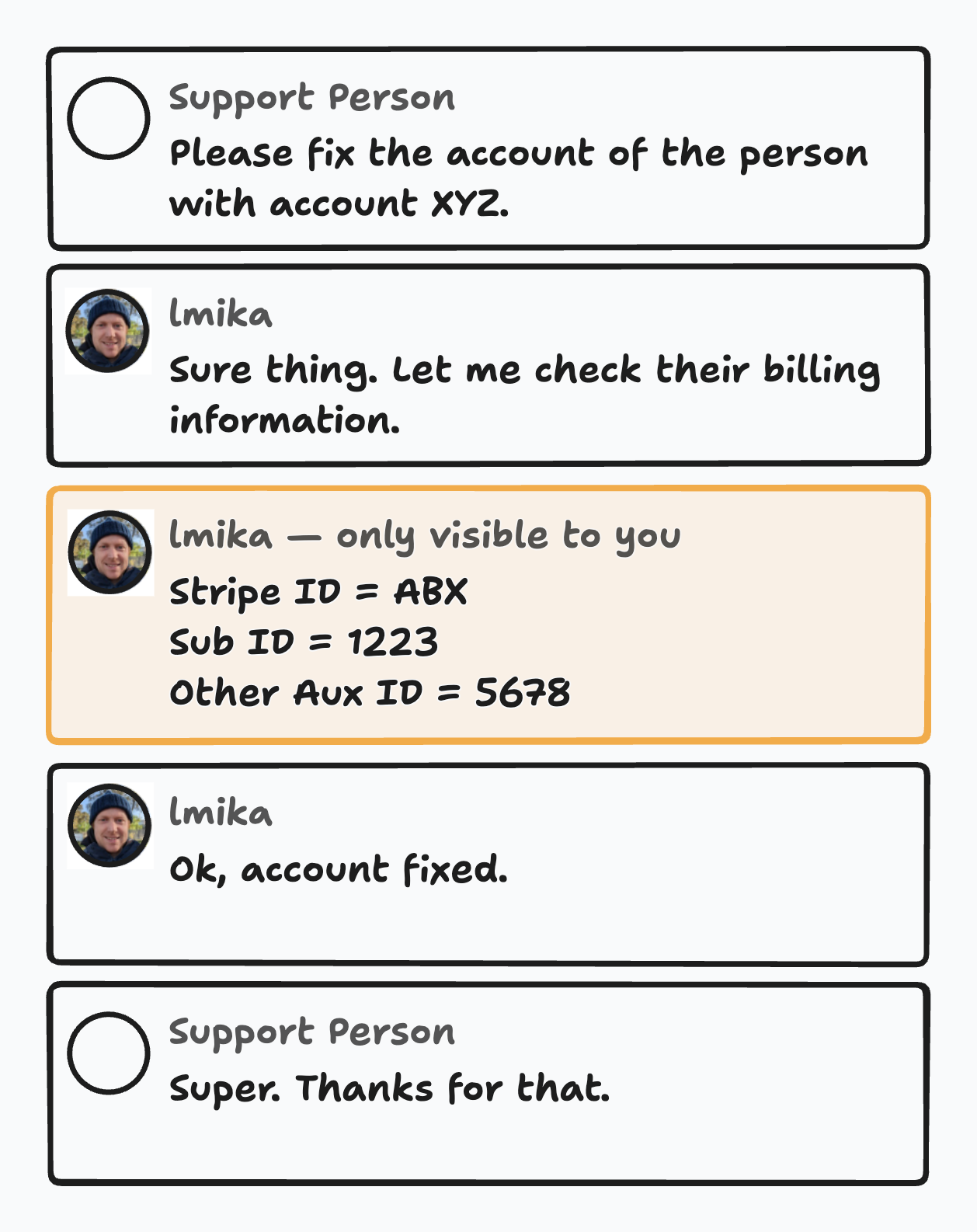

If Slack’s looking for features to add, my vote would be for personal “annotation” messages in threads, similar to what Hey mail has. Many a time I receive a support request as a Slack thread, and it’d be nice to add notes such as customer IDs as a message that only I can see.

Here’s a mockup:

Edit: I knew I’d talked about this before. Looking through On This Day, I found this post, where I professed my wish for FastMail to add the same feature. Probably a good hint that such a feature should be table stakes for any system involving other people.

-

New offering at the Cockatiel Cafe: the “breakfast bar”. 🦜

-

👨💻 New post on Databases over at Coding Bits: PostgreSQL, pgx, sqlc and bytea

-

Wish TLDraw offered a cylinder shape. I know my needs are quite niche, but I really like using this app for back-of-the-napkin architecture diagrams of software systems, and having a cylinder shape to represent a database or queue would really come in handy.

-

Playing around with Vintage Logo this morning. HT to @odd and @mandaris for posting a link to this iOS app. They’ve got some very nice logo templates here, including this art deco one featuring a bird in flight.

Used it to make this logo, which I call: lodgings for the evening.

-

I can’t for the life of me remember how to create a child page in Notion’s mobile app. Why do they hide that option with the bullets and headings? Couldn’t the New Page button be context-specific, instead of creating a new top-level page wherever you press it?

-

Reliving my European trip through my posts here. They’ve started to appear on my On This Day page.

I sometimes wonder if I should’ve posted more at the time. I deliberately kept to my usual pace of one or two posts a day, mainly because others suggest it’s usually not a great idea to post loads of photos of your trip on social media, lest you make people jealous about what you’re currently doing. Then again, when they say “social media” they mean Instagram or TikTok and not Micro.blog, although one could make the case that it’s still kinda, sorta, if-you-squint-and-tilt-your-head social media, maybe?

Ah well, doesn’t matter. I think I made the right call: living in the moment instead of spending all my time working out whether something was post-worthy. Plus, I’ve still got loads of photos and journal entries that I could turn into blog posts. Maybe one day I will.

-

Adjusting my SQL code formatting preferences at the moment, and it just feels so… byzantine. There are probably close to 200 different settings here (I stopped counting after 100), and hunting through them trying to find the one that controls whether the opening parenthesis should appear on the same line as the `CREATE` statement is so time-consuming.

It would be nice for editors to provide a way to “learn” a code formatting style. They could present you with some sample code and say, “format this the way you’d like to see it and we’ll learn from that.” There could be several of these samples, presented one at a time, each intended to answer a particular question about the style. For example: one might have a dramatically narrow page width, forcing the user to break long lines.

From that, they can build a profile that will be used to configure the formatter. The configuration will essentially be the same as what’s used by formatters today — and can be transferred or checked into source control like a regular config file. It’ll just come from this learning routine, rather than the user poking through piles of checkboxes and pickers spread across 8 different tabs (I counted those as well).
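To make the idea a bit more concrete, here’s a rough sketch in Python of how two such samples might be turned into a profile. It’s purely rule-based, nothing clever, and the setting names (`create_paren_placement`, `max_line_width`) and helper functions are hypothetical, not taken from any real formatter.

```python
# A rough, rule-based sketch: each sample the user reformats answers one
# style question. Setting names are hypothetical, not from a real formatter.


def learn_create_paren(sample: str) -> str:
    """Return "same_line" if the user kept the opening parenthesis on the
    CREATE line, otherwise "next_line"."""
    for line in sample.splitlines():
        text = line.strip()
        if text.upper().startswith("CREATE"):
            return "same_line" if "(" in text else "next_line"
    raise ValueError("sample has no CREATE statement")


def learn_line_width(sample: str) -> int:
    """Treat the longest non-blank line the user kept as the preferred width."""
    return max(len(line.rstrip()) for line in sample.splitlines() if line.strip())


def learn_profile(create_sample: str, narrow_sample: str) -> dict:
    # The resulting dict could be saved as a regular config file and
    # checked into source control.
    return {
        "create_paren_placement": learn_create_paren(create_sample),
        "max_line_width": learn_line_width(narrow_sample),
    }


# Samples as the user might have reformatted them.
create_sample = """CREATE TABLE users
(
    id   BIGINT PRIMARY KEY,
    name TEXT NOT NULL
);"""

narrow_sample = """SELECT id,
       name
FROM users
WHERE name = 'alice';"""

print(learn_profile(create_sample, narrow_sample))
```

Here the user moved the parenthesis onto its own line and wrapped the query at 21 characters, so the profile records `next_line` and a width of 21. A real version would need many more samples (and probably some tolerance for inconsistency), but the output is still just an ordinary formatter config.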

Could this be something an on-board LLM can actually do? Not sure. But if code editors are looking for “AI” features to add to their product, this might be one worth considering.

-

Side Scroller 95

I haven’t been doing much work on new projects recently. Mainly, I’ve been perusing my archives looking for interesting things to play around with. Some of them needed some light work to get running again, but really I just wanted to experience them. I did come across one old project which I’ll talk about here: a game I called Side Scroller 95. And yes, the “95” refers to Windows 95. Continue reading →

-

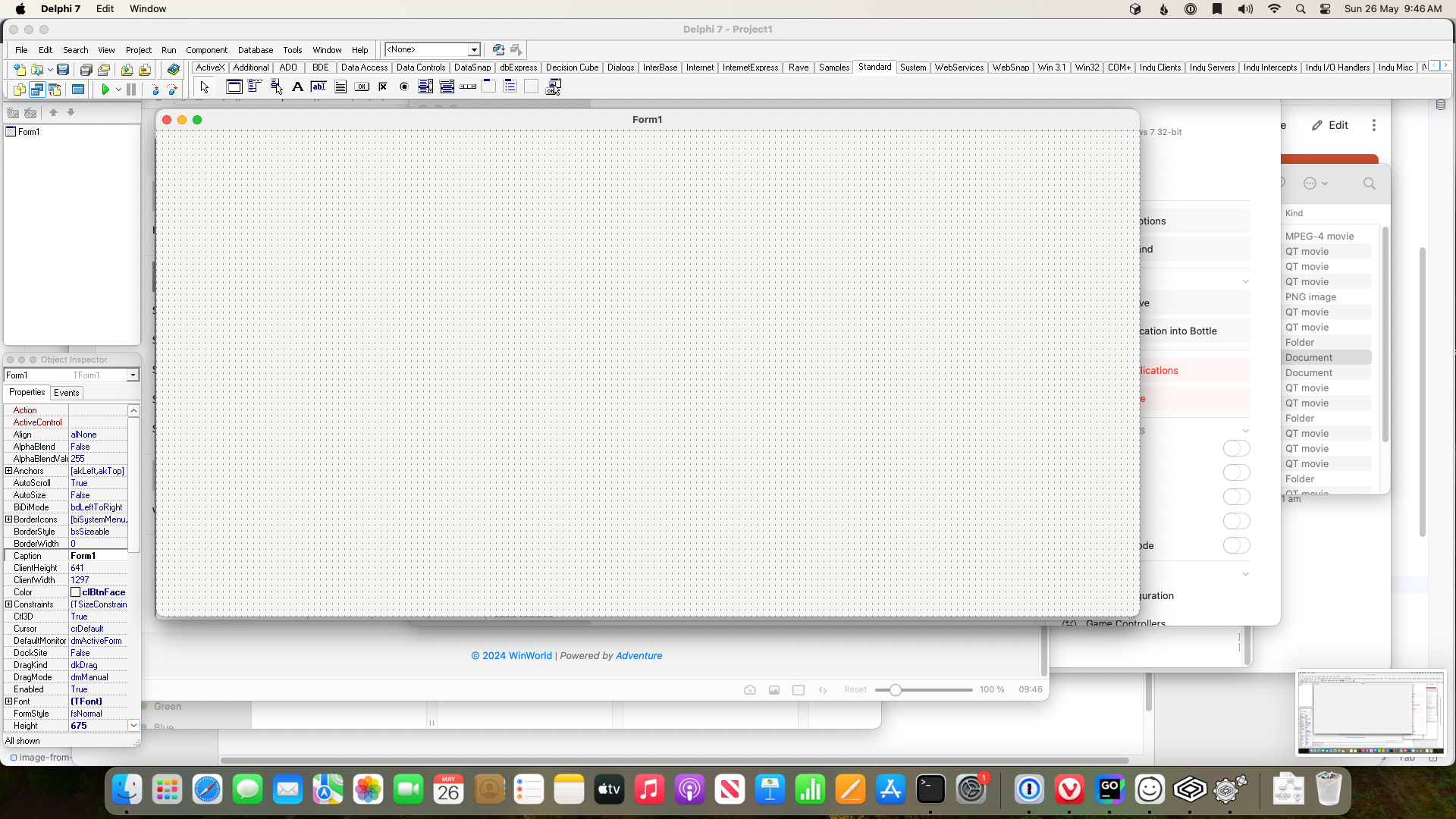

Coding like it’s 2003.

Poking around WinWorld this morning and found a working copy of Borland Delphi 7. Works perfectly in CrossOver.

-

Bulk Image Selection

Some light housekeeping first: this is the 15th post on this blog, so I thought it was time for a proper domain name. Not that buying a domain automatically means I’ll keep at it, but it does feel like I’ve got some momentum writing here now, so I’ll take the $24.00 USD risk. I’d also like to organise a proper site favicon. I’ve got some ideas, but I’ve yet to crack open Affinity Designer. Continue reading →