-

Tried the commute home via the Metro Tunnel and it looks like the gains are negligible.

The trains were timely: both the one I caught from Anzac, and my connection at Melbourne Central arrived when I reached the platform. But I felt that I got lucky here. And I ended up on the same train out I would’ve caught if I went my usual route.

Furthermore, I felt I got lucky with road crossings, and if I missed the one at Kings Way, I would’ve missed both trains.

So no, it’s not viable. Too many lucky arrivals just to get me on time with my usual way home. A decent alternative, but it won’t work for my main commute home.

-

TIL that the time ball, the thing that drops on New Year’s Eve in New York’s Times Square, was actually a proper form of time-keeping used in nautical operations. There’s even a historical time ball tower located in Williamstown, a suburb of Melbourne.

-

Took the Metro Tunnel to work again today, which I wasn’t completely planning for. But what can I say? It’s a nice commute. Brand spanking new infrastructure, along with a nicer walk to the office (apart from crossing Kings Way). Maybe I’ll stick with it for a while.

-

Argh! Just when I wanted to measure how long my commute home takes, my line is experiencing major delays. This is not how you do science.

-

One thing you’ll notice when you’re travelling through the Metro Tunnel is that all the exits have numbers. This is a recent change made just before the soft launch, and a few gunzels on YouTube wondered if it would’ve been better to list landmarks or destinations on the signs instead: this exit for the Shrine, that exit for St. Kilda Rd. trams heading to the city, etc.

As a local, this sounded like a convincing argument. I know what’s in the vicinity and where I want to go, and all I need to know is which exit will get me there the quickest. But while talking with others at work, someone mentioned that the exit numbers are useful for those who are less familiar with the area, and are using navigation software, with listed directions, to get around. That convinced me that keeping the exit numbers is probably better.

-

On Post Timestamps

Some thoughts on using dual timestamps for blog posts to differentiate between the publication date and the date of the event, considering the implications for chronological feeds and user experience. Continue reading →

-

Arrived at work a few minutes later than usual, so the commute in via the Metro Tunnel is viable (it could be better: Metro needs to run more trains during peak to justify the transfer). But I do like the walk in from Flinders St. so I’m unlikely to change that. It’ll be the commute home that may be more favourable to me.

-

Now that the Big Switch has been switched, it’s time to see how viable it is to integrate the new Metro Tunnel into my commute. Plus I’ve yet to check out Town Hall station.

-

The runoff from the drinking fountains around my place collects into small troughs at ground level. They’re typically for dogs, but the galahs have also been making use of them.

-

📘 Devlog

Micro apps - Some Score Cards

In the spirit of maintaining a document of what I’ve been working on, and being somewhat inspired by Matt Birchler’s posts about his micro apps, I thought I’d document some of the small apps I’ve worked on recently. The fact of the matter is that I’ve been building quite a few of these apps over the course of the summer, primarily in response to a specific need I have at the time. Continue reading →

-

Bluey sighting.

-

I think half the value of using coding agents is just having something to rubberduck with. You pose it a problem, it suggests something which doesn’t work, then while you’re trying to come up with a reply with why it’s wrong, you find the solution yourself (this happened to me today).

-

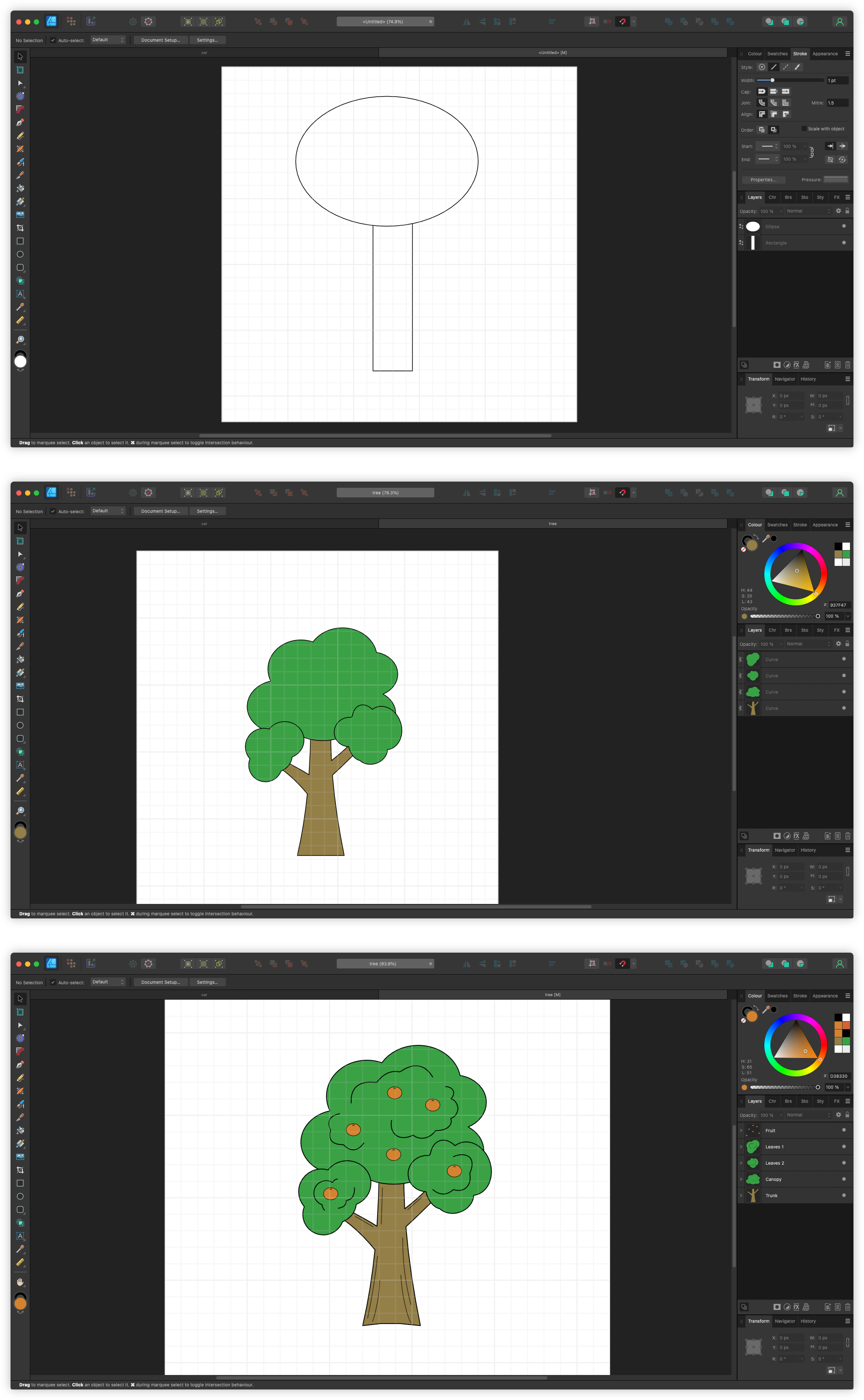

More work on assets for my niece’s game. This time I tried something organic: a tree. Not the most difficult organic thing, I agree. Need to build up to the hard things.

-

Amazing win by Alcaraz in the men’s semifinals of the Australian Open. What a match: 5 sets, 3 tie-breakers, 5 hours and 27 minutes. That was not an easy win.

-

🛠️ exe.dev: Persistent VMs via SSH

Managed VMs via SSH. Signed up for this, although I’m not entirely sure what I’m going to use it for.

-

Finished reading: Open by Andre Agassi 📚

Heard a few people recommend this, for good reason. This was a pretty good read. You don’t need to be a tennis enthusiast to enjoy it.

-

It’s a bit of a shame that I start browsing social media (or posting on Micro.blog) when I start listening to music on the way home. No longer “present” and actually listening to the music. Maybe I need to add a feature to Alto Player that’ll pause the music when I switch apps. 😀

-

TIL you can Option-drag things that have a macOS URL scheme into Obsidian to create a link to them. Managed to do this for both a file and an email.

-

I’m seeing some misunderstandings of how to use test helpers in the projects I’m working in. There are a few instances of helpers being copied and pasted around the repository, despite them doing largely the same thing, such as accessing the message bus. But the whole purpose of these helpers is that they build atop each other. You build out a message bus test helper for the provider that works with the message bus, and then you use that exact same helper in the tests for the service layer. It’s just like any other form of software engineering: you work with what’s already there.

This could be seen as a violation of the principle of unit tests being isolated, but I’d argue that that’s more of a principle in how the tests are executed: the outcome of one test doesn’t depend on the outcome of another. But just because it’s a unit test doesn’t mean that all principles of software engineering should be thrown out the window. Modular design, separation of concerns, encapsulation, abstraction, etc. These apply to unit tests just as much as they do to production code.
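
To make that concrete, here’s a rough sketch of what I mean. Everything in it is made up for illustration: `Bus` is a toy in-memory stand-in for a message bus, `RecordTopic` is the shared test helper, and `OrderService` is a hypothetical service-layer type. The point is only that the helper written for the bus-level checks is the same one the service-level check uses.

```go
package main

import (
	"fmt"
	"sync"
)

// Bus is a hypothetical in-memory stand-in for a real message bus.
type Bus struct {
	mu   sync.Mutex
	subs map[string][]func(string)
}

func NewBus() *Bus {
	return &Bus{subs: map[string][]func(string){}}
}

// Subscribe registers fn to be called for every message on topic.
func (b *Bus) Subscribe(topic string, fn func(string)) {
	b.mu.Lock()
	defer b.mu.Unlock()
	b.subs[topic] = append(b.subs[topic], fn)
}

// Publish delivers msg synchronously to every subscriber of topic.
func (b *Bus) Publish(topic, msg string) {
	b.mu.Lock()
	subs := append([]func(string){}, b.subs[topic]...)
	b.mu.Unlock()
	for _, fn := range subs {
		fn(msg)
	}
}

// BusRecorder is the shared test helper: it records everything
// published on one topic so a test can inspect it afterwards.
type BusRecorder struct {
	mu   sync.Mutex
	msgs []string
}

// RecordTopic attaches a new recorder to topic on the given bus.
func RecordTopic(b *Bus, topic string) *BusRecorder {
	r := &BusRecorder{}
	b.Subscribe(topic, func(msg string) {
		r.mu.Lock()
		defer r.mu.Unlock()
		r.msgs = append(r.msgs, msg)
	})
	return r
}

// Messages returns a copy of the messages recorded so far.
func (r *BusRecorder) Messages() []string {
	r.mu.Lock()
	defer r.mu.Unlock()
	return append([]string{}, r.msgs...)
}

// OrderService is a hypothetical service-layer type that publishes to the bus.
type OrderService struct{ bus *Bus }

func (s *OrderService) Place(id string) {
	s.bus.Publish("orders", "placed:"+id)
}

func main() {
	bus := NewBus()
	rec := RecordTopic(bus, "orders")

	// Bus-level check: the helper sees what is published directly.
	bus.Publish("orders", "hello")

	// Service-level check: same helper, no copy-pasted variant.
	svc := &OrderService{bus: bus}
	svc.Place("42")

	fmt.Println(rec.Messages())
}
```

In a real project the helper would probably live in a shared test package and take a `testing.TB`, but the shape is the same.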

-

Is there no bigger admission that a unit test is unreliable than attempting to fix it with a sleep? Ugh!

-

I may need to adjust my stance against mocking in unit tests to exclude anything that works with a message bus. There’s just too much asynchronicity going on: timing so tight that the speed of the machine dictates whether a test passes or fails. Probably easier to use mocks in those circumstances.

-

Reason Go should add enums number 5,132: you wouldn’t be left relying on runtime checks to verify that an enum value is valid. You could rely on the type system to do that. It’s all well and good to implement marshallers and unmarshallers that verify that the input is a valid enum value, but that doesn’t stop developers from casting any arbitrary string to your enum type. And then what are you expected to do in your String() method (which doesn’t return an error)? Do you panic? Return “invalid”? Return the string enum value as is, even if it is invalid?

Making it impossible to even produce invalid values is the whole reason why we have a type system. It’s why I prefer Go over dynamically typed languages like Python and JavaScript. So it would be nice if Go supported enums in the type system to get this guarantee.
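
Here’s a small sketch of the problem, using a made-up `Color` type. The `Valid` method and the way `String` flags bad values are just one choice among the options above, not a recommendation:

```go
package main

import "fmt"

// Color is a hypothetical string-backed "enum".
type Color string

const (
	Red   Color = "red"
	Green Color = "green"
)

// Valid is the runtime check that the type system can't do for us.
func (c Color) Valid() bool {
	switch c {
	case Red, Green:
		return true
	}
	return false
}

// String cannot return an error, so it has to pick some behaviour
// for invalid values. This version flags them in the output.
func (c Color) String() string {
	if !c.Valid() {
		return fmt.Sprintf("Color(invalid: %q)", string(c))
	}
	return string(c)
}

func main() {
	// Nothing stops this conversion: it compiles without complaint.
	c := Color("purple")
	fmt.Println(c.Valid(), c)
}
```

The conversion on the first line of `main` is the whole issue: the compiler is perfectly happy with it, so every consumer of `Color` has to remember to call `Valid`.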

-

Thinking more about TextEdit, there seems to be a tension between the expectations of the user and the platform owner. I can see TextEdit being a great testbed for any new feature the platform owner wants to add: iCloud Sync, AI writing tools, etc. Its simple design makes it a perfect canvas for experimenting with such features. Yet it’s this same simplicity which appeals to users who just want a no-frills text editor. They’re neither expecting nor wishing for bells and whistles like autosaving or text generation. They just need a basic text field with the stock-standard new/open/save interaction that’s been around for a good 35 years now. To me, there’s no need to push TextEdit forward in any capacity. TextEdit is feature complete, and has been since its original release.

-

Chalk me up as someone who hates that TextEdit persists Untitled files. I use such windows as in-memory scratchpads that sometimes contain pretty sensitive information I do not want saved. It’s especially problematic when iCloud synchronises them to computers you’re not expecting them to appear on.