Workpad

- It needs command line completion. Typing out a full attribute name is so annoying, especially considering that you need to be a little careful you got the attribute name right, lest you actually add a new one.

- It needs command line history. Pressing up a few times is so much better than typing out the full command again and again. Such a history could be something that lives in the workspace, preserving it across restarts.

- The set-attr and del-attr commands need a switch to take the value directly, rather than by prompting the user to supply it after entering the command (it can still do that, but have an option to take it as a switch as well). I think having a -set switch after the attribute names would suffice.

- 14 rows at the bottom of the screen (the default)

- Half the screen

- All but the top 6 rows of the screen, so you can still see a bit of the table view

- The entire screen (i.e. hiding the table view)

- Completely hidden (i.e. the table view takes up the entire screen).

- Define new commands

- Display messages to, and get input from, the user

- Read the attributes of, and set the attributes of, the currently selected row (only string attributes at this stage)

- Run queries and get result sets, which can optionally be displayed to the user

[1] I use the term “ordinary” here as a euphemism for “not very good”.

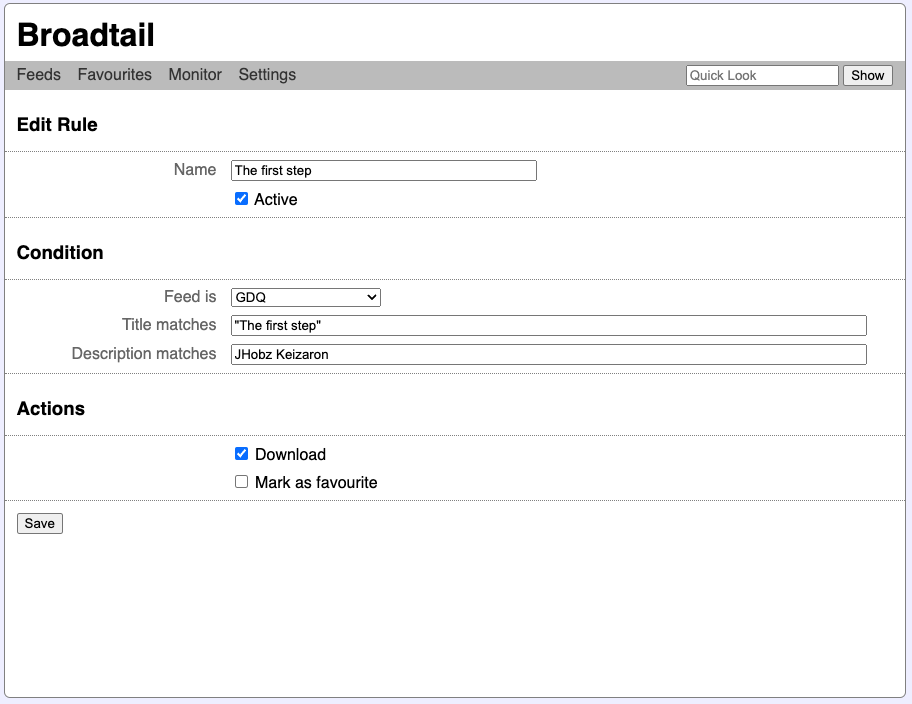

- The feed in which it appears. This can be set to “any” to apply the rule to all feed items.

- Whether the title matches a given string. The match rules are similar to those of the searches in the feed item list views: each of the space-separated tokens must appear somewhere in the title (in any case), with phrases written as quoted strings.

- Whether the description matches a given string.

- Start a download of the video

- Mark the feed item as a favourite

- Providers and Services will remain stateless

- State will be managed by controllers

- Operations in controllers are only available through tea.Cmd implementations.

- Updates from controllers will only be available through tea.Msg implementations.

- View models (i.e. tea.Model) will only know enough state to be able to render themselves.

- There will be one master model which will coordinate the communication between controllers and view models. This model will react to messages from the controllers and update the views. It will also react to messages from the views and launch operations on the controllers.

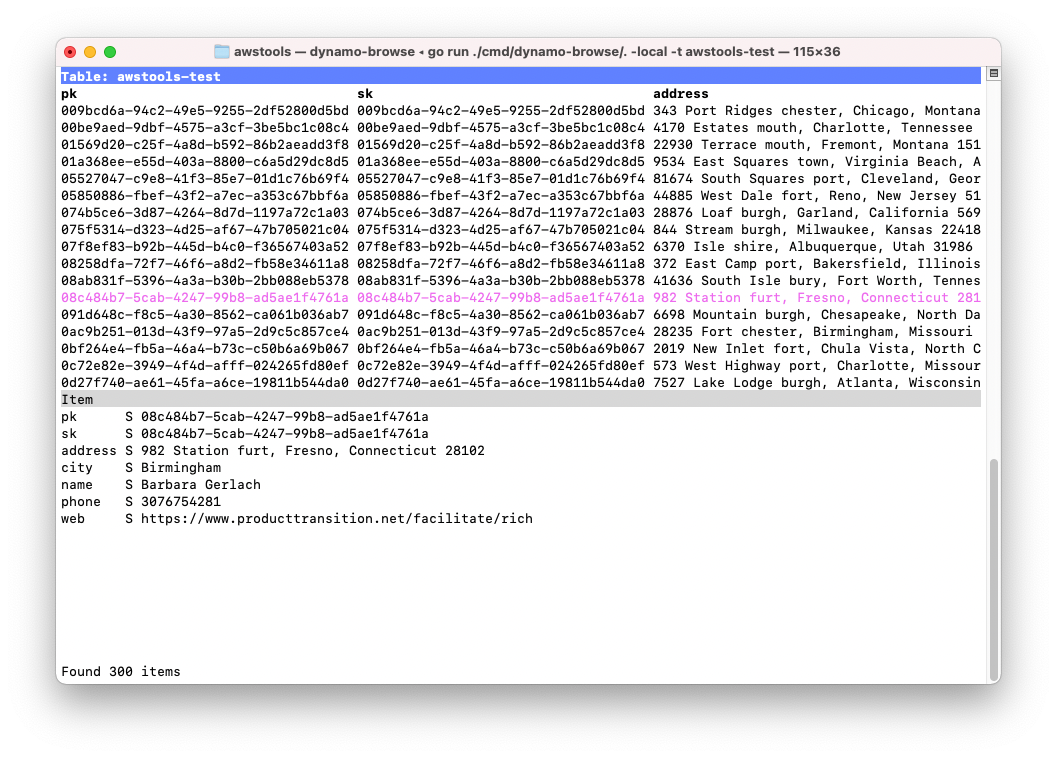

- A TUI browser/workspace for DynamoDB tables

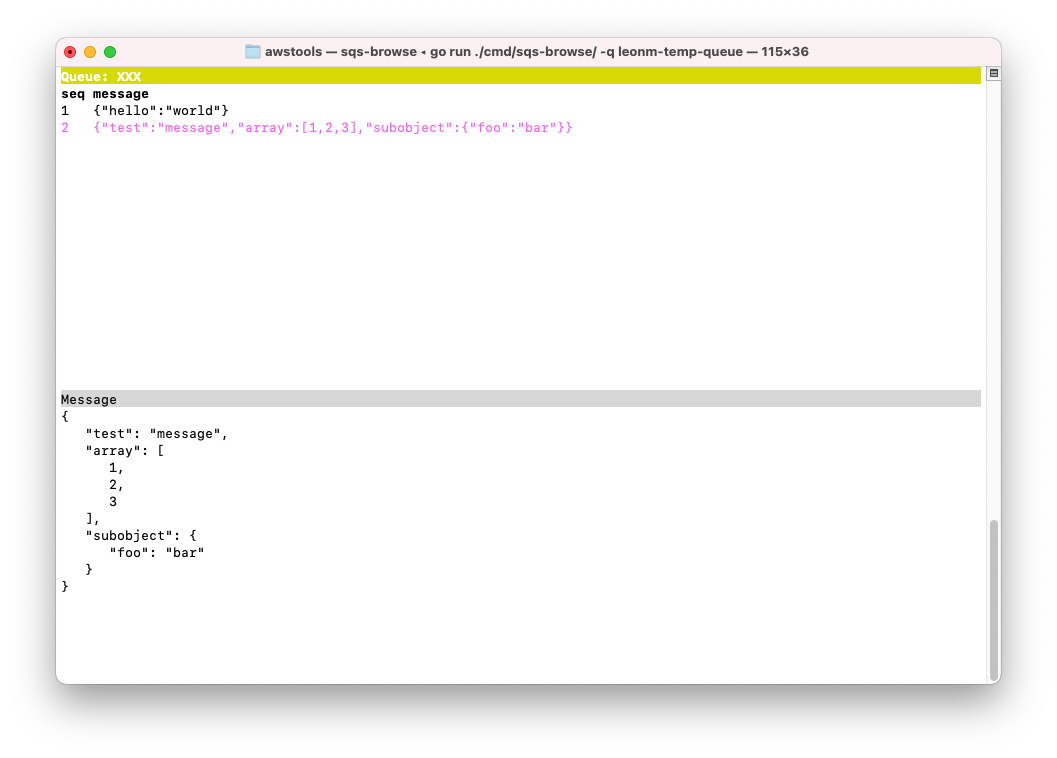

- A TUI workspace for monitoring SQS queues

- Some tool for reading JSON style log files.

- Queries in DynamoDB: There’s currently no way to run queries or filter the resulting items. I’m hoping to design a small query language to do this. I’m already using Participle to power a very simple expression language to duplicate items, so as long as I can design something that is expressive enough and knows how to use particular indices, I think this should work.

- Putting brand new items in DynamoDB: At the moment you can create new items based on existing items in a way, but there’s currently no way to create brand new items or adjust the attributes of existing items. For this, I’d like to see an “edit item” mode, where you can select the attribute and edit the value, change the type, add or remove attributes, etc. This would require some UI rework, which is already a bit tentative at this stage (it was sort of rushed together, and although some architectural changes have been made to the M and the C in MVC, work on the V is still outstanding).

- Preview item changes before putting them to DynamoDB: This sort of extends the point above, where you see the diff between the old item and new item before it’s put into DynamoDB.

- Workspaces in SQS Browse: One idea I have for SQS Browse is the notion of a “workspace”. This is a persistent storage area located on disk where all the messages from the queue would be saved. Because SQS Browse is currently pulling messages from the SQS queue, I don’t want the user to get into a state where they’ve lost their messages for good. The idea is that a workspace is always created when SQS Browse is launched. The user can choose the workspace file explicitly, but if they don’t, then the workspace will be created in the temp directory. Also implicit in this is support for opening existing workspaces to continue work in them.

- Multiple queues in SQS Browse: Something that I’d like to see in SQS Browse is the ability to deal with multiple queues. Say you’re pulling from one queue and you’d like to push messages to another. You can use a command to add a queue to the workspace. Then, you can do things like poll the queue, push messages in the workspace to the queue, monitor its queue length, etc. Queues would be addressable by number or some other way, so you can simply run the command push 2 to push the current message to queue 2.

Finished version 0.0.3 of Audax Toolset yesterday. The code has been ready since the weekend, but it took me Sunday morning and yesterday (Monday) evening to finish updating the website. All done now.

Now the question is whether to continue working on it, or do something different for a change. There are a few people using Dynamo-Browse at work now, so part of me feels like I should continue building features for it. But I also feel like switching to another project, at least for a little while.

I guess we’ll let any squeaky wheels make the decision for me.

I’ve been using Dynamo-Browse all morning and I think I’ll make some notes about how the experience went. In short: the command line needs some quality of life improvements. Changing the values of two attributes on two different items, while putting them to the DynamoDB table each time, currently results in too many keystrokes, especially given that I was simply going back and forth between two different values for these attributes.

So, in no particular order, here is what I think needs to be improved with the Dynamo-Browse command line:

Finally, I think it might be time to consider adding more language features to the command line. At the moment the commands are just made up of tokens, coming from a split on whitespace characters (while supporting quotes). But I think it may be necessary to convert this into a proper language grammar, and add some basic control structures to it, such as entering multiple commands in a single line. It doesn’t need to be a super sophisticated language: something similar to the likes of TCL or shell would be enough at first.
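To make the idea a bit more concrete, here is a rough sketch of a tokeniser that splits on whitespace, honours double quotes, and allows several commands on one line. It is not the dynamo-browse implementation: the parseCommandLine name, the use of ";" as a command separator, and the lack of escape sequences are all assumptions made for illustration.

```javascript
// Split a command line into commands, each being a list of tokens.
// Assumptions (not from dynamo-browse itself): ";" separates commands,
// double quotes group words, and there are no escape sequences.
function parseCommandLine(line) {
  const commands = [];
  let tokens = [];
  let current = "";
  let inQuotes = false;
  let hasToken = false; // true once the current token has started (allows "")

  const endToken = () => {
    if (hasToken) tokens.push(current);
    current = "";
    hasToken = false;
  };
  const endCommand = () => {
    endToken();
    if (tokens.length > 0) commands.push(tokens);
    tokens = [];
  };

  for (const ch of line) {
    if (inQuotes) {
      if (ch === '"') inQuotes = false;
      else current += ch;
    } else if (ch === '"') {
      inQuotes = true;
      hasToken = true;
    } else if (/\s/.test(ch)) {
      endToken();
    } else if (ch === ";") {
      endCommand();
    } else {
      current += ch;
      hasToken = true;
    }
  }
  if (inQuotes) throw new Error("unterminated quote");
  endCommand();
  return commands;
}
```

With this sketch, the (purely illustrative) line `set-attr "home address"; put` is split into two commands: `["set-attr", "home address"]` and `["put"]`.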

It might be that writing a script would have solved this problem, and it would (to a degree at least). But not everything needs to be a script. I tried writing a script this morning to do the thing I was working on and it felt just so overkill, especially considering how short-lived this script would actually be. Having something that you can whip up in a few minutes can be a great help. It would have probably taken me 15-30 minutes to write the script (plus the whole item update thing hasn’t been fully implemented yet).

Anyway, we’ll see which of the above improvements I’ll get to soon. I’m kinda thinking of putting this project on hold for a little while, so I could work on something different. But if this becomes too annoying, I may get to one or two of these.

Thinking About Scripting In Dynamo-Browse

I’ve been using the scripting facilities of dynamo-browse for a little while now. So far they’ve been working reasonably well, but I think there’s room for improvement, especially in how scripts are structured.

At the moment, scripts look a bit like this:

```javascript
const db = require("audax:dynamo-browse");
const exec = require("audax:x/exec");

db.session.registerCommand("cust", () => {
    db.ui.prompt("Enter UserID: ").then((userId) => {
        return exec.system("/Users/lmika/projects/accounts/lookup-customer-id.sh", userId);
    }).then((output) => {
        // Strip whitespace from the shell script's output to get the customer ID
        let customerId = output.replace(/\s/g, "");
        return db.session.query(`pk="CUSTOMER#${customerId}"`, {
            table: `account-service-dev`
        });
    }).then((custResultSet) => {
        if (custResultSet.rows.length == 0) {
            db.ui.print("No such user found");
            return;
        }
        db.session.resultSet = custResultSet;
    });
});
```

This example script (modified from one that I’m actually using) will create a new cust command which will prompt the user for a user ID, run a shell script to return an associated customer ID for that user ID, run a query for that customer ID in the “account-service-dev” table, and if there are any results, set that as the displayed result set.

This is all working fine, but there are a few things that I’m a little unhappy about. For example, I don’t really like the idea of scripts creating new commands off the session willy-nilly. Commands feel like they should be associated with the scripts that implement them, and this is sort of done internally, but I’d like it to be explicit to the script writer as well.

At the same time, I’m not too keen on requiring the script writer to do things like define a manifest. That would dissuade “casual” script writers from writing scripts to perform one-off tasks.

So, I’m thinking of adding a plugin global object, which provides the hooks that the script writer can use to extend dynamo-browse. This will kinda be like the window global object in browser-based JavaScript environments.

Another thing that I’d like to do is split out the various services of dynamo-browse into separate pseudo packages. In the script above, calling require("audax:dynamo-browse") will return a single dynamo-browse proxy object, which provides access to the various services like running queries or displaying things to the user. This results in a lot of db.session.this or db.ui.that, which is a little unwieldy. Separating these into different packages will allow the script writer to bind them to different package aliases at the top level.

With these changes, the script above will look a little like this:

```javascript
const session = require("audax:dynamo-browse/session");
const ui = require("audax:dynamo-browse/ui");
const exec = require("audax:x/exec");

plugin.registerCommand("cust", () => {
    ui.prompt("Enter UserID: ").then((userId) => {
        return exec.system("/Users/lmika/projects/accounts/lookup-customer-id.sh", userId);
    }).then((output) => {
        // Strip whitespace from the shell script's output to get the customer ID
        let customerId = output.replace(/\s/g, "");
        return session.query(`pk="CUSTOMER#${customerId}"`, {
            table: `account-service-dev`
        });
    }).then((custResultSet) => {
        if (custResultSet.rows.length == 0) {
            ui.print("No such user found");
            return;
        }
        session.resultSet = custResultSet;
    });
});
```

Is this better? Worse? I’ll give it a try and see how this goes.

Dynamo-Browse: Using The Back-Stack To Go Forward

More work on Audax toolset. Reengineered the entire back-stack in dynamo-browse to support going forward as well as backwards, much like going forward in a web-browser. Previously, when going back, the current “view snapshot” was being popped off the stack and lost forever (a “view snapshot” is information about the currently viewed table, including the current query and filter if any are set). But I’d need to keep these view snapshots around if I wanted to add going forward as well.

So this evening, I changed this so that view snapshots are no longer deleted. Instead, there is now a pointer to the current view snapshot that will go up or down the stack when the user wants to go backwards or forwards. This involved changing the back-stack from a singly-linked list to a doubly-linked list, but that was pretty straightforward. The logic to pop snapshots from the stack hasn’t been removed: in fact, it has been augmented so that it can now remove the entire head up to the current view snapshot. This happens when the user goes backwards a few times, then runs a new query or sets a new filter, again much like a web-browser where going backwards a few times, then clicking a link, will remove any chance of going forward from that point.
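The pointer-based approach can be sketched roughly as follows. This is an in-memory illustration only, written in JavaScript for consistency with the script examples; the BackStack class and its method names are hypothetical, and the real back-stack lives in the workspace file rather than in memory.

```javascript
// A back-stack that supports going forward, kept in memory for brevity.
// Assumption: snapshots are plain objects; the real tool persists them.
class BackStack {
  constructor(initialSnapshot) {
    this.current = { snapshot: initialSnapshot, prev: null, next: null };
  }

  // Record a new view. Anything "ahead" of the current node is dropped,
  // just as a browser discards forward history when a link is clicked.
  push(snapshot) {
    const node = { snapshot, prev: this.current, next: null };
    this.current.next = node; // the old forward chain becomes unreachable
    this.current = node;
  }

  // Move the pointer back one step without deleting anything.
  back() {
    if (this.current.prev === null) return null;
    this.current = this.current.prev;
    return this.current.snapshot;
  }

  // Move the pointer forward one step, if there is anything ahead.
  forward() {
    if (this.current.next === null) return null;
    this.current = this.current.next;
    return this.current.snapshot;
  }
}
```

Going back twice and then pushing a new snapshot leaves nothing to go forward to, which is the behaviour described above.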

The last thing I changed this evening was to restore the last viewed table, query or filter when relaunching dynamo-browse using an existing workspace. This works quite nicely with the back-stack as it works now: it just needs to read the view snapshot at the head.

Adding Copy-To-Clipboard And Layout Changes In Dynamo-Browse

Spent some more time on dynamo-browse this morning, just a little bit.

Got one new feature built and merged to main, which is the ability to copy the displayed item to the pasteboard by pressing c. This is directly inspired by a feature in K9S, which allows you to copy the logs of a running pod to the pasteboard (I use this feature all the time). The package I’m using to access the pasteboard is github.com/golang-design/clipboard, which seems to offer a nice cross-platform approach to doing this.

The copied item is not meant for machine use. It’s exactly what’s displayed in the item viewport and is intended for tracking items that change over time. What I’ve found myself doing, when I need to track such an item, is to manually select it in Terminal and paste it into an untitled TextEdit window. I do this again when the item changes until I’m ready to eye the changes manually. It’s not sophisticated, but it tends to work. And although this feature is not a full-blown item comparer, it will make this use case a little easier. Plus, it will copy the entire item, not just the lines displayed when a viewed item is too large for the viewport.

Speaking of which, the other thing I started working on today was on allowing the user to resize the item viewport to take up more of the screen. At the moment, the displayed item viewport takes up the bottom 14 lines of the screen, and is not resizable. Naturally, for large items, this is not large enough, and having the ability to resize this viewport would be a good thing to have.

I’ve been thinking about the best way to handle this, and I’ve decided that an approach similar to how Vim does this (where you need to press a key-chord followed by a number to move the vertical split between two views) would be a little too annoying here. Too much fine-grained control for when you just need the viewport bigger. So, I’m opting for a more coarse-grained approach, where you simply press w and the viewport size will cycle amongst 5 different sizes:

Doing this will not necessarily steal focus from the table view: even if the item viewport takes up the entire screen, you can still go through each item by pressing Up or Down. But you’ll be able to see more of the selected item.
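As a rough illustration of the cycling behaviour, the five sizes (14 rows, half the screen, all but the top 6 rows, the entire screen, and hidden) can be modelled as functions of the terminal height. This is only a sketch: the layout names and the nextLayout and viewportRows helpers are made up for the example, and the actual tool is written in Go.

```javascript
// Each layout maps the terminal height to the item viewport height (rows).
// The names are hypothetical; the sizes follow the five described in the post.
const layouts = [
  { name: "default", rows: (h) => Math.min(14, h) },
  { name: "half", rows: (h) => Math.floor(h / 2) },
  { name: "most", rows: (h) => Math.max(h - 6, 0) },
  { name: "full", rows: (h) => h },
  { name: "hidden", rows: () => 0 },
];

// Pressing "w" advances to the next layout, wrapping around at the end.
function nextLayout(index) {
  return (index + 1) % layouts.length;
}

// Number of rows the item viewport occupies for a given layout and screen.
function viewportRows(index, screenHeight) {
  return layouts[index].rows(screenHeight);
}
```

Keeping the current layout as a single index means the key handler only has to call nextLayout and recompute the two view heights.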

Anyway, that’s the theory. We’ll see how well this works in practice.

Adding A Back-Stack To Dynamo-Browse

Spent some more time working on dynamo-browse, this time adding a back-stack. This can be used to go back to the previously viewed table, query or filter by pressing the Backspace key, kind of like how a browser back button works.

This is the first feature that makes use of a workspace, which is a concept that I’ve been thinking about since the start of the project. A workspace is basically a file storing various bits of state that can be recalled in future launches of the tool. A workspace is always created, even if one is not explicitly specified by the user. The workspace filename can be set by using the -w switch on launch, or if one is not specified, then a new workspace filename within the temp directory is generated. The workspace file itself is a Bolt database, which is a very simple, embeddable key/value store that uses B+trees and memory-mapped data access. I’m actually using StormDB to access this file, since it provides a nice interface for storing and querying Go structs without having to worry about marshalling or unmarshalling.

The back-stack consists of view-snapshots, which are essentially records of the currently viewed table, filter, and query. The view-snapshots are stored as a linked list, and the back-stack simply pushes new snapshots as the view changes (because this is stored on file, the backlinks are actually ID references and not physical pointers). I had a bit of trouble getting this right at first. The initial version actually pushed a snapshot of the previous view before it changed (i.e. a new table was selected or the filter was changed). But this resulted in a bit of a messy implementation, with push calls copied and pasted across the various controllers with various special cases, etc.

So I went back and reimplemented it so that the top of the back-stack is actually a snapshot of the current view. Pressing the Backspace key will actually pop the current snapshot, then read the head of the stack and use that to update the view. This means that there will always be one item in the stack, which bothered me a little at first, but that did make the code easier to implement.
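Here is a small sketch of that arrangement, with a Map standing in for the records in the workspace file. The SnapshotStore name, the record shape (id, prevId, view), and the ID scheme are all assumptions for illustration; the real tool stores Go structs in the Bolt database.

```javascript
// Snapshots keyed by ID, standing in for records in the workspace file.
// Back-links are ID references rather than object pointers.
class SnapshotStore {
  constructor() {
    this.records = new Map(); // id -> { id, prevId, view }
    this.headId = null;
    this.nextId = 1;
  }

  // Push a snapshot of the view as it is now; it becomes the new head.
  push(view) {
    const record = { id: this.nextId++, prevId: this.headId, view };
    this.records.set(record.id, record);
    this.headId = record.id;
  }

  // Pop the current snapshot and return the view to restore, or null if
  // only the current view remains (the stack never becomes empty).
  back() {
    const head = this.records.get(this.headId);
    if (!head || head.prevId === null) return null;
    this.records.delete(head.id);
    this.headId = head.prevId;
    return this.records.get(this.headId).view;
  }
}
```

Because the head is always the current view, restoring the last view on launch only needs to read the record that headId points to.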

There is another benefit to this arrangement which just came to me while I was writing this. Since the current state of the view is always at the top of the stack, I could add the ability to restore the last view when launching dynamo-browse with an existing workspace, saving the user from selecting a table and running their last query. This will come a bit later. For the meantime, I need to get this back-stack working with user scripts, so that when a script changes the view, the user can still go back.

Finally, a shoutout to boltcli which is a useful CLI tool for browsing a Bolt database. This tool has come in handy for debugging this feature.

More On Scripting In Dynamo-Browse

Making some more progress on adding scripting to dynamo-browse. It helps being motivated to be able to write some scripts for myself, just to be able to make my own working life easier. So far the following things can be done within user scripts:

Here’s a modified example of a script I’ve been working on that does most of what is listed above:

```javascript
const db = require("audax:dynamo-browse");

db.session.registerCommand("cust", () => {
    db.ui.prompt("Enter user ID: ").then((userId) => {
        return db.session.query(`userId="${userId}"`);
    }).then((resultSet) => {
        db.session.currentResultSet = resultSet;
    });
});

db.session.registerCommand("sub", () => {
    let currentItem = db.session.selectedRow;
    let currentUserId = currentItem.item["userId"];
    if (!currentUserId) {
        db.ui.print("No 'userId' on current record");
        return;
    }

    db.session.query(`pk="ID#${currentUserId}"`, {
        table: "billing-subscriptions"
    }).then((rs) => {
        db.session.currentResultSet = rs;
    });
});
```

The process of loading this script is pretty basic at the moment. I’ve added a loadscript command, which reads and executes a particular JavaScript file taken as an argument. Scripts can be loaded on startup by putting these loadscript commands (along with any other commands) in a simple RC file that is read on launch.

I’m not sure how much I like this approach. I would like to put some structure around how these scripts are written and loaded, so that they’re not just running atop of one another. However, I don’t want too much structure in place either. To me, having the floor for getting a script written and executed too high is a little demotivating, and sort of goes against the ability to automate these one-off tasks you sometimes come across. That’s the downside I see with IDEs like GoLand and, to a lesser degree, VS Code: they may have vibrant plugin ecosystems, but the minimum amount of effort to automate something is high enough that it may not be worth the hassle.

A nice middle ground I’m considering is to have two levels of automation: one using JavaScript, and the other closer to something like Bash or TCL. The JavaScript would be akin to plugins, and would have a rich enough API and runtime to support a large number of sophisticated use-cases, with the cost being a fair degree of effort to get something usable. The Bash or TCL level would have a lower level of sophistication, but it would be quicker to do these one-off tasks that you can bang out and throw away. This would mean splitting the effort between the scripting API and command line language, which would fall on me to do, so I’m not sure if I’d go ahead with this (the command line “language” is little more than a glorified whitespace split at the moment).

In the meantime, I’ll focus on making sure the JavaScript environment is good enough to use. This will take some time and dog-fooding so there’ll be plenty to do.

I’ve also started sharing the tool with those that I work with, which is exciting. I’ve yet to get any feedback from them, but they did seem quite interested when they saw me using it. I said this before, but I’ll say it again: I’m just glad that I put a bit of polish into the first release and got the website up with a link I can share with them. I guess this is something to remember for next time: what you’re working on doesn’t need to be complete, but it helps that it’s in a state that is easy enough to share with others.

Scripting In Dynamo-Browse

Making some progress with adding scripting capabilities to dynamo-browse. I’ve selected a pretty full-featured JS interpreter called goja. It has full support for ECMAScript 5.1 and some pretty good coverage of ES6 as well. It also has an event loop, which means that features such as setTimeout and promises come out of the box, which is nice. Best of all, it’s written in Go, meaning a single memory space and shared garbage collector.

I’ve started working on the extension interface. I came up with a bit of a draft during the weekend and am now just implementing the various bindings. I don’t want to go too fast here. I’m just thinking about all the cautionary tales from those that were responsible for making APIs, and introducing mistakes that they had to live with. I imagine one way to avoid this is to play with the extension API for as long as it makes sense, smoothing out any rough edges when I find them.

It’s still early days, but here are some of the test scripts I’ve been writing:

```javascript
// Define a new "hello" command which will ask for your name and
// print it at the bottom.
const dynamobrowse = require("audax:dynamo-browse");

dynamobrowse.session.registerCommand("hello", () => {
    dynamobrowse.ui.prompt("What is your name? ").then((name) => {
        dynamobrowse.ui.alert("Hello, " + name);
    });
});
```

The prompt method here asks the user for a line of text, and will return the user’s input. It acts a bit like the prompt function in the browser, except that it uses promises. Unfortunately, Goja does not yet support async/await so that means that anonymous functions will need to do for the time being (at least Goja supports arrow functions).

Here’s another script, which prints out the name of the table, and sets the value of the address field on the first row:

```javascript
const dynamobrowse = require("audax:dynamo-browse");

dynamobrowse.session.registerCommand("bla", () => {
    let rs = dynamobrowse.session.currentResultSet;
    let tableName = rs.table.name;

    dynamobrowse.ui.alert("Current table name = " + tableName);

    rs.rows[0].item.address = "123 Fake St.";
});
```

The rows array and the item object make use of DynamicArray and DynamicObject, a nice feature in Goja which means you do not need to set the elements of the array, or the fields on the object, explicitly. Instead, when a field or element is looked up, Goja will effectively call a method on a Go interface with the field name or element index as a parameter. This is quite handy, particularly as it cuts down on memory copies.
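The same idea can be illustrated in plain JavaScript with a Proxy. This is only an analogy: Goja’s DynamicObject is a Go interface, and nothing below is Goja’s API. The backingItem map and the lookups array are hypothetical stand-ins for the Go-side data and for observing when the host is consulted.

```javascript
// A stand-in for the Go-side data. Nothing is copied into the proxy.
const backingItem = new Map([["address", "1 Example Rd."]]);
const lookups = [];

// Every property read or write is forwarded to the backing data on demand,
// instead of the object being populated with fields up front.
const item = new Proxy({}, {
  get(_target, field) {
    lookups.push(field); // record that the host was asked for this field
    return backingItem.get(field);
  },
  set(_target, field, value) {
    backingItem.set(field, value); // writes go straight to the host data
    return true;
  },
});
```

Reading `item.address` triggers a single lookup against the backing data, and assigning to it updates that data directly, with no intermediate copy of the item.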

These scripts work but they do require some improvements. For example, some of these names could possibly be shortened, just to reduce the amount of typing involved. They also need a lot of testing: I have no idea if the updated address in the example above will be saved properly.

Even so, I’m quite happy about how quickly this is coming along. Hopefully soon I’ll be in a position to write the test script I’m planning for work.

Also, I got my first beta user. Someone at work saw me use dynamo-browse today and asked where he could get a copy. I’m just so glad I got the website finished in time.

Release Preparation & Next Steps

Finally finished the website for the Audax toolset and cut an initial release (v0.0.2). I’ve also managed to get binary releases for Linux and Windows made. I’ve started to work on binary releases for macOS with the intention of distributing it via Homebrew. This is completely new to me so I’m not sure what progress I’ve made so far: probably nothing. For one thing, I’ll need a separate machine to test with, since I’ve just been installing them from source on the machines I’ve been using. Code signing is going to be another thing that will be fun to deal with.

I’ll slip that into a bit of a background task for the moment. For now, I’d like to start work on a feature for “dynamo-browse” that I’ve been thinking of for a while: the ability for users to extend it with scripts.

Some might argue that it may be a little early for this feature, but I’m motivated to do it now as I have a personal need for this, which is navigating amongst a bunch of DynamoDB tables that all store related data. This is all work related, so I can’t say too much here. But I will say that doing this now is a little annoying. It involves copying and pasting keys, changing tables, and running filters and queries manually: all possible, but very time consuming. Having the ability to do all this with a single command or keybinding would be so much better. And since this is all work related, I can’t simply modify the source code directly as it will give too much away. Perfect candidate for using an embedded scripting language.

So I think that’s what I’ll start work on next.

I also need to think about starting work on “sqs-browse”. This would be a complete rewrite of what is currently there, which is something that can be used for polling SQS queues and viewing the messages there. I’m hoping for something a little more sophisticated, involving a workspace for writing and pushing messages to queues, along with pulling, saving and routing them from queues. I haven’t got an immediate need for this yet, but I’ve come close on occasion so I can’t leave this too late.

A New Name for Audax Tools (nee AWS Tools)

I think I’ve settled on a name for the project I’ve been calling “awstools”. “Settled” is probably a good word for it: I came up with it about a week ago, and dismissed it at first as being pretty ordinary[1]. But over that time, it’s been slowly growing on me. Also, I’ve yet to come up with any alternatives that are better.

Anyway, the name that I’ve settled on is the Audax Toolset.

It’s not a great name. Honestly, anyone with more creativity than me could probably come up with a bunch of names better than this. I’m going to play the name-is-the-thing-you-say-to-talk-about-the-thing card here.

But the name was not completely random. “Audax” was chosen from the second part of the Latin name for a wedge-tailed eagle: Aquila audax. Translated from Latin it means bold, and although I would definitely not describe this project as in any way “bold”, it is a TUI based application which, given the limited palette for building UIs, may have some instances of bold font. But what I’m trying to evoke with this name is the sense of an eagle flying in a cloudless sky. Cloudless → cloudy → the Cloud → AWS (yeah, like I said it’s not a great name).

Anyway, it’s good enough for the moment. And the domain name audax.tools was available, which was a good thing. Right now, the domain doesn’t point to anything but it will eventually point to the website and user guide.

Speaking of which, the user guide is still slowly coming along. I got a very rough draft of the actual guide itself finished. I’ll need to proofread it and flesh it out a little, and also add some screenshots. The reference, which will list all the commands and key bindings (there aren’t many of them), needs to be written as well, but that’s probably the easiest thing to write. I will admit that the guide itself makes for pretty dry reading, and I’m not entirely convinced it would be enough for someone coming in cold to download the tools and start using them. I may also need to write some form of tutorial, or maybe even record a demo video. Something to consider once I’ve got the rest of the site finished, I guess.

AWS Tools: Documentation & The Website

Worked a little more on “awstools” (still haven’t thought of a good alternative name for it). I think the “dynamo-browse” tool is close to being in a releasable state. I’ve spent the last couple of days trying to clean up most of the inconsistencies, and making sure that it’s being packaged correctly.

Now it’s documentation writing time. I’m working my way through a very basic website and user guide. It’s been a little while since I’ve written any form of user-level documentation — most of the documents I write have been for other developers I work closely with — and I admit that it feels like a bit of a slog. It might be the tone of writing that I’ve adopted: a little dry and impersonal, trying to walk that fine line between being informative without swamping the reader with big blocks of words. I might need to work on that: no real reason why the documentation needs to be boring to the reader.

The website itself will be a statically generated site using Hugo and will most likely be served using GitHub pages. I’ve settled on the terminal theme, since “awstools” is a suite of terminal-based apps. That reasoning might be a little corny, but to be honest, I have grown to actually like the theme itself. I haven’t settled on a domain for it yet.

While working on the documentation, I ran into a useful website that contains a comprehensive list of HTML entities, complete with previews. Good reference for the arrow glyphs I need to use to represent key bindings in the document.

AWS Tools Dev Diary

A little more work on “awstools” today, mainly on a bit of a cleanup spree to make them suitable for others to use. This generally means fixing up any inconsistencies in how the commands work. An example of this is the put command, which now writes all modified items that are marked to the table (or if there are no marked items, all modified items) instead of just the selected one. This brings it closer to how the delete command works.
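The selection rule for put can be expressed in a few lines. This is just a sketch of the rule as described, not the actual implementation: the itemsToPut name and the marked and modified flags are hypothetical, and “no marked items” is assumed to mean that nothing at all is marked.

```javascript
// Choose which items "put" should write: modified items that are marked,
// or every modified item when nothing is marked.
// The "marked" and "modified" flags are hypothetical field names.
function itemsToPut(items) {
  const modified = items.filter((item) => item.modified);
  const anyMarked = items.some((item) => item.marked);
  return anyMarked ? modified.filter((item) => item.marked) : modified;
}
```

Unmodified items are never written, whether or not they are marked.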

Also merged the set-s and set-n commands into a single set-attr command, which now has the ability to specify an optional attribute type along with the attribute name. This still only works with the currently selected item, and I think I’ll keep it like that for the moment. I do want something to modify attributes of all marked items (or even all items), but it might be better if that was a separate command, as it may allow for some potentially useful actions like adding a suffix to the value instead of simply changing it.

Some of these command names are a bit unwieldy, like set-attr, but I’m hopeful to replace many of these with simple keystrokes down the line. I’m trying not to reserve many generic names like “set” in the off chance of adding something like TCL or similar to make simple scripts (this is in addition to something closer to JavaScript for more feature-full extensions). Nothing settled here, but trying to keep that option open.

WWDC Videos In Broadtail

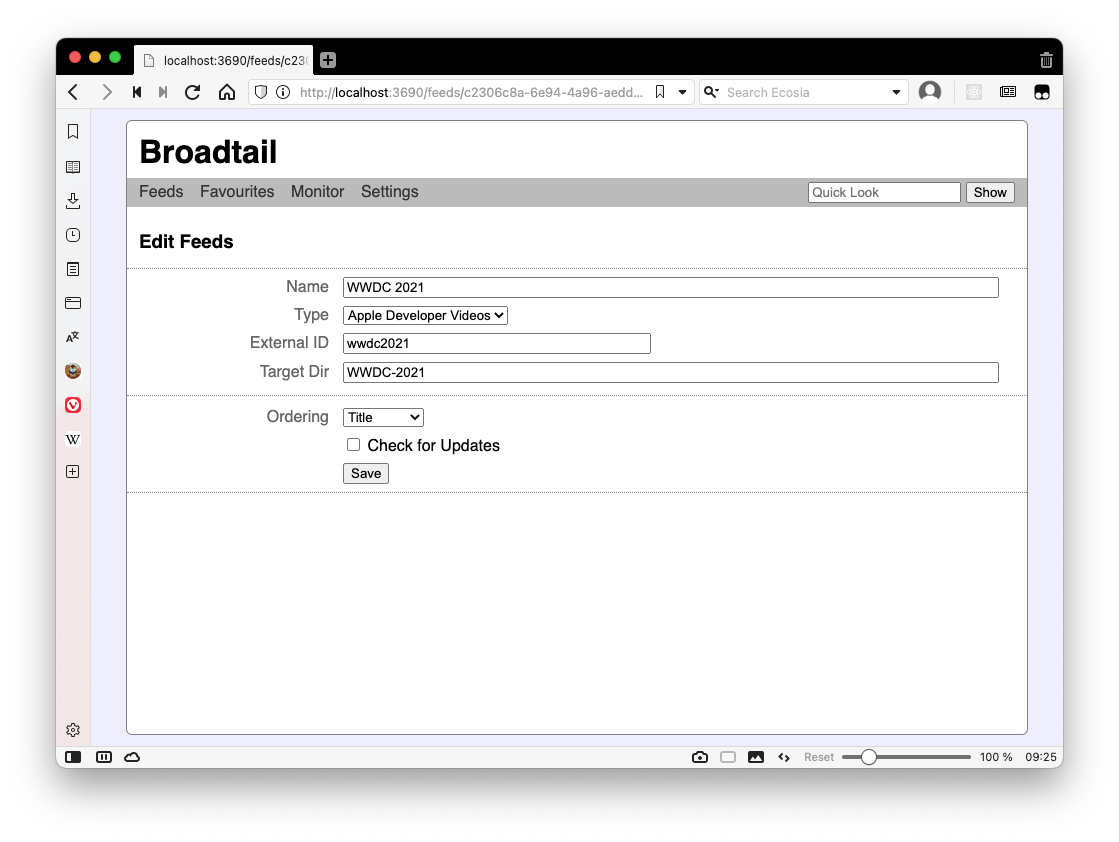

Some more work on Broadtail. This time, I added the ability to use it to download Apple WWDC videos.

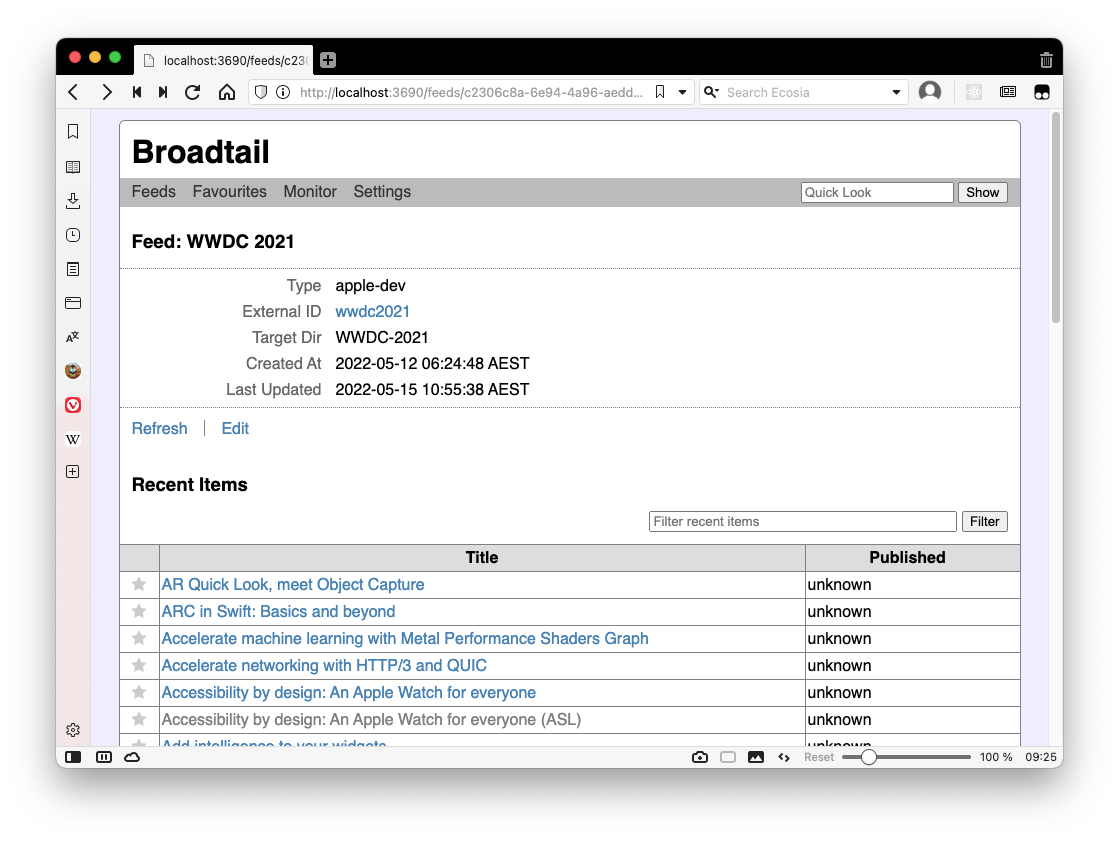

The way it works is based on the existing RSS feed concept. In order to get the list of videos for a particular WWDC year, you “subscribe” to that by setting up a feed with the new “Apple Developer Videos” type. The external ID is taken from the URL slug of the web-site that Apple publishes the session videos. For example, for WWDC 2021, the external ID would be “wwdc2021”.

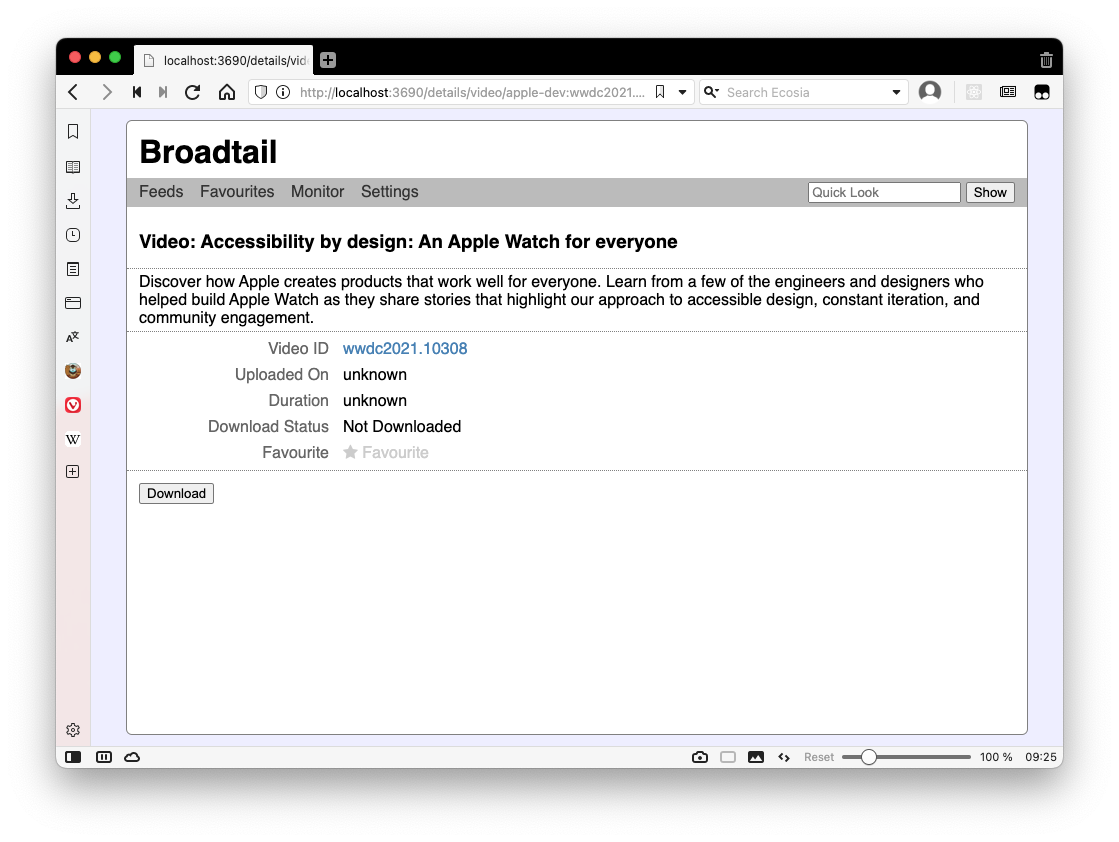

Downloading the videos is more or less the same.

There are a few differences between this feed type and the YouTube RSS feed. For instance, it only makes use of what is available from the website, which means details like publishing date or duration are not really available. This is why the “Publishing” date is displayed as “unknown”. That’s also why the videos are arranged in alphabetical order and the feed itself is not automatically refreshed (although doing so manually by clicking “Refresh” within the feed page will work). These are actually properties that can now be applied to all feeds if one wishes, although the YouTube feeds are still arranged in reverse chronological order by default.

From a coding perspective, this involved a lot of refactoring. I had been hoping to move to a more generic feed and video type, and this was the feature that eventually got me to do so. The upshot is that if I want to add more feed and video types in the future, it should be easier to do so.

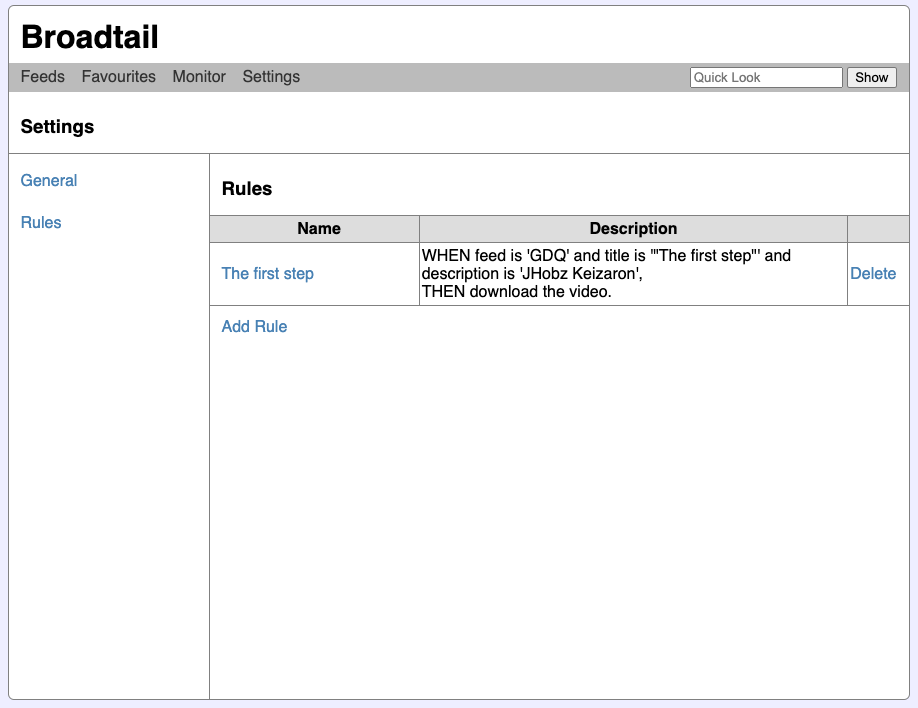

Feed Rules In Broadtail

Generally, when there’s a video that I’m interested in watching, I take a look at Broadtail to see if it’s available. When it is, I go ahead and download it.

However, some videos take a long time to download (we’re talking 10 hours or so) and they’re usually published when I’m not looking, like during the night when I’m asleep (thanks, time zones). So I thought it would be nice for Broadtail to kick off the download for me when the video shows up in the feed.

So I’ve added Feed Rules to do this.

Feed rules are very simple automations that run when new items are found during the RSS feed poll. When a video shows up in the feed and matches the rule conditions, Broadtail will perform the rule actions for that video.

Feed Rules are added as a new sub-section in “Settings”, which itself is a new top-level section of the app (the “General” sub-section is empty at this stage).

Feed Rules consist of a name, whether the rule is active, a set of conditions, and a set of actions. A feed item will need to match all the conditions of the rule in order for the actions to be performed.

The conditions of a feed rule touch upon the following properties of a feed item:

If a feed item matches all the conditions, Broadtail can perform the following actions for the feed item:

There might be more conditions or actions added in the future. So far this seems to be the bare minimum to make the feature usable.
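To make the matching behaviour concrete, here is a minimal sketch of how a rule like this could be evaluated. All the type and function names (`Rule`, `FeedItem`, `splitQuery` and so on) are hypothetical, and using an empty feed ID to mean “any” is an assumption; Broadtail's real implementation may look quite different.

```go
package main

import (
	"fmt"
	"strings"
)

type Action int

const (
	StartDownload Action = iota
	MarkFavourite
)

type FeedItem struct {
	FeedID, Title, Description string
}

// Rule is a hypothetical model of a feed rule.
type Rule struct {
	Name             string
	Active           bool
	FeedID           string // assumption: empty means "any" feed
	TitleQuery       string
	DescriptionQuery string
	Actions          []Action
}

// splitQuery breaks a query into lower-cased terms: space-separated
// tokens, with double-quoted runs kept together as phrases.
func splitQuery(q string) []string {
	var terms []string
	var cur strings.Builder
	inQuote := false
	flush := func() {
		if cur.Len() > 0 {
			terms = append(terms, strings.ToLower(cur.String()))
			cur.Reset()
		}
	}
	for _, r := range q {
		switch {
		case r == '"':
			inQuote = !inQuote
			flush()
		case r == ' ' && !inQuote:
			flush()
		default:
			cur.WriteRune(r)
		}
	}
	flush()
	return terms
}

// matchesQuery reports whether every term appears somewhere in text,
// ignoring case. An empty query matches everything.
func matchesQuery(text, query string) bool {
	lower := strings.ToLower(text)
	for _, term := range splitQuery(query) {
		if !strings.Contains(lower, term) {
			return false
		}
	}
	return true
}

// Matches reports whether the item satisfies all conditions of the rule.
func (r Rule) Matches(item FeedItem) bool {
	return r.Active &&
		(r.FeedID == "" || r.FeedID == item.FeedID) &&
		matchesQuery(item.Title, r.TitleQuery) &&
		matchesQuery(item.Description, r.DescriptionQuery)
}

func main() {
	rule := Rule{
		Name:       "Auto-download keynotes",
		Active:     true,
		TitleQuery: `keynote "state of the union"`,
		Actions:    []Action{StartDownload},
	}
	item := FeedItem{FeedID: "wwdc2021", Title: "Platforms State of the Union and Keynote"}
	if rule.Matches(item) {
		fmt.Println("would perform actions:", rule.Actions)
	}
}
```

In this sketch the feed poll would call `Matches` for each newly found item against every active rule, and run the actions of the rules that match.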

Doing a small weekend/week-long project at the moment to track favourite moments in a few podcasts I’m listening to. This is something that I’ve been thinking about for a while, and I’m not entirely sure what compelled me to actually start work on it. Probably because the system I’ve been using so far (a set of timestamped Pocket Casts links managed in Pinboard) has been growing quite a bit recently, and many of its limitations, such as the list being unordered and there being no way to skip back 30 seconds during playback, are starting to annoy me. It’s also a chance for a bit of novelty, at least for a few days or so.

It took roughly a day or so to get a small Buffalo web-app up and running which does most of what I want. It just needs some styling and a better way to play the episodes, which is what I’m working on now. I really don’t want to spend more than a week working on this; the last thing I need is more projects. But a good thing about this one is that I think the scope is naturally quite small, so there’s no real risk of it blowing out to become too large.

Two new awstool commands: one for browsing SSM parameters and one for simply viewing JSON log files. The SSM parameter one was especially handy, as I was dealing with parameter subtrees a lot and doing that in the AWS web console is always a pain. As for the JSON log viewer: well, let’s just say there were one too many log files from Kubernetes pods I needed to look at this week.

The pattern for working with state seems to be working. I may need to be a little careful that the state management doesn’t get too unwieldy as I add features and more things that need to be tracked. But at the moment, it seems to be manageable.

I’ve been racking my brain trying to work out how best to organise the code for awstools. My goals are to make view models composable, have state centralised but also localised, and keep controllers from having too much responsibility. I started another tool, which browses SSM parameters, to try and work this all out.

I think I’ve settled on the following architecture:

We’ll see how this goes and whether it will scale as additional features are added.
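To illustrate the shape of this (stateless providers and services, state held by controllers, and controller operations only exposed as commands), here is a minimal sketch. `Msg` and `Cmd` are local stand-ins that mirror the shape of Bubble Tea's `tea.Msg` and `tea.Cmd` so the example compiles on its own; `ParameterService`, `Controller` and `ParametersLoaded` are hypothetical names, not the actual awstools types.

```go
package main

import "fmt"

// Local stand-ins mirroring the shape of tea.Msg and tea.Cmd.
type Msg interface{}
type Cmd func() Msg

// ParameterService is stateless: each call receives everything it
// needs as arguments. A real one would call SSM; this returns
// canned data.
type ParameterService struct{}

func (ParameterService) List(prefix string) []string {
	return []string{prefix + "/db/host", prefix + "/db/port"}
}

// ParametersLoaded is the message delivered once the command finishes.
type ParametersLoaded struct {
	Prefix string
	Names  []string
}

// Controller owns the state and only exposes operations as commands.
type Controller struct {
	service ParameterService
	prefix  string // the currently browsed prefix
}

// ChangePrefix returns a command rather than doing the work directly,
// so the caller decides when (and on which goroutine) it runs.
func (c *Controller) ChangePrefix(prefix string) Cmd {
	return func() Msg {
		c.prefix = prefix
		return ParametersLoaded{Prefix: prefix, Names: c.service.List(prefix)}
	}
}

func main() {
	c := &Controller{}
	cmd := c.ChangePrefix("/app")
	// In a real program the Bubble Tea runtime would run the command
	// and pass the resulting message to the model's Update method.
	msg := cmd()
	fmt.Printf("%+v\n", msg)
}
```

One thing a real version would need to watch: Bubble Tea runs commands concurrently, so a controller that mutates its state inside a command would need some form of synchronisation.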

More work on the AWS tools, mainly rebuilding the UI framework. Need to rip out all the Operation type stuff, as BubbleTea already does this using messages and commands (see tutorial 2). Also taking some time building some UI models that I can reuse across the various commands, including a few that deal with layout changes.

Also tracked down what was causing that delay when trying to create a new list. It turns out that during the call to list.New(), a bunch of adaptive styles are created, which includes a test to see if the terminal is in light or dark mode. This calls some terminal IO methods which were blocking for a significant amount of time: we’re talking on the order of tens of seconds.

The good thing is that this check is only made once, so what I did was move the check into the main function. Some preliminary tests indicate that this may work: the lists are consistently being created very quickly again. We’ll see if this lasts.
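The general idea can be sketched as follows: a slow probe whose result is cached after the first call, triggered eagerly from main so that later callers never pay for it. This is only an illustration of the pattern using the standard library; `probeTerminal`, `hasDarkBackground` and `newList` are hypothetical stand-ins and not the actual library code.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

var (
	darkOnce sync.Once
	isDark   bool
	probes   int // counts how often the slow probe actually ran
)

// probeTerminal is a hypothetical stand-in for the slow terminal query.
func probeTerminal() bool {
	probes++
	time.Sleep(10 * time.Millisecond) // pretend this blocks on terminal IO
	return true
}

// hasDarkBackground runs the probe on first use and caches the result.
func hasDarkBackground() bool {
	darkOnce.Do(func() { isDark = probeTerminal() })
	return isDark
}

// newList stands in for a constructor that needs the cached answer.
func newList() string {
	if hasDarkBackground() {
		return "list with dark styles"
	}
	return "list with light styles"
}

func main() {
	// Pay the cost once, up front, before any UI is constructed.
	hasDarkBackground()

	start := time.Now()
	for i := 0; i < 3; i++ {
		newList()
	}
	fmt.Println("created 3 lists in", time.Since(start), "with", probes, "probe(s)")
}
```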

More work on Broadtail this morning. Managed to get the new favourite stuff finished. Favourites are now a dedicated entity, instead of being tied to a feed item. This means that any video can now be favourited, including ones not from a feed. The favourite list is now a top-level menu item as well.

Also found a useful CLI tool for browsing BoltDB files.

New AWS Tools Commands

For a while now, I’ve been wanting some tools which would help manage AWS resources and would also run in the terminal. I know that in most circumstances the AWS console would work, but for me, there’s a lot of benefit in doing this sort of administration from the command line.

I use the terminal a lot when I’m developing or investigating something. Much of the time while I’m in the weeds I’ve got a bunch of tabs with tools running and producing output, and I’m switching between them as I try to get something working across a bunch of systems.

This is in addition to cases when I need to manage an AWS mock running on the local machine. The AWS console will not work then.

At the start of the week, I was thinking of at least the following three tools that I would like to see exist:

As of yesterday, I actually got around to building the first two.

The first is a tool for browsing DynamoDB tables, which I’m calling dynamo-browse (yes, the names are not great). This tool does a scan of a DynamoDB table, and shows the resulting items in a table. Each item can be inspected in full in the lower half of the window by moving the selection.

At the moment this tool only does a simple scan, and some very lightweight editing of items (duplicate and delete). But already it’s proven useful with the task I was working on, especially when I came to viewing the contents of test DynamoDB instances running on the local machine.

The second tool is used for monitoring SQS queues.

This will poll for SQS messages from a queue and display them in the table here. The message itself will be pretty printed in the lower half of the screen. Messages can also be pushed to another queue. That’s pretty much it so far.

There are a bunch of things I’d like to do in these tools. Here’s a list of them:

As for when I’ll actively work on these tools, it will probably be when I need to use them. But in the short term, I’m glad I got the opportunity to start working on them. They’ve already proven quite useful to me.